We upgraded our cluster to PVE 5.1 on the 16th of November and are experiencing what appear to be memory leaks. The host has 192 GB of RAM but only runs virtual routers, so most of that RAM is normally unused. The host is hyper-converged and was previously running Ceph FileStore OSDs, which were migrated to BlueStore OSDs on the 19th of November.

i.e. the memory leaks appear to be unrelated to the FileStore or BlueStore OSDs, and instead related to the PVE 5.1 or Ceph Luminous upgrade.

Each host has only 4 HDD OSDs, so maximum OSD memory utilisation should be around 1 GB per HDD OSD (or 3 GB per SSD OSD), roughly 4 GB per host in total.
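As a rough sanity check of that budget, here is a minimal Python sketch. It takes the 1 GB (HDD) and 3 GB (SSD) per-OSD figures above as given, treats them as GiB, and compares them with the 6282M RES that htop reports further down for `ceph-osd --id 0`. The function names are illustrative only, not part of any Ceph or Proxmox tool.

```python
MIB_PER_GIB = 1024


def expected_osd_budget_gib(hdd_osds: int, ssd_osds: int = 0,
                            per_hdd_gib: float = 1.0,
                            per_ssd_gib: float = 3.0) -> float:
    """Expected upper bound on OSD memory for one host, in GiB (assumed per-OSD figures)."""
    return hdd_osds * per_hdd_gib + ssd_osds * per_ssd_gib


def overshoot_factor(observed_res_mib: float, per_osd_gib: float) -> float:
    """How many times a single OSD's resident memory exceeds its per-OSD budget."""
    return observed_res_mib / (per_osd_gib * MIB_PER_GIB)


if __name__ == "__main__":
    budget = expected_osd_budget_gib(hdd_osds=4)
    # 6282M is the RES value htop shows for ceph-osd --id 0 in this post.
    factor = overshoot_factor(6282, per_osd_gib=1.0)
    print(f"expected OSD memory per host: {budget:.1f} GiB")
    print(f"osd.0 resident memory vs. its 1 GiB budget: {factor:.1f}x")
```

Run as-is, it prints a 4.0 GiB per-host budget and a factor of about 6.1x for that single OSD.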

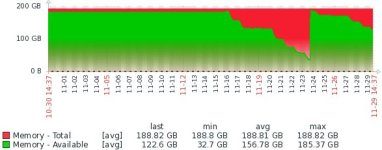

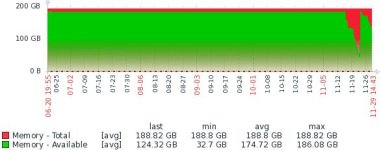

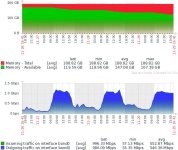

The graphs above include almost 6 months of history.


Herewith the output of 'top':

```
top - 14:45:20 up 5 days, 18:27, 1 user, load average: 5.23, 5.23, 5.34
Tasks: 604 total, 2 running, 601 sleeping, 0 stopped, 1 zombie
%Cpu(s): 9.8 us, 6.3 sy, 0.0 ni, 80.0 id, 3.5 wa, 0.0 hi, 0.4 si, 0.0 st
KiB Mem : 19799395+total, 10125188+free, 95258368 used, 1483688 buff/cache
KiB Swap: 26830438+total, 26830438+free, 0 used. 12658549+avail Mem
```

And 'htop' with sorting by memory utilisation:

```
1 [|||||| 15.3%] 6 [||||||| 17.4%] 11 [|| 3.3%] 16 [|| 2.1%]
2 [||||| 11.8%] 7 [||||||||||||||||| 49.4%] 12 [|| 1.7%] 17 [|| 1.6%]
3 [|||| 10.7%] 8 [||||||||||||||| 44.2%] 13 [|| 2.1%] 18 [|| 2.5%]
4 [||||| 10.0%] 9 [||||||||||||||| 46.0%] 14 [|| 2.1%] 19 [|| 2.9%]
5 [|||||||||||| 34.7%] 10 [||||||||||||||||||||||||||80.4%] 15 [|| 2.9%] 20 [|| 2.5%]
Mem[|||||||||||||||||||||||||||||||||||| 91.0G/189G] Tasks: 75, 378 thr; 6 running
Swp[ 0K/256G] Load average: 5.40 5.24 5.33
Uptime: 5 days, 18:28:16

PID USER PRI NI VIRT RES SHR S CPU% MEM% TIME+ Command
30326 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 9:31.79 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30327 ceph 20 0 7065M 6282M 28936 S 0.8 3.2 59:55.45 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30328 ceph 20 0 7065M 6282M 28936 S 0.8 3.2 58:13.03 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30329 ceph 20 0 7065M 6282M 28936 S 0.8 3.2 38:58.86 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30331 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:01.11 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30332 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30394 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:01.49 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30395 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:05.99 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30396 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:15.79 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30397 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30398 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:04.58 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30399 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 8:09.85 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30400 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 4:30.37 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30401 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:14.82 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30421 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30422 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 21:29.48 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30423 ceph 20 0 7065M 6282M 28936 S 1.2 3.2 1h10:23 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30424 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 22:27.70 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30425 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 2:41.31 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30426 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:01.18 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30427 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30428 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:16.50 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30429 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30430 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30431 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30432 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30433 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30434 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30435 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30436 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30437 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30438 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30439 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30440 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:03.26 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30441 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30442 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:37.06 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30443 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:09.19 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30444 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:09.49 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30445 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 10:49.98 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30446 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 18:18.19 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30447 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 5:44.48 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30448 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 8:29.75 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30449 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 23:07.27 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30450 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 4:55.51 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30451 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 7:31.26 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30452 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 11:50.82 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30453 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 10:49.41 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30454 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 18:20.21 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30455 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 5:45.58 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30456 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 8:30.74 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30457 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 23:07.64 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30458 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 4:53.78 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30459 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 7:32.11 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30460 ceph 20 0 7065M 6282M 28936 S 0.4 3.2 11:50.66 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30461 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:04.07 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30462 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:03.63 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30463 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:36.13 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30464 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30465 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30466 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.18 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30467 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30468 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30469 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.00 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30470 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 0:00.04 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
```
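Every row in the htop listing above has the same command line (`ceph-osd ... --id 0`), the same VIRT, RES and SHR, and closely numbered PIDs. That pattern is what htop shows when it lists the userland threads of one process: the threads share a single address space, so 6282M is most likely the resident memory of the osd.0 process as a whole, not 64 × 6282M. The Python sketch below groups such rows so that RES is counted once; the helper names are hypothetical, and grouping on identical command and memory columns is a heuristic, not proof that rows belong to the same process.

```python
from collections import defaultdict

# Assumed column layout, as in the htop listing in this post:
# PID USER PRI NI VIRT RES SHR S CPU% MEM% TIME+ Command
UNIT_TO_MIB = {"K": 1 / 1024, "M": 1.0, "G": 1024.0, "T": 1024.0 * 1024}


def res_to_mib(value: str) -> float:
    """Convert an htop memory column ('6282M', or '28936' meaning KiB) to MiB."""
    suffix = value[-1]
    if suffix in UNIT_TO_MIB:
        return float(value[:-1]) * UNIT_TO_MIB[suffix]
    return float(value) / 1024


def group_rows(lines):
    """Group rows sharing command line and memory figures (likely threads of one process)."""
    groups = defaultdict(list)
    for line in lines:
        if not line.strip():
            continue
        cols = line.split(None, 11)  # maxsplit keeps the Command column intact
        pid, virt, res, shr, command = int(cols[0]), cols[4], cols[5], cols[6], cols[11]
        groups[(command, virt, res, shr)].append(pid)
    return groups


if __name__ == "__main__":
    sample = """\
30326 ceph 20 0 7065M 6282M 28936 S 0.0 3.2 9:31.79 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30327 ceph 20 0 7065M 6282M 28936 S 0.8 3.2 59:55.45 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
30423 ceph 20 0 7065M 6282M 28936 S 1.2 3.2 1h10:23 /usr/bin/ceph-osd -f --cluster ceph --id 0 --setuser ceph --setgroup ceph
"""
    for (command, virt, res, shr), pids in group_rows(sample.splitlines()).items():
        # Count RES once per group rather than once per row.
        print(f"{len(pids)} rows, RES {res_to_mib(res):.0f} MiB counted once: {command}")
```

In htop itself, pressing H toggles the display of userland threads, which gives the same one-row-per-process view directly.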
