Hi Proxmox enthusiasts!

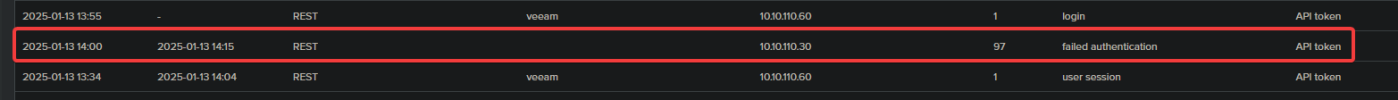

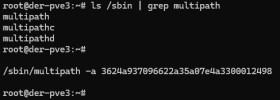

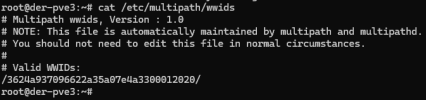

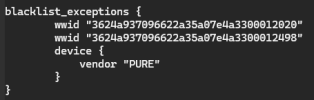

I ran into a problem with iSCSI fault tolerance in Proxmox. The current version of Proxmox VE lacks native support for iSCSI with multipath, which is critical for infrastructure reliability. To address this, I wrote a custom storage plugin, which is now available to everyone on GitHub.

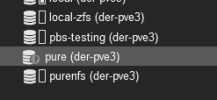

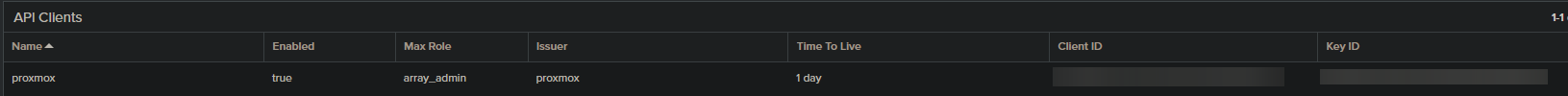

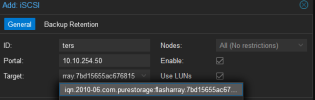

We use a PureStorage FlashArray FA-X20R3 (datasheet) with Proxmox VE v8. The plugin has already proven helpful for many of our storage management and integration tasks.

What My Plugin Can Do:

• Live migration – seamless and interruption-free.

• Snapshot creation and copying – convenient for backups and rollbacks.

• Creating VM disks as separate volumes – this unlocks the powerful features of PureStorage, such as automatic snapshot and backup policies.

Why It Matters:

Because each VM disk is created as its own volume on the array, you can apply PureStorage features per disk to ensure data reliability and fault tolerance. For example:

• Automating backups with Pure’s built-in features.

• Flexible management of storage policies.

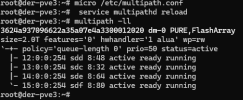

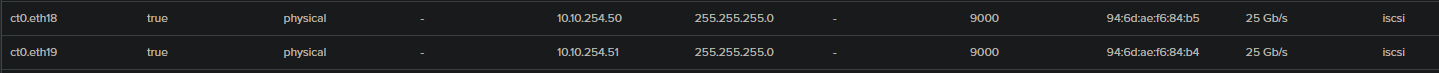

• Supporting multipath connections, significantly improving system performance and resilience.
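To make the multipath point concrete, here is a minimal sketch of how you might check path health on a node. It is not taken from the plugin. It assumes text in the general shape printed by `multipath -ll` from multipath-tools (the exact layout can differ between versions), and the WWID, device names, and the `count_paths` / `degraded` helpers are made up for illustration.

```python
import re

# Sample text in the general shape printed by `multipath -ll`.
# The WWID, device names and size are hypothetical.
SAMPLE = """\
3624a93700000000000000000000000a1 dm-0 PURE,FlashArray
size=32G features='0' hwhandler='1 alua' wp=rw
`-+- policy='service-time 0' prio=50 status=active
  |- 1:0:0:1 sdb 8:16 active ready running
  |- 2:0:0:1 sdc 8:32 active ready running
  `- 3:0:0:1 sdd 8:48 failed faulty running
"""

# Map header line: name (optionally followed by "(wwid)"), then dm-N.
MAP_RE = re.compile(r"^(\S+)(?:\s+\((\S+)\))?\s+(dm-\d+)\s+")

# Path line: tree characters, H:C:T:L address, device, major:minor, three states.
PATH_RE = re.compile(
    r"^[\s|`]*-\s+(\d+:\d+:\d+:\d+)\s+(\S+)\s+\d+:\d+\s+(\S+)\s+(\S+)\s+(\S+)\s*$"
)


def count_paths(text):
    """Return {map_name: {"active": n, "total": m}} for each multipath map."""
    result = {}
    current = None
    for line in text.splitlines():
        header = MAP_RE.match(line)
        if header:
            current = header.group(1)
            result[current] = {"active": 0, "total": 0}
            continue
        path = PATH_RE.match(line)
        if path and current is not None:
            result[current]["total"] += 1
            # Assumption: "active" + "ready" is what we count as a healthy path.
            if path.group(3) == "active" and path.group(4) == "ready":
                result[current]["active"] += 1
    return result


def degraded(summary, minimum=2):
    """Names of maps with fewer than `minimum` healthy paths."""
    return sorted(name for name, c in summary.items() if c["active"] < minimum)


if __name__ == "__main__":
    summary = count_paths(SAMPLE)
    print(summary)
    print(degraded(summary, minimum=3))
```

On a real node you would feed the function the captured output of `multipath -ll` instead of `SAMPLE`. A volume that still has two or more healthy paths keeps serving I/O when one path fails, which is the resilience the bullet above refers to.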

Technical Details:

• Storage: PureStorage FlashArray FA-X20R3 (FW 6.5.8).

• Tested Proxmox VE version: 8.2.*.

Where to Find and How to Install:

The full plugin source is available in a public repository on GitHub, along with detailed installation and setup instructions.

What’s Next:

I am open to feedback and suggestions for improving the plugin. If you have ideas, feature requests, or bug reports, feel free to contact me through GitHub or leave a comment on the project.

I hope this plugin will be useful for you as well!
