The plugin communicates with the Pure array through its REST API, using the array's management interface.

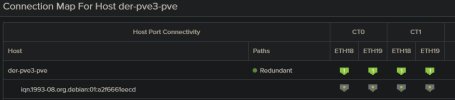

It then uses `iscsiadm` and `multipath` to add and remove device mappings. For that to work, iSCSI and multipathing must be properly configured and the array must be discoverable from the PVE host(s).

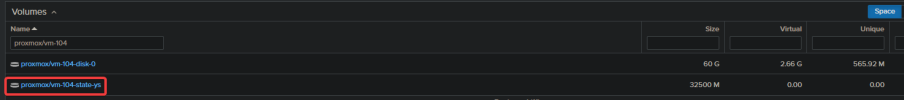

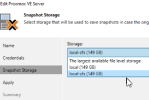
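The exact commands the plugin issues are not listed here. As a rough sketch, assuming a single array portal and target IQN (both placeholder values), the open-iscsi and multipath-tools steps it relies on look roughly like this. The function `iscsi_attach_commands` is hypothetical and only builds the argument lists (a dry run), so nothing is executed on the host.

```python
from typing import List


def iscsi_attach_commands(portal: str, target_iqn: str) -> List[List[str]]:
    """Build (but do not run) iscsiadm/multipath calls for one portal.

    Hypothetical helper: portal and target_iqn are placeholders, and the
    real plugin may use a different sequence of commands.
    """
    return [
        # Ask the portal which targets it exposes (SendTargets discovery).
        ["iscsiadm", "-m", "discovery", "-t", "sendtargets", "-p", portal],
        # Log in to the target on that portal.
        ["iscsiadm", "-m", "node", "-T", target_iqn, "-p", portal, "--login"],
        # Rescan existing sessions so newly mapped volumes appear.
        ["iscsiadm", "-m", "session", "--rescan"],
        # Reload multipath device maps, then list them.
        ["multipath", "-r"],
        ["multipath", "-ll"],
    ]


if __name__ == "__main__":
    # Placeholder portal address and IQN, for illustration only.
    for cmd in iscsi_attach_commands("192.0.2.10", "iqn.2000-01.com.example:array"):
        print(" ".join(cmd))
```

To actually run a step on a host, each list can be passed to `subprocess.run(cmd, check=True)`; keeping them as lists avoids shell quoting issues.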

I would start by attaching a Pure volume to PVE via Datacenter -> Storage.

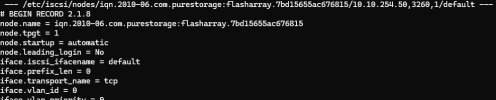
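If you prefer the command line, the same test storage can be defined with `pvesm add iscsi`. The sketch below only builds that command; the storage ID, portal and IQN are placeholders, and `pvesm_add_iscsi` is a hypothetical helper, not part of the plugin.

```python
from typing import List


def pvesm_add_iscsi(storage_id: str, portal: str, target_iqn: str) -> List[str]:
    """Return the pvesm call that mirrors adding iSCSI storage in the GUI.

    Hypothetical helper; all arguments are placeholders for your array.
    """
    return [
        "pvesm", "add", "iscsi", storage_id,
        "--portal", portal,      # array iSCSI portal address
        "--target", target_iqn,  # array target IQN
    ]


if __name__ == "__main__":
    cmd = pvesm_add_iscsi("pure-test", "192.0.2.10", "iqn.2000-01.com.example:array")
    print(" ".join(cmd))
```

Once the check below passes, the test definition can be dropped again with `pvesm remove pure-test`.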

If that works, confirm that `node.startup` is set to `automatic` for the array in `/etc/iscsi/nodes` and that `multipath -ll` produces meaningful output.
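The `node.startup` check can be scripted. This is a minimal sketch that assumes the usual open-iscsi layout, where each node record under `/etc/iscsi/nodes` is a text file of `key = value` lines. It reports every record whose `node.startup` is not `automatic`. The helper names are hypothetical.

```python
import os
from typing import Dict


def node_startup_settings(nodes_dir: str = "/etc/iscsi/nodes") -> Dict[str, str]:
    """Map each node record file under nodes_dir to its node.startup value."""
    settings: Dict[str, str] = {}
    for root, _dirs, files in os.walk(nodes_dir):
        for name in files:
            path = os.path.join(root, name)
            with open(path, encoding="utf-8", errors="replace") as fh:
                for line in fh:
                    key, sep, value = line.partition("=")
                    # Match node.startup exactly, not node.conn[0].startup.
                    if sep and key.strip() == "node.startup":
                        settings[path] = value.strip()
    return settings


def non_automatic(settings: Dict[str, str]) -> Dict[str, str]:
    """Keep only the records whose node.startup is not 'automatic'."""
    return {path: v for path, v in settings.items() if v != "automatic"}


if __name__ == "__main__":
    bad = non_automatic(node_startup_settings())
    for path, value in bad.items():
        print(f"{path}: node.startup = {value}")
    print("all automatic" if not bad else f"{len(bad)} record(s) need attention")
```

A record flagged here can be switched with `iscsiadm -m node -T <target> -p <portal> --op update -n node.startup -v automatic`.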

Note: you do not need the iSCSI storage afterwards. The plugin handles the device mappings automatically.