
Proxmox VE Ceph Server released (beta)

- Thread starter martin



ATTO benchmark and Iometer, using the test files from the Open Performance Test (available here: http://vmktree.org/iometer/) and run from a Windows guest, should provide the requested info.

Also, the latest version of fio (git) can now use rbd directly!

http://telekomcloud.github.io/ceph/2014/02/26/ceph-performance-analysis_fio_rbd.html

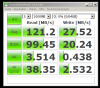

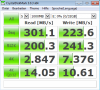

I got a CrystalDiskMark result from a Win7 VM running on our Ceph cluster. Hardware and network are described here (http://pve.proxmox.com/wiki/Ceph_Server#Recommended_hardware). All pools use replication 3.

Code:

    qm config 104
    bootdisk: virtio0
    cores: 6
    ide0: none,media=cdrom
    memory: 2048
    name: windows7-spice
    net0: virtio=0A:8B:AB:10:10:49,bridge=vmbr0
    ostype: win7
    parent: demo
    sockets: 1
    vga: qxl2
    virtio0: local:104/vm-104-disk-1.qcow2,format=qcow2,cache=writeback,size=32G
    virtio1: ceph3:vm-104-disk-1,cache=writeback,size=32G

some rados benchmarks on the same cluster (replication 3):


write speed

Code:

    rados -p test3 bench 60 write --no-cleanup
    ...
    2014-03-10 20:56:08.302342 min lat: 0.037403 max lat: 4.61637 avg lat: 0.23234
       sec Cur ops   started  finished  avg MB/s  cur MB/s  last lat   avg lat
        60      16      4143      4127   275.094       412  0.141569   0.23234
    Total time run:         60.120882
    Total writes made:      4144
    Write size:             4194304
    Bandwidth (MB/sec):     275.711
    Stddev Bandwidth:       131.575
    Max bandwidth (MB/sec): 416
    Min bandwidth (MB/sec): 0
    Average Latency:        0.232095
    Stddev Latency:         0.378471
    Max latency:            4.61637
    Min latency:            0.037403

read speed

Code:

    rados -p test3 bench 60 seq
    ...
    Total time run:        13.370731
    Total reads made:      4144
    Read size:             4194304
    Bandwidth (MB/sec):    1239.723
    Average Latency:       0.0515508
    Max latency:           0.673166
    Min latency:           0.008432

Absolutely great things to read about Proxmox - my congrats to the Proxmox devs and contributors.
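As a sanity check on the rados bench figures posted above: the reported bandwidth appears to be simply the total data moved divided by the total run time, with "MB" meaning 2^20 bytes. A minimal Python sketch of that arithmetic follows; the function name is made up for illustration, and the inputs are copied from the two outputs above.

```python
def bench_bandwidth(ops, op_size_bytes, seconds):
    """Return throughput in MB/s, taking 1 MB = 2**20 bytes."""
    return ops * op_size_bytes / seconds / 2**20

# Values copied from the write and seq (read) runs posted above.
write_bw = bench_bandwidth(4144, 4194304, 60.120882)
read_bw = bench_bandwidth(4144, 4194304, 13.370731)

print(f"write: {write_bw:.3f} MB/s")
print(f"read:  {read_bw:.3f} MB/s")
```

Both results should agree with the posted 275.711 and 1239.723 MB/sec to within rounding. The read run finished in about 13 seconds because it only reads back the 4144 objects left behind by the write run with --no-cleanup.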

For my understanding (and others too?) who are not as familiar with Ceph as you guys:

I read that Ceph needs at least 2 copies for data safety, but more than 2 copies for HA (according to http://ceph.com/docs/master/architecture/),

however the Proxmox wiki suggests 3 nodes as the minimum for Ceph.

Now I understand that Ceph, for production use wants > 2 copies, that's fair enough, I do see the point.

However: Can it be tested and configured with only 2 nodes?

I'd have 2 servers available for some testing, but not 3 - for production that would be possible though.

I ran a 2-node Ceph cluster in a stressful production environment for the last 10 months. No issues. Only recently I added a 3rd node to increase performance, and we are anticipating growth of our data. Even with 3 nodes you can still use 2 copies.

I would just like to point out that if you have more than 6 OSDs per node, it is a wise idea to put the journal on the OSDs themselves. As you increase your number of OSDs, putting each journal on its own OSD reduces the risk of losing multiple OSDs together. This way you only have to worry about losing one OSD and its journal.
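To put a number on the journal argument above, here is a rough Python sketch. It assumes that an OSD becomes unusable when the device holding its journal fails, and that OSDs are spread evenly over the shared journal devices; the function name and the example node sizes are hypothetical.

```python
import math

def osds_lost_per_journal_failure(osds_per_node, journal_devices):
    """Worst-case number of OSDs taken down by one journal device failure.

    journal_devices == 0 means every OSD keeps its journal on its own disk.
    """
    if journal_devices == 0:
        return 1  # only the OSD whose disk failed is affected
    # Assumption: OSDs are distributed evenly over the shared journal devices.
    return math.ceil(osds_per_node / journal_devices)

for journals in (0, 1, 2):
    lost = osds_lost_per_journal_failure(8, journals)
    print(f"8 OSDs, {journals} shared journal device(s): up to {lost} OSD(s) lost")
```

With 8 OSDs per node, a single shared journal device puts all 8 at risk at once, two devices halve that, and colocated journals limit a failure to one OSD. This ignores the performance side of the trade-off, which is why journals are put on SSDs in the first place.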


Hi Tom,

thank you for your performance tests.

One question. I think using VMs with writeback disk cache in production systems is not a good decision.

Please can you change to cache=none and post the CrystalDiskMark results again.

Thank you very much.

Regards,

Oer

the CrystalDiskMark benchmarks are not so wow. especially the 4k reads/writes are really poor. i have more or less the same speed (4k) on servers with 2 SATA disks (RAID 1)

does using replication of 2 perform better?

also i think using SSDs for the journal is a risk, like mentioned above. it can get even worse when the SSDs reach the end of their life cycle or have some other problems. as they are all the same model and see more or less the same data and reads/writes because of replication, it can happen that all of them fail at the same time, killing the whole cluster....


if you run the same benchmark in parallel, e.g. in 100 guests, you will see the difference between Ceph RBD and your SATA RAID 1. if your goal is a very fast single VM, then Ceph is not the winner. a fast hardware RAID with a lot of cache, SSD only or SSD & SAS HDDs, is a good choice here.


You need to be prepared for failing OSD and journal disks, and you need to design your Ceph hardware according to your goals. If money is no concern, just use enterprise-class SSDs for all your OSDs. The really cool feature is that with Ceph you have the freedom to choose your hardware according to your needs, and you can always upgrade your hardware without downtime. Replacing OSDs and journal SSDs can all be done via our new GUI (of course, someone needs to plug the new disk into your servers first).


Hi Tom,

can you please do these performance tests.

it would be very important to me.

Thank you very much.

Regards,

Oer

Hi Tom,

thank you for your performance tests.

One question. I think using VMs with writeback disk cache in production systems is not a good decision.

..

Why not? Writeback is the recommended setting for ceph rbd if you want good write performance.

Feature request: add support for disk partitions. The command pveceph createosd /dev/sd[X] can use only a WHOLE disk, not a disk partition like /dev/sdd4. A clean Ceph installation supports partitions.

http://forum.proxmox.com/threads/17863-Ceph-Server-why-block-devices-and-not-partitions

and

http://forum.proxmox.com/threads/17909-Ceph-server-feedback

RTFF (read the "famous" forum)...

Marco


See http://forum.proxmox.com/threads/17863-Ceph-Server-why-block-devices-and-not-partitions

How far along is Proxmox with using OpenVZ on Ceph? Either by ploop images or the file system part of Ceph? Will either of the above solutions be available any time soon?


nothing usable for now but yes, containers on distributed storage would be nice.

I just set up a 3-node Proxmox cluster that's being virtualized by Proxmox. While I can't run KVM VMs on this, I can test pve-ceph. I noticed the status on the web interface saying HEALTH_WARN, not specifying details. I can only speculate, but maybe this display does not have all the possible circumstances covered yet?


Anyway, the reason for the health warning is clock skew, meaning the system times of the nodes are too far apart (Ceph allows a difference of .05s by default). Since this is a virtualized cluster I have no problem blaming this issue solely on KVM, so this is not a bug report or anything.

I wanted to leave the following hint however:

in the [global] section of /etc/pve/ceph.conf you can add

Code:

    mon clock drift allowed = .3

to make the test cluster say HEALTH_OK. It may not be a good idea to do this on production clusters, but then again, the Ceph mailing list does say that setting this to .1 or even .2 should be okay. Additionally, specifying 1-3 local NTP servers in /etc/ntp.conf might help (it did not for me).
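To illustrate what the setting changes, here is a small Python sketch. It is a simplification, not how the Ceph monitors actually measure skew: it reads the option from a config snippet with the standard configparser module and checks a list of clock offsets (in seconds, relative to some reference node) against it. The function names and the sample offsets are made up, the .05 default is the one quoted in the post, and only the spelling with spaces is handled.

```python
import configparser

# Default quoted in the post above (.05s); taken as given here.
DEFAULT_DRIFT = 0.05

def allowed_drift(conf_text):
    """Read 'mon clock drift allowed' from [global], else fall back to the default."""
    parser = configparser.ConfigParser()
    parser.read_string(conf_text)
    return parser.getfloat("global", "mon clock drift allowed",
                           fallback=DEFAULT_DRIFT)

def clocks_ok(offsets, limit):
    """True if every offset (seconds, relative to a reference node) is within limit."""
    return all(abs(offset) <= limit for offset in offsets)

conf = "[global]\nmon clock drift allowed = .3\n"
offsets = [0.0, 0.12, -0.08]  # hypothetical measurements from three nodes

print(clocks_ok(offsets, allowed_drift(conf)))  # True with the relaxed .3 limit
print(clocks_ok(offsets, DEFAULT_DRIFT))        # False with the .05 default
```

The same offsets that trip the warning at the default pass once the limit is raised, which is all the hint does: it hides the skew rather than fixing it, so it only makes sense on a test cluster.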

Funny sidenote: Even though this is a virtual testing cluster, "rados bench -p test 300 write" is STILL giving me rates that exceed a single physical disk! This setup is terribly bad for performance, but I am still getting good rates (for such a test anyways...). The pool has size=3, this Ceph has 1GBit networking and the virtual OSDs are stored on some fibrechannel SAN box.

Write bench is giving me 35MB/s throughput (between 3 ceph nodes, 2 OSDs each)
