I will preface this by saying I am a total Proxmox/Ceph noob. I am good with Linux, and I have been a VMware admin/engineer for the better part of two decades.

I have looked through dozens of walkthroughs and videos, but I am still struggling with Ceph and need some help.

Goal:

- HA VM/Storage Cluster with Tiered Storage Capability
  - NVMe
  - SSD
  - Spinner

Current Environment:

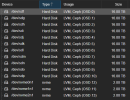

- 4x Compute Nodes Dell PowerEdge R650 w/
  - 1TB RAM
  - 2x 64GB SATA DOM Proxmox OS
  - 3x 2TB SSD RAIDZ ZFS Local Storage
- 3x SuperMicro Mass storage servers w/
  - 512GB RAM
  - 2x 64GB SATA DOM Proxmox OS
  - 4x 2TB NVMe
  - 12x 2TB SSD
  - 14x 16TB HDD Spinner Drives
- 40Gbps interface dedicated Ceph/Cluster Network
- 4x10Gbps Bonded Client Network
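
For a rough sense of scale, here is a quick sketch of the raw capacity per tier across the three storage servers. It assumes the drive counts listed above are per server, and that each tier would end up as its own replicated pool with 3 copies. The replica count is only my assumption, since nothing is configured yet, and the names in the script are purely illustrative. It also ignores any Ceph overhead or free-space headroom.

```python
# Rough per-tier capacity estimate for the 3 storage servers.
# Assumptions (not confirmed): drive counts are per server, and each
# tier is a replicated pool keeping REPLICAS copies of every object.

NODES = 3      # SuperMicro storage servers
REPLICAS = 3   # assumed replica count per pool

# tier name -> (drives per server, size of each drive in TB)
TIERS = {
    "nvme": (4, 2),
    "ssd": (12, 2),
    "hdd": (14, 16),
}


def tier_capacity(nodes, replicas, tiers):
    """Return {tier: (raw_tb, usable_tb)}, ignoring overhead and headroom."""
    result = {}
    for name, (count, size_tb) in tiers.items():
        raw_tb = nodes * count * size_tb
        result[name] = (raw_tb, raw_tb / replicas)
    return result


if __name__ == "__main__":
    for name, (raw_tb, usable_tb) in tier_capacity(NODES, REPLICAS, TIERS).items():
        print(f"{name}: {raw_tb} TB raw, about {usable_tb:.0f} TB usable at {REPLICAS}x")
```

Under those assumptions that works out to 24 TB raw on NVMe, 72 TB on SSD, and 672 TB on the spinners, before dividing by the replica count.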

Any help or advice would be greatly appreciated!
