This is a new build. I have tried both PVE 7.2 and 8.0 with the same results.

The system crashes randomly, even when it is not under heavy load.

I have tried various things, such as CPU/power and memory stress tests. I managed to crash it once with a memory test but couldn't reproduce it.

The system mainly runs LXC containers, with a cron job that trims them once a week:

```bash
#!/bin/bash

# Path to fstrim
FSTRIM=/sbin/fstrim

# List of host volumes to trim, separated by spaces
# e.g. TRIMVOLS="/mnt/a /mnt/b /mnt/c"
TRIMVOLS="/"

## Trim all LXC containers ##
echo "LXC CONTAINERS"
# pct list prints a header line; keep only rows that start with a numeric VMID
for i in $(/sbin/pct list | awk '/^[0-9]/ {print $1}'); do
    echo "Trimming Container $i"
    /sbin/pct fstrim "$i" 2>&1 | logger -t "pct fstrim [$$]"
done
echo ""

## Trim host volumes ##
echo "HOST VOLUMES"
# TRIMVOLS is intentionally unquoted so it splits on spaces
for i in $TRIMVOLS; do
    echo "Trimming $i"
    "$FSTRIM" -v "$i" 2>&1 | logger -t "fstrim [$$]"
done
```

Journal error log:

```
root@pve04:/var/log/journal/a31d1bf8dc6941c59cdbb78748c91267# journalctl -p err -f
Aug 09 15:27:00 pve04 kernel: mce: [Hardware Error]: CPU 4: Machine Check: 0 Bank 1: bc800800060c0859
Aug 09 15:27:00 pve04 kernel: mce: [Hardware Error]: TSC 0 ADDR da6954e40 MISC d012000000000000 IPID 100b000000000
Aug 09 15:27:00 pve04 kernel: mce: [Hardware Error]: PROCESSOR 2:a20f12 TIME 1691612813 SOCKET 0 APIC 8 microcode a20120a
Aug 09 15:27:02 pve04 smartd[1978]: Device: /dev/nvme1, number of Error Log entries increased from 44 to 45
Aug 09 15:27:03 pve04 smartd[1978]: Device: /dev/nvme2, number of Error Log entries increased from 44 to 45
```
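As a rough aid for reading the first `mce` line, the status word printed after `Bank 1:` can be split into its flag bits. This is only a minimal sketch, assuming the commonly documented machine-check status layout (bit 63 VAL, 62 OVER, 61 UC, 60 EN, 59 MISCV, 58 ADDRV, 57 PCC, low 16 bits the error code). The function names `mce_bit` and `mce_decode` are made up for illustration, and vendor-specific bits are not decoded.

```bash
#!/bin/bash

# Print the value (0 or 1) of a single bit of a 64-bit status word.
# $1 = status word (hex with 0x prefix), $2 = bit position
mce_bit() {
    echo $(( ($1 >> $2) & 1 ))
}

# Print the top flag bits (assumed layout, see above) and the low
# 16-bit error code of a machine-check status word.
mce_decode() {
    local status=$1
    local names=(VAL OVER UC EN MISCV ADDRV PCC)
    local bit=63 name
    for name in "${names[@]}"; do
        printf '%s=%s ' "$name" "$(mce_bit "$status" "$bit")"
        bit=$((bit - 1))
    done
    printf 'errcode=0x%04x\n' $(( status & 0xffff ))
}

# Status word taken from the journal line above
mce_decode 0xbc800800060c0859
# prints: VAL=1 OVER=0 UC=1 EN=1 MISCV=1 ADDRV=1 PCC=0 errcode=0x0859
```

Under that assumed layout, UC=1 would mean the error was reported as uncorrected, and ADDRV=1 that the `ADDR` field in the second log line is valid.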

I tried reseating the memory and made sure everything is seated correctly; all good there.

LXC container config:

```
arch: amd64
cores: 4
hostname: n1-grp7-10.1.70.2-16127-hdd1
memory: 7178
net0: name=eth0,bridge=vmbr0,firewall=1,gw=10.1.70.1,hwaddr=0A:41:E9:5D D:81,ip=10.1.70.2/24,rate=4,tag=70,type=veth
onboot: 0
ostype: ubuntu
rootfs: local-lvm:vm-7001-disk-0,size=240G
swap: 0
lxc.apparmor.profile: unconfined
lxc.cgroup2.devices.allow: a
lxc.cap.drop:
lxc.cgroup2.devices.allow: b 7:* rwm
lxc.cgroup2.devices.allow: c 10:237 rwm
lxc.mount.entry: /dev/loop0 dev/loop0 none bind,create=file 0 0
lxc.mount.entry: /dev/loop1 dev/loop1 none bind,create=file 0 0
lxc.mount.entry: /dev/loop2 dev/loop2 none bind,create=file 0 0
lxc.mount.entry: /dev/loop3 dev/loop3 none bind,create=file 0 0
lxc.mount.entry: /dev/loop4 dev/loop4 none bind,create=file 0 0
lxc.mount.entry: /dev/loop5 dev/loop5 none bind,create=file 0 0
lxc.mount.entry: /dev/loop6 dev/loop6 none bind,create=file 0 0
lxc.mount.entry: /dev/loop7 dev/loop7 none bind,create=file 0 0
lxc.mount.entry: /dev/loop8 dev/loop8 none bind,create=file 0 0
lxc.mount.entry: /dev/loop9 dev/loop9 none bind,create=file 0 0
lxc.mount.entry: /dev/loop10 dev/loop10 none bind,create=file 0 0
# ... and so on, all the way to loop99
lxc.mount.entry: /dev/loop99 dev/loop99 none bind,create=file 0 0
lxc.mount.entry: /dev/loop-control dev/loop-control none bind,create=file 0
```

SVM is enabled. I read on one forum about someone disabling C-states, but I'm not sure what effect that has.
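Before changing anything in the BIOS, the idle states (C-states) the kernel currently exposes can be listed from sysfs. This is a minimal sketch, assuming the standard cpuidle layout under `/sys/devices/system/cpu/cpu0/cpuidle`; the function name `list_cstates` and its optional directory argument are made up for illustration.

```bash
#!/bin/bash

# List each cpuidle state of one CPU with its name and whether it is
# currently disabled. $1 = cpuidle directory (defaults to cpu0).
list_cstates() {
    local dir=${1:-/sys/devices/system/cpu/cpu0/cpuidle}
    local state
    for state in "$dir"/state*; do
        # Skip if the directory is missing or the glob matched nothing
        [ -r "$state/name" ] || continue
        printf '%s name=%s disabled=%s\n' \
            "$(basename "$state")" \
            "$(cat "$state/name")" \
            "$(cat "$state/disable" 2>/dev/null || echo '?')"
    done
}

list_cstates "$@"
```

If nothing is printed, the cpuidle directory is probably not present on that system, which would itself be worth noting.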

I also have not tried running VMs instead of LXC containers.

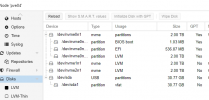

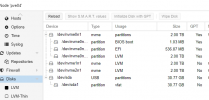

Disk info (all thin):

Any suggestions would be greatly appreciated. Thank you in advance.

Specs:

- CPU: 24 x AMD Ryzen 9 5900X 12-Core Processor (1 Socket)

- Kernel Version: Linux 6.2.16-6-pve #1 SMP PREEMPT_DYNAMIC PMX 6.2.16-7 (2023-08-01T11:23Z)

- PVE Manager Version: pve-manager/8.0.4/d258a813cfa6b390

- Motherboard: Gigabyte X570S AORUS Elite
