Hi, I'm trying to configure GPU passthrough on my PVE machine. I've tried a few ways already, but none seem to be working. This one (https://github.com/JamesTurland/JimsGarage/tree/main/GPU_passthrough) got me the furthest: the VM works on the PVE console and Windows (11) even recognizes the GPU, but doesn't output anything through it. I've done everything in the guides and checked it; everything should be working. The VM just doesn't want to output through the GPU for some reason (the monitor is connected to the DVI port on the GPU). I'm clueless what else it could be now. I've also tried installing drivers on the Windows VM, but those don't work either. I tried the GeForce Experience app and also the standalone drivers (yes, it's an NVIDIA GPU).

[SOLVED] Proxmox GPU Passthrough

- Thread starter TommySK

Same issue here. I did read a post on here the other day that a recent update broke GPU passthrough for some versions but I am having trouble relocating the exact post. The workaround was to pin PVE to the previous version.

My test is for an old PC game VM, so Windows 7 with Intel UHD 630. Every guide I try gets a graphics adapter in Windows, but it never appears as the correct one (just standard VGA). I am going to try a Windows 10 machine today to see what happens.

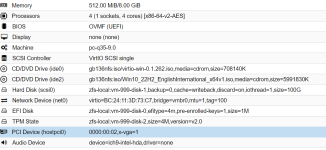

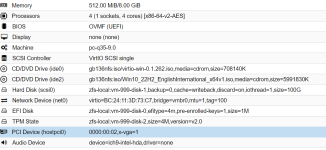

So I've got it working with Windows 10. I created a new VM with the standard VGA display so I could VNC the install, attached the GPU as a PCI device as below, installed Win 10 and set a static IP so I could RDP to it. Turned on RDP connections. It appeared as a basic adapter until I installed the GPU drivers, then it came alive. Windows was showing 2 displays, but VNC still worked right up until I told Windows to make the GPU the main display. Then VNC would no longer work, so I removed that display and rebooted the machine. RDP works fine and I can see the proper adapter. It still doesn't help me with Windows 7 though, as the same process isn't working :-(

Here's the machine setup

I should add that I had previously followed the guides to add the relevant lines to load the correct modules. As I understand it, not all of these items in GRUB are needed, but they don't hurt either.

This line in /etc/default/grub

Code:

GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pcie_acs_override=downstream,multifunction nofb nomodeset video=vesafb:off,video=efifb:off"

This line in /etc/modprobe.d/vfio.conf

Code:

options vfio-pci ids=8086:3e92 disable_vga=1

These lines in /etc/modprobe.d/blacklist.conf

Code:

blacklist radeon

blacklist nouveau

blacklist nvidia

blacklist nvidiafb

blacklist i915

/etc/modprobe.d/kvm.conf

Code:

options kvm ignore_msrs=1

/etc/modprobe.d/iommu_unsafe_interrupts.conf

Code:

options vfio_iommu_type1 allow_unsafe_interrupts=1
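For anyone following along: edits to /etc/default/grub only take effect after `update-grub` and a reboot, and hosts that boot via systemd-boot read /etc/kernel/cmdline instead. A quick way to confirm the flags actually reached the running kernel is to compare them against /proc/cmdline. The sketch below is only an illustration and not part of the original post; the function name `check_cmdline` and its optional second argument are made up for the example.

```shell
#!/bin/sh
# Report whether each expected kernel parameter is present on the running
# kernel's command line.
# $1: space-separated list of parameters to look for
# $2: file to read; defaults to /proc/cmdline (hypothetical extra argument,
#     only there so the function can be pointed at a test file)
check_cmdline() {
    wanted="$1"
    cmdline_file="${2:-/proc/cmdline}"
    missing=0
    for param in $wanted; do
        # Pad with spaces so "iommu=pt" does not match inside "intel_iommu=pt".
        case " $(cat "$cmdline_file") " in
            *" $param "*) echo "ok      $param" ;;
            *)            echo "MISSING $param"; missing=1 ;;
        esac
    done
    # Non-zero exit status if anything was missing.
    return "$missing"
}
```

Calling `check_cmdline "intel_iommu=on iommu=pt"` on the host prints one line per parameter. Anything reported as MISSING suggests the bootloader config was not regenerated or the host boots from a different config file.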

I just tried the same thing on Windows 11 and it didn't work. What I did:

1. Set up the VM.
2. Installed Win 11 on it.
3. Installed the NVIDIA drivers for the GPU.
4. Set the GPU connected to the VM as primary.
5. The Proxmox console (the VNC thing in the GUI) stopped working.
6. Attached a monitor to the GPU output.
7. Realized there's no output.

I tried rebooting the PVE host. The monitor displayed the boot screen (the one that says press Del to enter BIOS etc.), then just went black, because you have to blacklist the GPU to give it to a VM. I started the VM, but still no output. PVE says the VM was running for about 5 minutes, so it can't be that it didn't have enough time to boot. The VM also has the same settings as yours, so I have no idea what's causing the problem. By the way, in Device Manager on the Win 11 VM the GPU shows error 43 (didn't start correctly) even with the drivers installed. Did you perchance use drivers from virtio or somewhere else? I used the original NVIDIA drivers from their website. I'm going to try a Win 10 VM later too.

And also, yeah, I got the same lines of code in the machine as you posted, I didn't post it yesterday because I was already annoyed by this thing.

The only additional thing I have, which came to me this morning, is that an earlier fix I had tried from a post I found on this forum was still in place. That's this line in the crontab:

crontab -e

Code:

@reboot /root/fix_gpu_pass.sh

and in the script itself

Code:

echo 1 > /sys/bus/pci/devices/0000\:00\:02.0/remove

echo 1 > /sys/bus/pci/rescan

Just switch out the PCI number for the PCI number on your device. Also I am running the latest patch levels. GUI shows 8.3.0 as the version.
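Since the PCI number has to be swapped per machine, the same remove-and-rescan idea can be written with the address as a parameter. This is a sketch under assumptions, not the poster's actual script: the function name `reset_pci_device` and the optional sysfs-root argument are hypothetical and exist only to make the example reusable and testable.

```shell
#!/bin/sh
# Remove a PCI device from the bus and trigger a rescan, mirroring the two
# echo lines in the script above.
# $1: full PCI address including domain, e.g. 0000:00:02.0
#     (`lspci -D` prints addresses in this form)
# $2: sysfs mount point; defaults to /sys (hypothetical extra argument so the
#     function can be exercised against a scratch directory)
reset_pci_device() {
    addr="$1"
    sysfs="${2:-/sys}"
    dev="$sysfs/bus/pci/devices/$addr"

    # Refuse to continue if the address does not exist, instead of
    # creating a stray file under a mistyped path.
    if [ ! -e "$dev/remove" ]; then
        echo "no such PCI device: $addr" >&2
        return 1
    fi

    echo 1 > "$dev/remove"
    echo 1 > "$sysfs/bus/pci/rescan"
}
```

On a real host this needs root, and it would be called as `reset_pci_device 0000:00:02.0` from the script that the @reboot crontab entry runs.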

I just tried it again on an updated Proxmox 8.3-1, as I had an old version before. I installed it completely fresh from an official ISO, did everything in the guide plus the lines that you mentioned, and tried it with a Windows 10 VM since you said that worked for you, but still nothing. Windows 10 doesn't even recognise the GPU and only displays it in Device Manager as a PCI device. I'm clueless what else could be wrong. I'm running the newest version and did everything by the guides: IOMMU enabled, remapping enabled, and the device is in a separate IOMMU group. Basically everything that should be set up is working correctly, but it's still not working. I also just realised that Proxmox doesn't even detect my USB devices. Whenever I plug in a USB stick and run lsusb, nothing shows up, only the Proxmox USB controllers. I've also updated my BIOS, it's on UEFI boot and VT-d is enabled, so I think everything in the BIOS should be fine as well. If you have any ideas, please. Thanks
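The "separate IOMMU group" claim can be double-checked from sysfs, where each group is a numbered directory under /sys/kernel/iommu_groups containing one entry per device. The following is a minimal sketch of that listing; `list_iommu_groups` and its optional directory argument are hypothetical names chosen for the example. Note that with pcie_acs_override on the kernel command line the groups are split artificially, so a clean-looking listing does not prove real hardware isolation.

```shell
#!/bin/sh
# Print every PCI device together with the IOMMU group it belongs to.
# $1: groups directory; defaults to /sys/kernel/iommu_groups (hypothetical
#     extra argument so the function can be run against a scratch directory)
list_iommu_groups() {
    groups_dir="${1:-/sys/kernel/iommu_groups}"
    for dev in "$groups_dir"/*/devices/*; do
        # If the glob matched nothing, the IOMMU is probably not enabled.
        [ -e "$dev" ] || continue
        group="${dev%/devices/*}"   # strip "/devices/<address>"
        group="${group##*/}"        # keep only the group number
        echo "group $group: ${dev##*/}"
    done
}
```

A GPU and its HDMI audio function (for example 01:00.0 and 01:00.1) commonly land in the same group, which is fine as long as both are passed to the VM. Empty output usually means the IOMMU is off in the BIOS or missing from the kernel command line.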

Is yours integrated or PCI graphics? Mine's integrated, so you could try ticking PCI-Express, but if it's showing in the raw mapping list for the Proxmox host then it should pass through. If it's not in the raw mapping list then I have no idea.

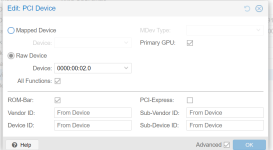

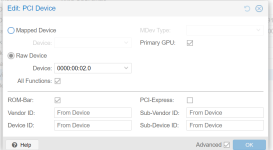

It's in the raw devices dropdown menu; I don't have it mapped or anything. And yeah, I've got an NVIDIA 1060 in PCIe slot 1 (or 0, whatever the first one is). I tried ticking Primary GPU, PCI-Express, ROM-Bar, basically everything in the add PCI device menu. Nothing helped. Primary GPU even disabled the noVNC console, but still didn't output anything.

I found the solution: I was installing incorrect drivers or something. I tried the original standalone NVIDIA drivers first, but with the virtio drivers the passthrough works and Windows recognised the GPU. Thanks

nice - worth marking the thread as resolved to help others find the potential solution as this seems to come up a lot

Hey everyone,

Trying to get some help with iGPU passthrough on my HP EliteDesk 800 G5 Mini, which has an i5-9500T (UHD 630 graphics).

I'm running Proxmox VE 8.4 and trying to pass the iGPU to a Windows 11 VM so it can output directly to my LG TV via a DisplayPort-to-HDMI cable connected to the rear port.

What I’ve done so far:

- Enabled VT-d and iGPU Multi-Monitor in BIOS

- Edited GRUB: intel_iommu=on iommu=pt video=efifb:off video=vesafb:off

- Blacklisted i915 in /etc/modprobe.d/blacklist-i915.conf

- Bound the iGPU (00:02.0) to vfio-pci

- Created the VM in Proxmox and passed through the iGPU (PCI device + primary GPU checked)

- Windows 11 boots, I can connect via AnyDesk, install Intel drivers just fine...

- Has anyone successfully passed through UHD 630 on an i5-9500T to a Win11 VM?

- Do I need a ROM file or some trick like i915.force_probe instead of blacklisting?

- Is my DisplayPort-to-HDMI adapter a potential issue here?

- Is GVT-g worth trying in this case, or full passthrough preferred?
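The "blacklisted i915" and "bound to vfio-pci" steps in the list above can be verified from sysfs, where each PCI device directory has a `driver` symlink naming the kernel driver currently attached. A minimal sketch, assuming the iGPU sits at 0000:00:02.0 as stated in the post; `pci_driver` and its optional second argument are hypothetical names used only for this example.

```shell
#!/bin/sh
# Print the kernel driver currently bound to a PCI device, or "none".
# $1: full PCI address including domain, e.g. 0000:00:02.0
# $2: sysfs mount point; defaults to /sys (hypothetical extra argument so the
#     function can be run against a scratch directory)
pci_driver() {
    addr="$1"
    sysfs="${2:-/sys}"
    link="$sysfs/bus/pci/devices/$addr/driver"
    if [ -L "$link" ]; then
        # The symlink target ends in the driver name, e.g. .../drivers/vfio-pci
        basename "$(readlink "$link")"
    else
        echo "none"
    fi
}
```

On the host, `pci_driver 0000:00:02.0` printing vfio-pci would indicate the binding took effect. If it prints i915 instead, the blacklist likely did not reach the initramfs, which on Proxmox is normally rebuilt with `update-initramfs -u -k all` followed by a reboot.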

As per my post, I got the GPU to work with a Windows 11 box; I didn't try output to another device though.

Interestingly though when I upgraded to 8.4 it stopped working even though my config was still in place.

I still can't resolve the issue so just gave up and bought another mini pc to do that work.

What specs is the mini PC?

Pretty much similar to yours. An older Intel NUC with Intel graphics, 16 GB RAM and NVMe storage, just with Windows installed directly as the OS. Picked it up second hand for a couple of hundred bucks.