Hello,

I am using Proxmox VE v7.2.3 and I want to passthrough a PCIe RAID Controller Card (LSI MegaRAID SATA-SAS 9260-8i is the card in question, to be exact) with two 12 GB Ironwolf NAS drives.

When I tried to passthrough this card to my TrueNAS Core VM, the whole Proxmox froze and went offline and I had to restart the whole server.

I initally couldn't even boot the Proxmox node since that TrueNAS VM was set to autoboot, but I managed to fix it by switching IOMMU off in Proxmox recovery mode.

When everything was back up and running, I ran this command:

and I got this message:

I also ran this command:

and I got this message:

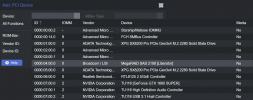

When I try to pass it through to that same VM, I get a screen like this:

I am new to Proxmox, but my guess this means that this card has its dedicated IOMMU group.

I saw in other forum topic that one person solved it by placing the HBA in another PCIe slot, but I don't have any empty slots left on my motherboard.

Is there any way to solve this problem? Also, if I get a different card from another manufacturer, could I face the same issue?

I am using Proxmox VE v7.2.3 and I want to pass through a PCIe RAID controller card (the card in question is an LSI MegaRAID SATA-SAS 9260-8i, to be exact) with two 12 TB IronWolf NAS drives attached.

When I tried to pass this card through to my TrueNAS Core VM, the whole Proxmox host froze and went offline, and I had to restart the server.

I initially couldn't even boot the Proxmox node because the TrueNAS VM was set to start at boot, but I managed to fix that by turning IOMMU off in Proxmox recovery mode.

When everything was back up and running, I ran this command:

```
dmesg | grep 'remapping'
```

and I got this message:

```
[    0.880005] AMD-Vi: Interrupt remapping enabled
```

I also ran this command:

Code:

find /sys/kernel/iommu_groups/ -type land I got this message:

/sys/kernel/iommu_groups/7/devices/0000:00:08.0

/sys/kernel/iommu_groups/5/devices/0000:00:07.0

/sys/kernel/iommu_groups/13/devices/0000:09:00.1

/sys/kernel/iommu_groups/3/devices/0000:00:04.0

/sys/kernel/iommu_groups/11/devices/0000:08:00.0

/sys/kernel/iommu_groups/1/devices/0000:00:02.0

/sys/kernel/iommu_groups/8/devices/0000:00:08.1

/sys/kernel/iommu_groups/6/devices/0000:00:07.1

/sys/kernel/iommu_groups/14/devices/0000:09:00.3

/sys/kernel/iommu_groups/4/devices/0000:00:05.0

/sys/kernel/iommu_groups/12/devices/0000:09:00.0

/sys/kernel/iommu_groups/2/devices/0000:00:03.1

/sys/kernel/iommu_groups/2/devices/0000:07:00.2

/sys/kernel/iommu_groups/2/devices/0000:07:00.0

/sys/kernel/iommu_groups/2/devices/0000:00:03.0

/sys/kernel/iommu_groups/2/devices/0000:07:00.3

/sys/kernel/iommu_groups/2/devices/0000:07:00.1

/sys/kernel/iommu_groups/10/devices/0000:00:18.3

/sys/kernel/iommu_groups/10/devices/0000:00:18.1

/sys/kernel/iommu_groups/10/devices/0000:00:18.6

/sys/kernel/iommu_groups/10/devices/0000:00:18.4

/sys/kernel/iommu_groups/10/devices/0000:00:18.2

/sys/kernel/iommu_groups/10/devices/0000:00:18.0

/sys/kernel/iommu_groups/10/devices/0000:00:18.7

/sys/kernel/iommu_groups/10/devices/0000:00:18.5

/sys/kernel/iommu_groups/0/devices/0000:03:00.0

/sys/kernel/iommu_groups/0/devices/0000:02:00.2

/sys/kernel/iommu_groups/0/devices/0000:00:01.2

/sys/kernel/iommu_groups/0/devices/0000:02:00.0

/sys/kernel/iommu_groups/0/devices/0000:00:01.0

/sys/kernel/iommu_groups/0/devices/0000:01:00.0

/sys/kernel/iommu_groups/0/devices/0000:06:00.0

/sys/kernel/iommu_groups/0/devices/0000:03:08.0

/sys/kernel/iommu_groups/0/devices/0000:02:00.1

/sys/kernel/iommu_groups/0/devices/0000:00:01.1

/sys/kernel/iommu_groups/0/devices/0000:05:00.0

/sys/kernel/iommu_groups/0/devices/0000:03:04.0

/sys/kernel/iommu_groups/0/devices/0000:04:00.0

/sys/kernel/iommu_groups/9/devices/0000:00:14.3

/sys/kernel/iommu_groups/9/devices/0000:00:14.0When I try to pass it through to that same VM, I get a screen like this:

I am new to Proxmox, but my guess is that this means the card has its own dedicated IOMMU group.

I saw in another forum thread that someone solved this by moving the HBA to a different PCIe slot, but I don't have any free slots left on my motherboard.
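The flat `find` output above is hard to read, because devices from the same group are scattered across the list. Here is a minimal bash sketch, assuming it runs on the Proxmox host with sysfs mounted at `/sys`, that prints one line per device (IOMMU group number, PCI address and, if `lspci` is installed, the device description) sorted by group. The function name `list_iommu_groups` and its optional root-directory argument are hypothetical, added only for illustration.

```bash
#!/usr/bin/env bash
# Sketch: list PCI devices grouped by IOMMU group, one device per line:
#   <group> <TAB> <PCI address> <TAB> <lspci description, if lspci is available>
# Assumption: run on the Proxmox host with sysfs mounted at /sys.

list_iommu_groups() {
  # Optional first argument: alternative root directory (hypothetical, for testing).
  local root="${1:-/sys/kernel/iommu_groups}"
  local gdir gnum dev addr desc

  for gdir in "$root"/*; do
    # Each group directory is expected to contain a devices/ subdirectory.
    [ -d "$gdir/devices" ] || continue
    gnum="${gdir##*/}"

    for dev in "$gdir"/devices/*; do
      # Skip the unexpanded glob pattern when devices/ is empty.
      [ -e "$dev" ] || [ -L "$dev" ] || continue
      addr="${dev##*/}"

      desc=""
      if command -v lspci >/dev/null 2>&1; then
        # -s selects a single device by address; -nn adds [vendor:device] IDs.
        desc="$(lspci -nns "$addr" 2>/dev/null)"
      fi

      printf '%s\t%s\t%s\n' "$gnum" "$addr" "$desc"
    done
  done | sort -t $'\t' -k1,1n -k2,2   # numeric by group, then by address
}

# When executed directly (not sourced), list the real groups on this host.
if [[ "${BASH_SOURCE[0]}" == "$0" ]]; then
  list_iommu_groups "$@"
fi
```

Piping the result through `grep -i megaraid` should show which group number the card landed in. As a general rule, every other device listed with that same group number would have to be handed to the VM together with it (PCI bridges aside), which is worth checking before retrying the passthrough.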

Is there any way to solve this problem? Also, if I get a different card from another manufacturer, could I face the same issue?