Hello,

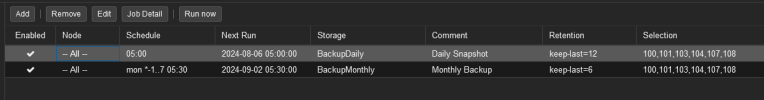

I have two backup jobs for my container.

1. Daily Snapshot with "Keep last 12"

2. Monthly Snapshot (Stop Mode) with "Keep last 6"

My plan was to keep the daily snapshots for the last 12 days and the monthly snapshots for the last 6 months.

But after the monthly job ran a few hours ago, Proxmox pruned ALL backups, not just the monthly ones, until only 6 were left. Is this an error?

That makes no sense to me. I want the retention for backup job 1 and backup job 2 to be handled separately. What is the point of offering the option for each backup job individually if it always affects the backups of all jobs anyway?

Maybe I don't understand something. I hope someone can help. Thank you very much!
