Hello community

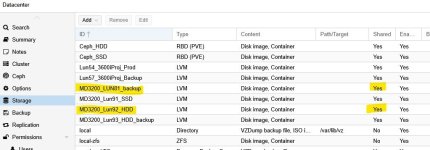

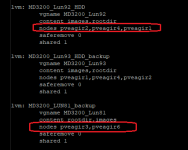

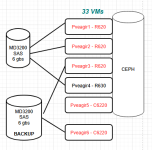

I have a cluster with several nodes and different storages.

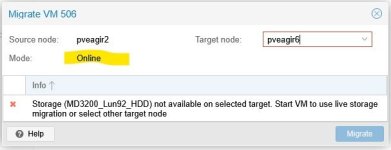

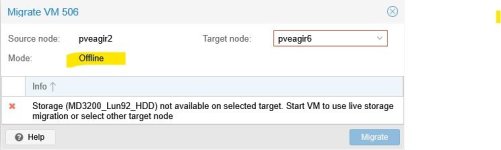

I want to migrate a VM (preferably online, otherwise offline) from one node to another, but there is no shared storage between the two nodes.

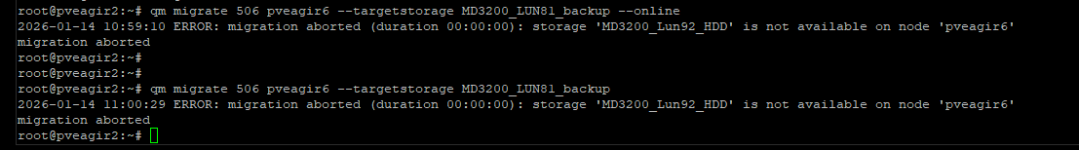

I think it should be possible, at least when the VM is shut down, but I get an error.

So my question is: how can I migrate a VM without shared storage?

The storage consists of Dell MD 3200 SAS LUNs mounted as LVM.

Best regards all

GE
