Hello all,

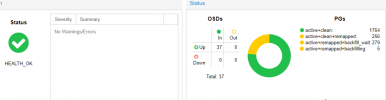

We have a cluster of 9 servers with hdd ceph pool.

We have Recently purchased SSD disks for our SSD pool

Now the situation is

That when we need to create a new crush rule for ssd:

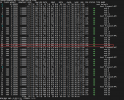

But getting the following error:

But when we are creating an osd with our ssd disk only then... when running the "ceph osd crash" command

The command was successful.

And only then we can create our SSD-POOL and attach it to the new crush rule

yes we have created an ssd class before all of it

And in meanwhile it starting to rebalance the new SSD OSD disk with the already existing HDD OSD's data

The main issue that we are failing with creating a new completely separate SSD pool.

How can we be really sure that we are having a full segregation between SSD Disks OSD's and HDD Disks OSD's ????

We have a cluster of 9 servers with an HDD Ceph pool.

We recently purchased SSDs for a new SSD pool.

To use them, we need to create a new CRUSH rule for the SSDs:

`ceph osd crush rule create-replicated replicated-ssd default host ssd`

But we get the following error:

`Error ENOENT: root item default-ssd does not exist`

However, once we create an OSD on one of the SSDs and run the same `ceph osd crush` command again, it succeeds.
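Because the rule only worked once an SSD OSD existed, it may help to confirm which OSDs actually carry the `ssd` device class before creating the rule. A minimal sketch, assuming the JSON shape of `ceph osd tree -f json` (a `nodes` list whose OSD entries have `type`, `name` and `device_class` fields); `osd_tree` and `osds_by_class` are hypothetical helpers, not part of Ceph.

```python
import json
import subprocess
from collections import defaultdict


def osd_tree() -> dict:
    """Run `ceph osd tree -f json` and return the parsed output (needs a reachable cluster)."""
    out = subprocess.run(
        ["ceph", "osd", "tree", "-f", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    return json.loads(out)


def osds_by_class(tree: dict) -> dict:
    """Group OSD names by device class; OSDs without a class are listed under 'none'."""
    groups = defaultdict(list)
    for node in tree.get("nodes", []):
        if node.get("type") == "osd":
            groups[node.get("device_class", "none")].append(node["name"])
    return {cls: sorted(names) for cls, names in groups.items()}
```

If `osds_by_class(osd_tree()).get("ssd", [])` comes back empty, no OSD has been tagged `ssd` yet, which matches the situation in which the rule creation failed.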

Only then can we create our SSD-POOL and assign the new CRUSH rule to it.
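For reference, a pool can be created with the SSD rule directly, or an existing pool can be switched to it afterwards. This sketch only builds the argument lists for `ceph osd pool create <pool> <pg_num> <pgp_num> replicated <rule>` and `ceph osd pool set <pool> crush_rule <rule>`; the PG count of 128 is an illustrative placeholder, not a recommendation, and the helper names are hypothetical.

```python
import subprocess


def create_pool_cmd(pool: str, rule: str, pg_num: int = 128) -> list:
    """Argument list for creating a replicated pool bound to `rule` from the start.

    pg_num=128 is an assumed placeholder; size it for your own cluster.
    """
    return ["ceph", "osd", "pool", "create", pool,
            str(pg_num), str(pg_num), "replicated", rule]


def set_rule_cmd(pool: str, rule: str) -> list:
    """Argument list for pointing an existing pool at a different CRUSH rule."""
    return ["ceph", "osd", "pool", "set", pool, "crush_rule", rule]


def run(cmd: list) -> None:
    """Execute a ceph command, raising CalledProcessError if it fails."""
    subprocess.run(cmd, check=True)
```

For example, `run(create_pool_cmd("SSD-POOL", "replicated-ssd"))` would create the pool already bound to the SSD rule.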

And yes, we had created the `ssd` device class before doing any of this.

Meanwhile, the cluster starts rebalancing data from the existing HDD OSDs onto the new SSD OSD.
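The rebalancing suggests the existing HDD pool uses a rule with no device class. The stock `replicated_rule` takes the whole `default` root, so CRUSH may place its PGs on any OSD under it, including a new SSD. A sketch that flags such rules from the parsed output of `ceph osd crush rule dump -f json`, assuming a class-restricted rule's `take` step names a shadow bucket with a `~class` suffix (for example `default~ssd`); check this against your own dump.

```python
def unrestricted_rules(rules: list) -> list:
    """Return names of rules with a 'take' step that is not limited to a device class.

    Assumes class-restricted rules take a shadow bucket named '<root>~<class>'.
    """
    flagged = []
    for rule in rules:
        takes = [s for s in rule.get("steps", []) if s.get("op") == "take"]
        if any("~" not in s.get("item_name", "") for s in takes):
            flagged.append(rule["rule_name"])
    return flagged
```

If the HDD pool's rule is flagged, the usual remedy is an HDD-only rule, e.g. `ceph osd crush rule create-replicated replicated-hdd default host hdd` (the name `replicated-hdd` is hypothetical), assigned with `ceph osd pool set <pool> crush_rule replicated-hdd`. Expect some data movement when a pool's rule changes.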

The main issue is that we cannot create a new, completely separate SSD pool.

How can we be really sure that SSD OSDs and HDD OSDs are fully segregated?
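One way to check segregation is to map every pool to the device class its rule takes from. A sketch combining the parsed output of `ceph osd pool ls detail -f json` (assumed to give `pool_name` and a numeric `crush_rule` per pool) with `ceph osd crush rule dump -f json` (assumed `rule_id`, `rule_name`, `steps`), again assuming the `<root>~<class>` shadow-bucket naming. A pool reported as `any` can land on both HDD and SSD OSDs; `pool_classes` is a hypothetical helper.

```python
def pool_classes(pools: list, rules: list) -> dict:
    """Map each pool name to the device class(es) its CRUSH rule draws from.

    'any' means a take step has no class suffix, so every OSD under that root
    (HDD and SSD alike) is eligible for the pool's data.
    """
    by_id = {r["rule_id"]: r for r in rules}
    result = {}
    for pool in pools:
        rule = by_id.get(pool["crush_rule"])
        if rule is None:
            result[pool["pool_name"]] = "unknown rule"
            continue
        classes = set()
        for step in rule.get("steps", []):
            if step.get("op") == "take":
                name = step.get("item_name", "")
                classes.add(name.split("~", 1)[1] if "~" in name else "any")
        result[pool["pool_name"]] = ",".join(sorted(classes)) or "any"
    return result
```

Full segregation holds when every HDD pool reports `hdd` and every SSD pool reports `ssd`; `ceph osd df tree` can then confirm where the data actually sits.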