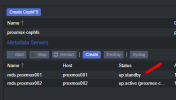

Currently, I have a two-node Proxmox cluster with a Raspberry Pi as the quorum device (the Pi also runs OpenMediaVault).

I created an HA group and added the CT to it. To test HA, I shut down one node. The CT was migrated to the other node, but it failed to start.

Action Taken

I removed the CT from the HA configuration and tried to start it, but got:

>command 'ha-manager migrate ct:101 proxmox002' failed: exit code 255

and:

>no such logical volume pve/vm/101-disk-0

I am fairly sure this is because the local-lvm disk cannot be replicated across the nodes.
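For anyone reproducing this: a container's disks are listed in its config (on Proxmox VE, `/etc/pve/lxc/<vmid>.conf`) under the `rootfs` and `mp0`, `mp1`, … keys, as `<storage>:<volume>` volume IDs. The sketch below is a minimal, hypothetical helper (not part of any Proxmox tool) that pulls those volume IDs out of config text so you can see which storage each disk sits on; the example config and the `container_volumes`/`storage_id` names are made up for illustration. If the disks are on `local-lvm`, which is node-local LVM-thin storage, the volume only exists on the node where it was created, which would be consistent with the "no such logical volume" error after failover.

```python
import re

# Keys in an LXC config that can reference storage volumes:
# "rootfs" plus the mount points "mp0", "mp1", ...
VOLUME_KEY = re.compile(r"^(rootfs|mp\d+)$")


def container_volumes(config_text: str) -> dict[str, str]:
    """Map each rootfs/mpN key to its volume ID, e.g. 'local-lvm:vm-101-disk-0'."""
    volumes = {}
    for line in config_text.splitlines():
        line = line.strip()
        if line.startswith("["):
            break  # snapshot sections follow; only the current config matters
        if not line or line.startswith("#") or ":" not in line:
            continue  # blank line, description comment, or malformed line
        key, value = line.split(":", 1)
        key = key.strip()
        if not VOLUME_KEY.match(key):
            continue
        source = value.strip().split(",", 1)[0]  # drop options like size=8G
        if source.startswith("/"):
            continue  # bind mount of a host path, not a storage volume
        volumes[key] = source
    return volumes


def storage_id(volume_id: str) -> str:
    """'local-lvm:vm-101-disk-0' -> 'local-lvm'."""
    return volume_id.split(":", 1)[0]


# Hypothetical example config for CT 101.
example = """\
arch: amd64
hostname: ct101
rootfs: local-lvm:vm-101-disk-0,size=8G
mp0: local-lvm:vm-101-disk-1,mp=/data,size=32G
mp1: /srv/share,mp=/share
"""

vols = container_volumes(example)
print(vols)
print({storage_id(v) for v in vols.values()})
```

Skipping host-path bind mounts keeps the output limited to volumes that actually live on a Proxmox storage, which are the ones that matter for failover.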

Question

Is there any way to set up replication for local-lvm?

I also tried to create a replication job, and it shows the following error:

>missing replicate feature on volume 'local-lvm:vm-100-disk-0' (500)
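The replication error is consistent with how Proxmox VE storage replication works: according to the Proxmox VE documentation it is only supported on local ZFS storage (type `zfspool`), while the default `local-lvm` storage is of type `lvmthin`. As a rough sketch, assuming the usual `/etc/pve/storage.cfg` layout (an unindented `type: id` line followed by indented properties), the following checks whether the storage behind a volume ID supports replication. The `local-zfs` entry in the sample config and the `parse_storage_cfg`/`can_replicate` names are hypothetical.

```python
# Storage types that Proxmox VE storage replication supports.
# Per the Proxmox VE docs this is only local ZFS ("zfspool"); the default
# "local-lvm" storage uses type "lvmthin", which is not supported.
REPLICATION_TYPES = {"zfspool"}


def parse_storage_cfg(text: str) -> dict[str, str]:
    """Return {storage_id: storage_type} from storage.cfg-style text."""
    storages = {}
    for line in text.splitlines():
        # Skip blank lines, comments, and indented property lines.
        if not line.strip() or line.startswith((" ", "\t", "#")):
            continue
        if ":" not in line:
            continue
        stype, sid = line.split(":", 1)
        storages[sid.strip()] = stype.strip()
    return storages


def can_replicate(volume_id: str, storages: dict[str, str]) -> bool:
    """True if the storage part of a volume ID (e.g. 'local-lvm:...') is ZFS."""
    sid = volume_id.split(":", 1)[0]
    return storages.get(sid) in REPLICATION_TYPES


# Hypothetical storage.cfg with an extra ZFS storage for comparison.
sample_cfg = """\
dir: local
\tpath /var/lib/vz
\tcontent iso,vztmpl,backup

lvmthin: local-lvm
\tthinpool data
\tvgname pve
\tcontent rootdir,images

zfspool: local-zfs
\tpool rpool/data
\tcontent rootdir,images
"""

storages = parse_storage_cfg(sample_cfg)
print(storages)
print(can_replicate("local-lvm:vm-100-disk-0", storages))      # False
print(can_replicate("local-zfs:subvol-100-disk-0", storages))  # True
```

Unknown storage IDs fall through to `False`, so a volume is only reported as replicable when its storage is explicitly a `zfspool`.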

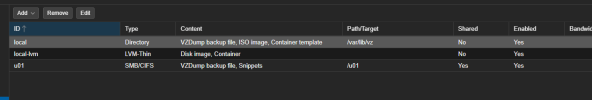

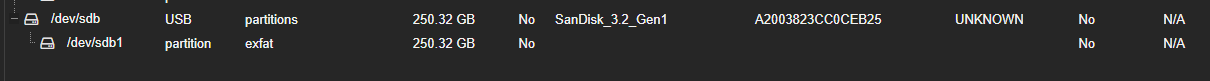

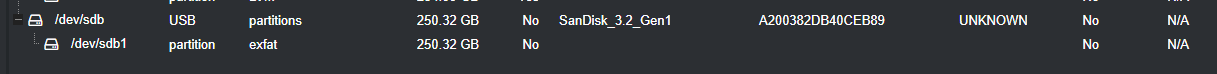

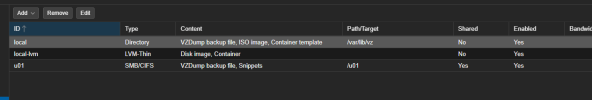

Current configuration:
