Hey guys,

We are doing PCI passthrough of an RTX 4060 Ti on a B650D4U-2L2T/BCM mainboard with an AMD Ryzen 9 7900X CPU.

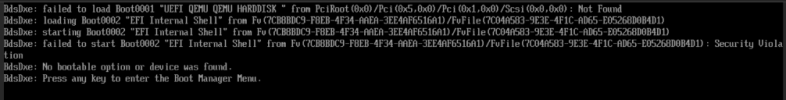


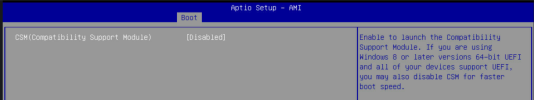

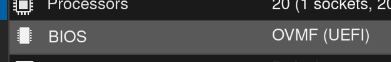

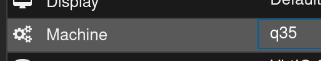

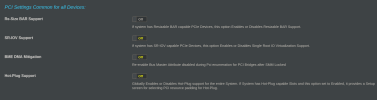

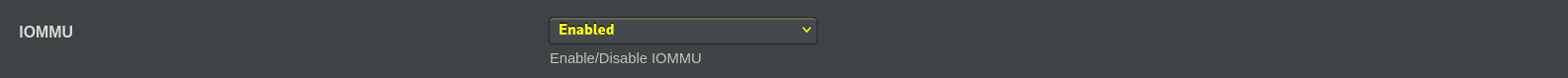

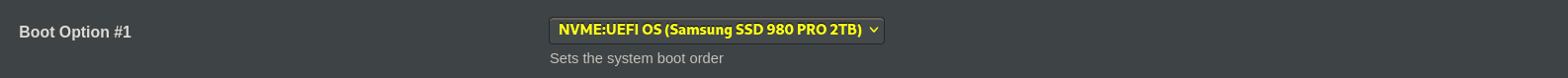

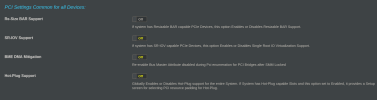

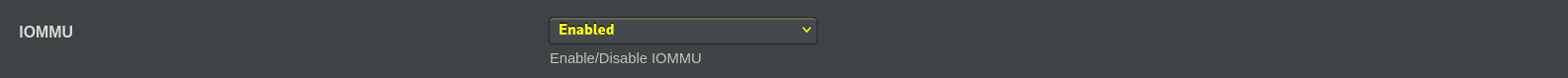

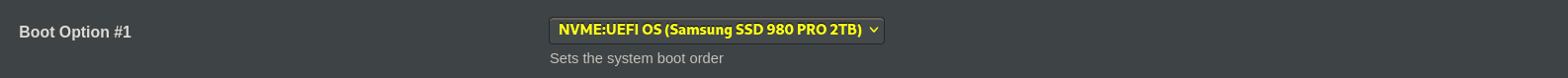



I got the passthrough working once yesterday, after a CMOS reset followed by reapplying the BIOS options listed below (I verified it with nvidia-smi inside the VM). After powering off the server and putting it back into the rack, it no longer works. Every time I start the VM, it shows this error (I rebooted about 5 times to check whether it occurs every time):

Code:

kvm: vfio: Unable to power on device, stuck in D3

kvm: vfio: Unable to power on device, stuck in D3

TASK ERROR: start failed: QEMU exited with code -1

I am trying to figure out what causes this, because I did not change anything between yesterday's working state and the server being placed in the rack. The only things I changed when racking it were the power cable and the LAN cables.

Additionally, I do not know what to troubleshoot next. We already replaced the mainboard and the GPU a couple of weeks ago. Maybe someone has a good idea what to test next?

lspci -nnk

01:00.0 VGA compatible controller [0300]: NVIDIA Corporation AD106 [GeForce RTX 4060 Ti] [10de:2803] (rev a1)

Subsystem: Micro-Star International Co., Ltd. [MSI] AD106 [GeForce RTX 4060 Ti] [1462:5174]

Kernel driver in use: vfio-pci

Kernel modules: nvidiafb, nouveau

01:00.1 Audio device [0403]: NVIDIA Corporation AD106M High Definition Audio Controller [10de:22bd] (rev a1)

Subsystem: Micro-Star International Co., Ltd. [MSI] AD106M High Definition Audio Controller [1462:5174]

Kernel driver in use: vfio-pci

Kernel modules: snd_hda_intel

root@proxmox9:~# ls -l /sys/kernel/iommu_groups/12/devices/

total 0

lrwxrwxrwx 1 root root 0 Feb 27 09:44 0000:01:00.0 -> ../../../../devices/pci0000:00/0000:00:01.1/0000:01:00.0

lrwxrwxrwx 1 root root 0 Feb 27 09:44 0000:01:00.1 -> ../../../../devices/pci0000:00/0000:00:01.1/0000:01:00.1
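In case someone wants to compare against the other groups and not only group 12: a minimal sketch that walks the same sysfs directory and prints one line per device. The function name `list_iommu_groups` and the optional root argument are my own (hypothetical) additions; the argument only exists so the loop can be tried against a copy of the tree.

```shell
#!/bin/sh
# Print "group <n>: <pci address>" for every device in every IOMMU group.
# $1 is an optional sysfs root (assumption for dry runs); defaults to /sys.
list_iommu_groups() {
    root="${1:-/sys}"
    for dev in "$root"/kernel/iommu_groups/*/devices/*; do
        # Skip the literal pattern when the glob matched nothing.
        [ -e "$dev" ] || [ -L "$dev" ] || continue
        group="${dev%/devices/*}"   # .../iommu_groups/12
        group="${group##*/}"        # 12
        printf 'group %s: %s\n' "$group" "${dev##*/}"
    done
}

list_iommu_groups "$@"
```

On this host the only entries for group 12 should be the two functions listed above, which would mean the GPU and its audio function are isolated from other devices.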

root@proxmox9:~# cat /etc/modprobe.d/vfio.conf

options vfio-pci ids=10de:2803,10de:22bd disable_vga=1 disable_idle_d3=1
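To rule out that these options are simply not being applied, the values the loaded module actually uses can be read back from sysfs. This is only a sketch: the helper name `show_vfio_params` and its optional root argument are hypothetical, and I am assuming the two boolean parameters are exposed under `/sys/module/vfio_pci/parameters/` on this kernel.

```shell
#!/bin/sh
# Print the live values of two vfio-pci module parameters.
# $1 is an optional sysfs root (assumption for dry runs); defaults to /sys.
show_vfio_params() {
    root="${1:-/sys}"
    for p in disable_idle_d3 disable_vga; do
        f="$root/module/vfio_pci/parameters/$p"
        if [ -r "$f" ]; then
            printf '%s=%s\n' "$p" "$(cat "$f")"
        else
            # Module not loaded, or the parameter is not exported.
            printf '%s=<not readable>\n' "$p"
        fi
    done
}

show_vfio_params "$@"
```

If a value does not match the file above, the initramfs may still contain an older copy of the modprobe configuration.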

VM > Hardware > PCI Device:

root@proxmox9:~# cat /etc/default/grub

[...]

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on iommu=pt pcie_aspm=off vfio-pci.disable_idle_d3=1 pci=realloc,reset pcie_port_pm=off"

[...]

root@proxmox9:~# dmesg | grep vfio

[ 0.000000] Command line: BOOT_IMAGE=/boot/vmlinuz-6.8.12-8-pve root=/dev/mapper/pve-root ro quiet amd_iommu=on iommu=pt pcie_aspm=off vfio-pci.disable_idle_d3=1 pci=realloc,reset pcie_port_pm=off

[ 0.049791] Kernel command line: BOOT_IMAGE=/boot/vmlinuz-6.8.12-8-pve root=/dev/mapper/pve-root ro quiet amd_iommu=on iommu=pt pcie_aspm=off vfio-pci.disable_idle_d3=1 pci=realloc,reset pcie_port_pm=off

[ 3.326677] vfio-pci 0000:01:00.0: vgaarb: VGA decodes changed: olddecodes=io+mem,decodes=none

[ 3.326760] vfio_pci: add [10de:2803[ffffffff:ffffffff]] class 0x000000/00000000

[ 3.326819] vfio_pci: add [10de:22bd[ffffffff:ffffffff]] class 0x000000/00000000

[ 16.847494] vfio-pci 0000:01:00.1: enabling device (0000 -> 0002)

[ 20.033227] vfio-pci 0000:01:00.1: vfio_bar_restore: reset recovery - restoring BARs

[ 20.037272] vfio-pci 0000:01:00.0: vfio_bar_restore: reset recovery - restoring BARs

[ 20.758299] vfio-pci 0000:01:00.0: timed out waiting for pending transaction; performing function level reset anyway

[ 21.950364] vfio-pci 0000:01:00.0: not ready 1023ms after FLR; waiting

[ 23.038217] vfio-pci 0000:01:00.0: not ready 2047ms after FLR; waiting

[ 25.150502] vfio-pci 0000:01:00.0: not ready 4095ms after FLR; waiting

[ 29.502524] vfio-pci 0000:01:00.0: not ready 8191ms after FLR; waiting

[ 38.206361] vfio-pci 0000:01:00.0: not ready 16383ms after FLR; waiting

[ 55.102743] vfio-pci 0000:01:00.0: not ready 32767ms after FLR; waiting

[ 91.454980] vfio-pci 0000:01:00.0: not ready 65535ms after FLR; giving up

[ 91.553262] vfio-pci 0000:01:00.0: vfio_bar_restore: reset recovery - restoring BARs

[ 91.553648] vfio-pci 0000:01:00.1: vfio_bar_restore: reset recovery - restoring BARs

[ 100.580322] vfio-pci 0000:01:00.0: vfio_bar_restore: reset recovery - restoring BARs

[ 100.580357] vfio-pci 0000:01:00.0: vfio_bar_restore: reset recovery - restoring BARs

[ 1149.620773] vfio-pci 0000:01:00.0: timed out waiting for pending transaction; performing function level reset anyway

[ 1150.796899] vfio-pci 0000:01:00.0: not ready 1023ms after FLR; waiting

[ 1151.884942] vfio-pci 0000:01:00.0: not ready 2047ms after FLR; waiting

[ 1153.996774] vfio-pci 0000:01:00.0: not ready 4095ms after FLR; waiting

[ 1158.476981] vfio-pci 0000:01:00.0: not ready 8191ms after FLR; waiting

[ 1167.181084] vfio-pci 0000:01:00.0: not ready 16383ms after FLR; waiting

[ 1184.077151] vfio-pci 0000:01:00.0: not ready 32767ms after FLR; waiting

[ 1217.869729] vfio-pci 0000:01:00.0: not ready 65535ms after FLR; giving up
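The log above contains two complete FLR timeout sequences for 0000:01:00.0. To count them without reading the whole log each time, something like the following could be used. It is a rough sketch that only matches the exact "after FLR; giving up" wording shown above, and the function name `flr_giveups` is made up for this post.

```shell
#!/bin/sh
# Read kernel log text on stdin and print "<pci address> <count>" for every
# device whose function level reset ended with "giving up".
flr_giveups() {
    awk '/after FLR; giving up/ {
             dev = $0
             sub(/^.*vfio-pci /, "", dev)   # drop timestamp and driver name
             sub(/: .*$/, "", dev)          # drop the message after the address
             count[dev]++
         }
         END { for (d in count) printf "%s %d\n", d, count[d] }' | sort
}
```

Usage would be `dmesg | flr_giveups`. A count that grows by one on every VM start attempt would suggest the card never comes back after the reset.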

root@proxmox9:~# dmesg | grep -e DMAR -e IOMMU

[ 0.442148] pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported

[ 0.444095] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

BIOS Settings:

| BIOS Firmware Version | 20.07 (latest) |

root@proxmox9:~# apt update

Hit:1 http://security.debian.org bookworm-security InRelease

Hit:2 http://ftp.de.debian.org/debian bookworm InRelease

Hit:3 http://ftp.de.debian.org/debian bookworm-updates InRelease

Hit:4 https://enterprise.proxmox.com/debian/pve bookworm InRelease

Reading package lists... Done

Building dependency tree... Done

Reading state information... Done

All packages are up to date.

root@proxmox9:~# uname -a

Linux proxmox9 6.8.12-8-pve #1 SMP PREEMPT_DYNAMIC PMX 6.8.12-8 (2025-01-24T12:32Z) x86_64 GNU/Linux
