Yes, I reconfigured the VM as q35 with vIOMMU = Intel. It didn't even boot up, and the console showed the error "guest has not initialized the display (yet)".

[TUTORIAL] PCI/GPU Passthrough on Proxmox VE 8 : Installation and configuration

- Thread starter asded



It gives no error, that's the strange part. When I open the console I just get the message "guest has not initialized the display (yet)".

When I try to log into Home Assistant, I just get the error "Connection lost. Reconnecting…".

So, it did boot up then? What is its status after a while: running or stopped?

If it's running and you can't get access to the console, it is probably the guest OS redirecting display output to the passed-through PCI video card. Network interface names also change after changing the VM machine type, because the kernel assigns different NIC names, so your network configuration could be broken as a result. If you had installed the QEMU Guest Agent inside the VM and enabled it in the options beforehand, you could see whether the VM got another IP address.
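To illustrate the NIC renaming point, here is a minimal sketch that lists the interface names the guest kernel currently exposes, so they can be compared with the name used in the guest's network configuration. The function name `list_nics` is made up for this example, and it assumes you can get a shell in the guest at all (for instance via a passed-through keyboard and monitor).

```shell
# Print the names of all network interfaces except loopback.
# $1 = sysfs directory to scan (defaults to the real location).
list_nics() {
    for path in "${1:-/sys/class/net}"/*; do
        [ -e "$path" ] || continue      # empty directory: glob stays unexpanded
        name="${path##*/}"              # keep only the interface name
        [ "$name" = "lo" ] || echo "$name"
    done
}
```

Running `list_nics` before and after switching the machine type should show whether the name changed. If it did, the old name in the guest's network configuration has to be updated to match.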

I would first set the bare minimum:

vIOMMU=Default (none), Display=Standard VGA, PCI Passthrough with All Functions enabled and Primary GPU disabled, and then check whether the console is visible at least at boot time, before the OS takes over. You have to be fast: the SeaBIOS screen is only shown for a few seconds.

Then I would try connecting an external monitor to the video card you are passing through. If output shows up there, I would connect and pass through a USB keyboard, so that the OS inside can be configured.

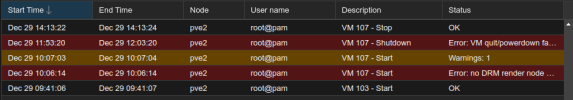

If its status is stopped, it would mean the VM started successfully and then stopped for some reason. I would check the System Log of the Proxmox machine to see if there is anything helpful.

After fiddling around with my AMD 6650XT GPU, I got it working rather well. But I wanted more, so I bought an Nvidia 4070 and swapped it in. The network card stopped working. OMFG. I got that fixed. Now the passthrough of the 4070 does not work, I assume. I can see the card using lspci in the guest, and the NVIDIA drivers are installed.

nvidia-smi

NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.

Now what? I added the GPU as a raw device and also tried mapping it; both give the same result.

The guest:

agent: 1

balloon: 2048

bios: ovmf

boot: order=scsi0;ide2;net0

cores: 6

cpu: host

efidisk0: LVM-Thin-Local:vm-104-disk-0,efitype=4m,pre-enrolled-keys=1,size=4M

hostpci0: mapping=RTX4070,pcie=1

ide2: local:iso/ubuntu-24.04.1-live-server-amd64.iso,media=cdrom,size=2708862K

machine: q35

memory: 24576

meta: creation-qemu=9.0.2,ctime=1734616326

name: ollama

net0: virtio=BC:24:11:51:CE:EA,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

parent: working_before_rocm

scsi0: LVM-Thin-Local:vm-104-disk-1,discard=on,iothread=1,size=128G

scsihw: virtio-scsi-single

smbios1: uuid=6f37a799-0309-4073-99b3-6111a85f8e87

sockets: 1

vmgenid: 90a39d27-a595-4f4d-8f26-1dad89b47c32

From the guest:

lspci | grep VGA

00:01.0 VGA compatible controller: Device 1234:1111 (rev 02)

01:00.0 VGA compatible controller: NVIDIA Corporation AD104 [GeForce RTX 4070] (rev a1)
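Since lspci sees the card but nvidia-smi cannot talk to the driver, one thing worth checking is which kernel driver is actually bound to the GPU inside the guest. The sketch below parses `lspci -k` style output for that; the helper name `driver_in_use` is made up for this example.

```shell
# Print the kernel driver bound to a PCI device, read from `lspci -k` style
# text on stdin. Prints "none" when no "Kernel driver in use:" line is present.
driver_in_use() {
    awk -F': ' '
        /Kernel driver in use:/ { print $2; found = 1; exit }
        END { if (!found) print "none" }
    '
}

# Typical use inside the guest (01:00.0 is the GPU address shown above):
#   lspci -k -s 01:00.0 | driver_in_use
```

If this prints `nouveau` or `none` instead of `nvidia`, the NVIDIA module is not loaded, and `dmesg | grep -i nvidia` may say why. One possible cause, which is only an assumption and not confirmed in this thread: the config has `pre-enrolled-keys=1`, which enables Secure Boot in the guest, and an unsigned driver module would then be rejected. `mokutil --sb-state` in the guest shows whether Secure Boot is active.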


OK, I got it working after a few hours of messing around. I gave up and installed a new, clean VM, then installed the NVIDIA 535 drivers manually using apt. And voilà, it's working.


How did you solve the passthrough with your AMD 6650XT GPU? I have a "No Signal" problem with my AMD RX 570 in a Manjaro VM.

Here is my VM conf:

Code:

agent: 1

bios: ovmf

boot: order=scsi1;ide2

cores: 6

cpu: host

efidisk0: vm_lxc_storage:vm-1004-disk-0,efitype=4m,size=4M

hostpci0: mapping=rx570,pcie=1

ide2: none,media=cdrom

machine: q35

memory: 16384

meta: creation-qemu=9.0.2,ctime=1737725644

name: Manjaro24

net0: virtio=BC:24:11:51:EB:31,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

scsi1: /dev/disk/by-id/usb-Samsung_SSD_850_EVO_500G_462104130242-0:0,size=488386584K

scsihw: virtio-scsi-single

smbios1: uuid=ba225535-e240-4873-87df-62bcb942bb59

sockets: 1

usb0: host=1-4.4

usb1: host=1-4.3

vga: none

vmgenid: 2169b721-a5fa-47ae-8566-f88550bc1301

Please tell me your solution for AMD GPU passthrough. When CPU usage gets over 50% it works with my Manjaro VM, but it takes several restarts to get there. Any idea?

I sold the 6650 and bought an RTX 4070. To get the AMD card working, I followed the instructions in this thread. My main issue was the reset bug. Make sure the AMD drivers are blacklisted. Also check the GRUB parameters: start with the basics, quiet and iommu=pt. amd_iommu=on is not a valid option (assuming you have an AMD setup). Check that IOMMU is enabled in the BIOS! Does the GPU show up in the VM using lspci? Are you passing the GPU through as the primary GPU?
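For the kernel parameter check, a small sketch that looks at the command line the host actually booted with, rather than at the config file. The function name `cmdline_has` is made up for this example. Checking /proc/cmdline matters because, depending on how the host boots, Proxmox takes the parameters either from /etc/default/grub (applied with `update-grub`) or from /etc/kernel/cmdline (applied with `proxmox-boot-tool refresh`), and editing the wrong file has no effect.

```shell
# Succeed if the exact parameter $1 is on the kernel command line.
# $2 = file to read (defaults to the running kernel's command line).
cmdline_has() {
    tr -s ' ' '\n' < "${2:-/proc/cmdline}" | grep -qxF -- "$1"
}
```

For example, `cmdline_has iommu=pt && echo "iommu=pt is active"` on the host after a reboot. The match is on whole parameters, so `iommu=on` does not match `amd_iommu=on`.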


A couple of questions:

1) Should we not use the pcie_acs_override option at all? I thought I had to use it if I cannot get separate IOMMU groups. In my case the iGPU is in a separate IOMMU group with or without the pcie_acs_override option.

2) I assume that if I follow this guide, the GPU is passed through and not available to other VMs, correct?
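To check the grouping that question 1 is about, a minimal sketch that prints every device together with its IOMMU group on the host. The function name `list_iommu_groups` is made up for this example. As a general rule of thumb (not something stated in the guide): if the device you want to pass through shares its group only with its own functions, the override should not be needed.

```shell
# Print "group <n>: <pci-address>" for every device in every IOMMU group.
# $1 = sysfs directory to scan (defaults to the real location).
list_iommu_groups() {
    root="${1:-/sys/kernel/iommu_groups}"
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue   # no groups: the glob stays unexpanded
        group="${dev#"$root"/}"     # strip the root prefix
        group="${group%%/*}"        # keep only the group number
        echo "group $group: ${dev##*/}"
    done
}
```

Piping the output through `grep` for the GPU's PCI address shows its group number, and a second `grep` for that group shows what else shares it.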


I have the reset issue with an AMD 8845HS (Radeon 780M graphics) on Proxmox 8.3.0 w/GRUB in UEFI mode, ZFS.


I managed to set up a Windows VM with GPU passthrough that runs Parsec and uses hardware (GPU) decoding (i.e. it works fine).

But when I stop it and restart it I get the following error:

Code:

error writing '1' to '/sys/bus/pci/devices/0000:c6:00.0/reset': Inappropriate ioctl for device

failed to reset PCI device '0000:c6:00.0', but trying to continue as not all devices need a reset

TASK ERROR: timeout waiting on systemd

In /etc/default/grub I have:

Code:

GRUB_CMDLINE_LINUX_DEFAULT="quiet amd_iommu=on iommu=pt"

cat /etc/kernel/cmdline shows:

Code:

root=ZFS=rpool/ROOT/pve-1 boot=zfs quiet amd_iommu=on iommu=pt

After trying asded's response for AMDResetBugFix,

cat /etc/modules shows:

Code:

vfio

vfio_iommu_type1

vfio_pci

vfio_virqfd

vendor-reset

Also, dmesg | grep 'remapping' shows:

Code:

AMD-Vi: Interrupt remapping enabled

but I don't get anything similar to this when I start my VM:

Code:

[57709.971750] vfio-pci 0000:01:00.0: AMD_POLARIS10: version 1.1

[57709.971755] vfio-pci 0000:01:00.0: AMD_POLARIS10: performing pre-reset

[57709.971881] vfio-pci 0000:01:00.0: AMD_POLARIS10: performing reset

[57709.971885] vfio-pci 0000:01:00.0: AMD_POLARIS10: CLOCK_CNTL: 0x0, PC: 0x2055c

[57709.971889] vfio-pci 0000:01:00.0: AMD_POLARIS10: Performing BACO reset

[57710.147491] vfio-pci 0000:01:00.0: AMD_POLARIS10: performing post-reset

[57710.171814] vfio-pci 0000:01:00.0: AMD_POLARIS10: reset result = 0

So I'm thinking maybe I configured something wrong.

Any advice will be very appreciated
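One thing that may help narrow this down: on kernel 5.15 and newer, each PCI device has a reset_method attribute next to reset, listing the reset mechanisms the kernel will try. The sketch below just reads it; the function name `reset_methods` is made up for this example. The AMD_POLARIS10 lines quoted above come from the vendor-reset module, which only acts on the GPU families it supports, so it is worth checking its documentation to see whether the 780M is among them before assuming a misconfiguration.

```shell
# Print the reset methods the kernel offers for a PCI device, or "none".
# $1 = PCI address, $2 = sysfs devices directory (defaults to the real one).
reset_methods() {
    file="${2:-/sys/bus/pci/devices}/$1/reset_method"
    methods=""
    [ -r "$file" ] && methods=$(cat "$file")
    echo "${methods:-none}"
}
```

On the host, `reset_methods 0000:c6:00.0` would show what is available for the device from the error message. As far as I know, vendor-reset is only used when `device_specific` is selected there, but treat that as an assumption and verify it for your kernel.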

Thank you for this guide. Small bug: the Intel lines are missing 'blacklist' at the beginning of the line, which results in errors when rebuilding the initramfs.

# Intel drivers

echo "snd_hda_intel" >> /etc/modprobe.d/blacklist.conf

echo "snd_hda_codec_hdmi" >> /etc/modprobe.d/blacklist.conf

echo "i915" >> /etc/modprobe.d/blacklist.conf
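A possible corrected version of those lines, written as a small helper so that running it twice does not add duplicate entries. The function name `blacklist_module` is made up for this example; the default file path is the one used in the lines above.

```shell
# Append "blacklist <module>" to a modprobe config file unless already present.
# $1 = module name, $2 = config file (defaults to the file used in the guide).
blacklist_module() {
    conf="${2:-/etc/modprobe.d/blacklist.conf}"
    line="blacklist $1"
    grep -qxF -- "$line" "$conf" 2>/dev/null || echo "$line" >> "$conf"
}

# Intel drivers, as root on the host:
#   for m in snd_hda_intel snd_hda_codec_hdmi i915; do blacklist_module "$m"; done
```

Any lines already written without the `blacklist` prefix still need to be removed from the file by hand. After that, rebuild the initramfs (for example with `update-initramfs -u -k all`) and reboot.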

Thank you so much for your effort in putting this together; it's my go-to for passthrough. Your GitLab link no longer works. Do you have a new link?

Thanks, and yes, I finally gave up on (GitLab) static pages to host my blog. New address: https://asded.fr

You can see this message to fix the error:

After almost a year, this guide fixed (for me) the famous problem:

Code:

error writing '1' to '/sys/bus/pci/devices/0000:c6:00.0/reset': Inappropriate ioctl for device

It's a Windows-side fix, but it works for me: I don't have to restart the host anymore, and the Win11 VM stops and restarts like a charm.

I hope it's useful for someone.

Hoping someone can help me. I have a Minisforum BD790iX3D motherboard, and when I verify that IOMMU is enabled by executing dmesg | grep -e IOMMU I get the following:

[ 0.346983] pci 0000:00:00.2: AMD-Vi: IOMMU performance counters supported

[ 0.351570] perf/amd_iommu: Detected AMD IOMMU #0 (2 banks, 4 counters/bank).

Does this mean that I have enabled IOMMU?
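Those two lines suggest the kernel found and initialised the AMD IOMMU, but a more direct check is whether any IOMMU groups were created. A minimal sketch, with the made-up function name `iommu_group_count`:

```shell
# Count IOMMU groups; zero means the kernel did not set up any groups.
# $1 = sysfs directory to inspect (defaults to the real location).
iommu_group_count() {
    root="${1:-/sys/kernel/iommu_groups}"
    count=0
    for g in "$root"/*/; do
        [ -d "$g" ] && count=$((count + 1))
    done
    echo "$count"
}
```

If `iommu_group_count` prints a number greater than zero on the host, the IOMMU is active and devices have been sorted into groups. If it prints 0, check the BIOS setting again.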
