Hi

I always get this error from vreset.service:

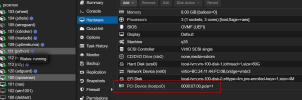

```text
× vreset.service - AMD GPU reset method to 'device_specific'
Loaded: loaded (/etc/systemd/system/vreset.service; enabled; preset: enabled)
Active: failed (Result: exit-code) since Mon 2023-10-02 11:06:56 EEST; 2min 54s ago
Duration: 1ms
Process: 3253 ExecStart=/usr/bin/bash -c echo device_specific > /sys/bus/pci/devices/0000:07:00.0/reset_method (code=ex>
Main PID: 3253 (code=exited, status=1/FAILURE)
CPU: 2ms

Oct 02 11:06:56 pve1 systemd[1]: Started vreset.service - AMD GPU reset method to 'device_specific'.
Oct 02 11:06:56 pve1 bash[3253]: /usr/bin/bash: line 1: echo: write error: Invalid argument
Oct 02 11:06:56 pve1 systemd[1]: vreset.service: Main process exited, code=exited, status=1/FAILURE
Oct 02 11:06:56 pve1 systemd[1]: vreset.service: Failed with result 'exit-code'.
```

```text
root@pve1:~# dmesg | grep vendor_reset
[ 7.620129] vendor_reset: loading out-of-tree module taints kernel.
[ 7.620177] vendor_reset: module verification failed: signature and/or required key missing - tainting kernel
[ 7.698619] vendor_reset_hook: installed
```
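One way to narrow this down: reading the kernel's sysfs `reset_method` attribute lists the reset methods currently enabled for the device, and the kernel appears to reject a write naming a method the device cannot use with `Invalid argument`, which would match the error above. The following is a minimal diagnostic sketch, assuming bash and the device address used in the service (`0000:07:00.0`). The helper `has_reset_method` is hypothetical (not part of vendor-reset or systemd), and the script only reports; it writes nothing to sysfs.

```shell
#!/usr/bin/env bash
# Sketch: check whether a PCI device currently lists a given reset method.
# has_reset_method is a hypothetical helper, not part of vendor-reset or systemd.

# has_reset_method FILE METHOD
#   returns 0 if METHOD is listed in FILE
#   returns 1 if FILE is readable but METHOD is not listed
#   returns 2 if FILE is missing or unreadable
has_reset_method() {
    local file="$1" method="$2" m
    [ -r "$file" ] || return 2
    # reset_method holds one space-separated line, e.g. "flr bus"
    for m in $(<"$file"); do
        [ "$m" = "$method" ] && return 0
    done
    return 1
}

# Device address taken from the failing vreset.service above.
dev=/sys/bus/pci/devices/0000:07:00.0

# Report only; nothing is written to sysfs here.
if has_reset_method "$dev/reset_method" device_specific; then
    echo "device_specific is available for $dev"
else
    echo "device_specific is not listed for $dev: $(cat "$dev/reset_method" 2>/dev/null)" >&2
fi
```

If this reports `device_specific` as not listed, the `echo` in vreset.service would be expected to fail in the same way, and the printed list shows which methods the kernel does offer for the card at that moment.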
