All good.

Oops, not my intention.

Plus, this was in the first few lines of the OP:

"Setting up the Debian container

We will be using a Debian LXC as the base for PBS."


But Udo's remark wasn't about the OP's post; it was about antubis' question:

Just a thought... since TrueNAS SCALE and PBS are both based on Debian, why not install PBS directly on the TrueNAS system (without LXC or VMs)?

Fair point, and accepted.

The answer to that is "no", which is exactly what Udo explained. So your dispute is just a misunderstanding, because the two of you were talking about different things.

@PwrBank, I think I have nailed the permissions needed, and I went one step further and wrote a script that can be run as a cron job on TrueNAS to back up the PBS dataset to Azure (in my case). The script checks that PBS isn't writing to the datastore, stops the Proxmox Backup service (not the container), takes a snapshot, and restarts the PBS service in the container. The snapshot is then mounted and rcloned to Azure, and everything is cleaned up (the snapshot is unmounted and then deleted). Let me know if you are interested; for me, this is going to be my production pbs1 from now on.
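The property that matters most in the stop → snapshot → restart sequence described above is that PBS comes back up even if the snapshot step fails. A minimal sketch of that pattern, using an `EXIT` trap inside a subshell. The three hook functions are hypothetical placeholders that only print; the `incus`/`zfs` commands in their comments are assumptions based on the script in this thread, not a tested drop-in.

```shell
#!/bin/bash
# Sketch: quiesce a service, take a snapshot, and always restart the service.
# The hook functions are hypothetical placeholders that only echo.
set -eu

stop_service()  { echo "stop"; }      # e.g. incus exec pbs1 -- systemctl stop proxmox-backup
start_service() { echo "start"; }     # e.g. incus exec pbs1 -- systemctl start proxmox-backup
take_snapshot() { echo "snapshot"; }  # e.g. zfs snapshot "pool/dataset@name"

# The body runs in a subshell ( ... ), so the EXIT trap fires as soon as the
# function finishes, whether take_snapshot succeeded or failed.
snapshot_quiesced() (
  set -e                      # re-enable errexit (bash clears it in $(...))
  stop_service
  trap start_service EXIT     # armed only after the stop actually succeeded
  take_snapshot
)

snapshot_quiesced
```

Call `snapshot_quiesced` as a plain command: if it is used as an `if` condition or on the left of `||`/`&&`, the shell ignores `set -e` inside it, although the trap still restarts the service.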

#!/bin/bash
# A simple script to back up PBS to Crypt remote
_lock_file=/root/.tertiary_backup.lock
_schedule_time=1:30
_max_duration="7.5h"
_cutoff_mode="soft"
_datastore_dir_name=Primary_Backup
_datastore_dir_path=/mnt/datastore/$_datastore_dir_name
_config_dir=$_datastore_dir_path/host_backup
_remote_user=user
_remote_ip=tailscale-ip
_remote_port=22
_remote_name=Tertiary_Backup_Crypt

...and that is why

I really wouldn't do this if you care about your data; in this thread a similar script failed:

Probably you know that, but I think it's worth mentioning: every time you switch the destination, the "dirty bitmap" is dropped. If you toggle between destinations every time, then every backup needs to read the complete source.

Considering my number of backups and the speed with the metadata option, I won't use PBS sync but two jobs in the cluster.

tl;dr never let a backup tool back up live files without understanding the implications... and tl;dr my PBS data is quite safe with this approach. If it isn't, then we have bigger issues, as it would mean the on-disk state can't be trusted....
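One way to make sure a copy tool never reads live files is to point it at the snapshot rather than at the dataset's live mountpoint. On OpenZFS for Linux, a snapshot's read-only contents are normally reachable under `<mountpoint>/.zfs/snapshot/<name>`, which could stand in for a separate `mount -t zfs` step. A small sketch assuming that layout; the mountpoint and snapshot names below are hypothetical examples.

```shell
#!/bin/bash
# Sketch: build the read-only .zfs/snapshot path for a dataset snapshot, so a
# copy tool reads frozen data instead of the live dataset.
# Assumption: the dataset is mounted and exposes <mountpoint>/.zfs/snapshot.
set -eu

snapshot_dir() {
  local mountpoint="${1%/}" snap="$2"   # drop one trailing slash, if any
  printf '%s/.zfs/snapshot/%s\n' "$mountpoint" "$snap"
}

# On a real host the mountpoint could be looked up with, for example:
#   zfs get -H -o value mountpoint rust/local-backups/pbs
snapshot_dir /mnt/rust/local-backups/pbs cloudbackup-example
```

The resulting path could then be handed to `rclone sync` as the source; whether that is preferable to an explicit read-only mount is a judgement call for your setup.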

No, I didn't. I wasn't going to change the destination, just have two jobs. I had assumed each job maintains its own bitmap and compares against the metadata (I used metadata mode). I will do some testing and look out for what you said; it's not like the sync jobs will be long, I just heard they were slower. Thanks for the tip.

Well, I like to think that, but shhhh, do you hear that? Sounds like my pride coming before a fall. Like this week, when I eventually realized I was having CephFS issues on one node because I had accidentally overwritten the keyfile in /etc/pve/priv with a different key using a script.... Thank god I never rebooted the other two nodes....

You obviously know what you are doing and know of possible problems

#!/bin/bash
set -euo pipefail

# === CONFIG ===
INCUS_CNAME="pbs1"
ZFS_DATASET="rust/local-backups/pbs"
SNAP_NAME="cloudbackup-$(date +%Y%m%d-%H%M)"
SNAP_MNT="/mnt/pbs-snapshot-${SNAP_NAME}"
AZURE_CONTAINER="pbs"
LOGFILE="/var/log/pbs-cloud-backup.log"
TASK_ID=2
MAX_WAIT_MINUTES=15
WAIT_INTERVAL=30
MAX_ATTEMPTS=$(( MAX_WAIT_MINUTES * 60 / WAIT_INTERVAL ))
ATTEMPT=0

# State flags read by cleanup() so it only undoes steps that actually ran
CONFIG_FILE=""
PBS_STOPPED=0
SNAP_TAKEN=0
SNAP_MOUNTED=0

# === Logging Setup ===
LOG_TAG="PBSCloudBackup"
log_info()  { local msg="[$(date '+%F %T')] ℹ️ $1"; echo "$msg"; logger -t "$LOG_TAG" "$msg"; }
log_warn()  { local msg="[$(date '+%F %T')] ⚠️ $1"; echo "$msg"; logger -p user.warn -t "$LOG_TAG" "$msg"; }
log_error() { local msg="[$(date '+%F %T')] ❌ $1"; echo "$msg"; logger -p user.err -t "$LOG_TAG" "$msg"; }

# === Redirect all stdout/stderr to log file + terminal ===
# (done once, and only after LOGFILE has been defined)
exec > >(tee -a "$LOGFILE") 2>&1

# === Cleanup, run on every exit (success or failure) ===
# Restarts PBS if it is still stopped, deletes the temp rclone config (it
# contains the storage key), then unmounts and destroys the snapshot.
cleanup() {
  local rc=$?
  if (( PBS_STOPPED )); then
    /usr/bin/incus exec "$INCUS_CNAME" -- systemctl start proxmox-backup \
      || log_error "Failed to restart PBS in container '$INCUS_CNAME'"
  fi
  if [[ -n "$CONFIG_FILE" ]]; then rm -f "$CONFIG_FILE"; fi
  if (( SNAP_MOUNTED )); then umount "$SNAP_MNT" || log_warn "Failed to unmount $SNAP_MNT"; fi
  if (( SNAP_TAKEN )); then
    zfs destroy "${ZFS_DATASET}@${SNAP_NAME}" || log_warn "Failed to destroy snapshot ${SNAP_NAME}"
  fi
  rmdir "$SNAP_MNT" 2>/dev/null || true
  exit "$rc"
}
trap cleanup EXIT

log_info "Starting PBS snapshot + Azure backup job"

# === STEP 0: Confirm container exists ===
if ! /usr/bin/incus list --format json | jq -e --arg n "$INCUS_CNAME" '.[] | select(.name == $n)' >/dev/null; then
  log_error "Container '$INCUS_CNAME' not found. Aborting."
  exit 1
fi

# === STEP 1: Wait for PBS to be idle ===
log_info "⏳ Checking for running PBS tasks..."
while /usr/bin/incus exec "$INCUS_CNAME" -- \
    proxmox-backup-manager task list --output-format json \
    | jq -e '.[] | select(has("endtime") | not)' >/dev/null; do
  if (( ATTEMPT++ >= MAX_ATTEMPTS )); then
    log_error "Timeout: PBS still has running tasks after $MAX_WAIT_MINUTES minutes"
    exit 1
  fi
  log_info "PBS busy... retrying ($ATTEMPT/$MAX_ATTEMPTS)"
  sleep "$WAIT_INTERVAL"
done
log_info "✅ PBS is idle. Proceeding with backup"

# === STEP 2: Stop PBS inside container ===
# To verify: client uploads are served by proxmox-backup-proxy, so check
# whether that unit also needs stopping to fully quiesce the datastore.
log_info "Stopping PBS server..."
if ! /usr/bin/incus exec "$INCUS_CNAME" -- systemctl stop proxmox-backup; then
  log_error "Failed to stop PBS in container '$INCUS_CNAME'"
  exit 1
fi
PBS_STOPPED=1

# === STEP 3: Snapshot ===
# If this fails, set -e exits and the EXIT trap restarts PBS.
log_info "Taking ZFS snapshot: ${SNAP_NAME}"
zfs snapshot "${ZFS_DATASET}@${SNAP_NAME}"
SNAP_TAKEN=1

# === STEP 4: Restart PBS ===
log_info "Restarting PBS server..."
if ! /usr/bin/incus exec "$INCUS_CNAME" -- systemctl start proxmox-backup; then
  log_error "Failed to start PBS in container '$INCUS_CNAME'"
  exit 1
fi
PBS_STOPPED=0

# === STEP 5: Mount Snapshot ===
log_info "Mounting snapshot: ${SNAP_MNT}"
mkdir -p "$SNAP_MNT"
if ! mount -t zfs -o ro "${ZFS_DATASET}@${SNAP_NAME}" "$SNAP_MNT"; then
  log_error "Failed to mount snapshot. Cleaning up."
  exit 1
fi
SNAP_MOUNTED=1

# === STEP 6: Get Azure credentials ===
log_info "Extracting Azure credentials from Cloud Sync task ID ${TASK_ID}..."
CRED_ID=$(midclt call cloudsync.query | jq ".[] | select(.id == ${TASK_ID}) | .credentials.id")
# read returns non-zero on empty input; the check below handles that case
IFS=$'\t' read -r ACCOUNT KEY ENDPOINT < <(
  midclt call cloudsync.credentials.query \
    | jq -r ".[] | select(.id == ${CRED_ID}) | .provider | [.account, .key, .endpoint] | @tsv"
) || true
if [[ -z "${ACCOUNT:-}" || -z "${KEY:-}" || -z "${ENDPOINT:-}" ]]; then
  log_error "Failed to extract Azure credentials. Aborting."
  exit 1
fi

CONFIG_FILE=$(mktemp)
cat <<EOF > "$CONFIG_FILE"
[azure]
type = azureblob
account = ${ACCOUNT}
key = ${KEY}
endpoint = ${ENDPOINT}
access_tier = Cool
EOF

# === STEP 7: Sync to Azure ===
log_info "☁️ Syncing snapshot to Azure container: '$AZURE_CONTAINER'"
if ! rclone --config "$CONFIG_FILE" sync "$SNAP_MNT" "azure:${AZURE_CONTAINER}" \
    --transfers=32 \
    --checkers=16 \
    --azureblob-chunk-size=100M \
    --buffer-size=265M \
    --log-file="$LOGFILE" \
    --log-level INFO \
    --stats=10s \
    --stats-one-line \
    --stats-one-line-date \
    --stats-log-level NOTICE \
    --create-empty-src-dirs; then
  log_error "Rclone sync failed"
  exit 1
fi

# === STEP 8: Cleanup ===
# The EXIT trap deletes the temp config, unmounts and destroys the snapshot.
log_info "Cleaning up snapshot and temp files"
log_info "✅ Backup complete!"

Tell me how to test and I will test and see if I have the same issue.

As an update, the only thing that isn't working as expected (within reason) is the PBS benchmarking tool. It tries to reference 127.0.0.1 for testing instead of the actual IP of the PBS instance.

Other than that, still working like a champ!

@PwrBank

That's normal behaviour and has nothing to do with running PBS in an LXC on TrueNAS.

You didn't specify a valid PBS repository (datastore URL), so it assumed you meant a local one. This is by design (see the PBS client docs).
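For reference, a hedged sketch of passing an explicit repository to the benchmark. The client's repository syntax is `[[username@]server[:port]:]datastore`, and the `PBS_REPOSITORY` environment variable can be set instead of `--repository`. The user, address, port and datastore below are hypothetical placeholders, and the script only prints the command rather than running it.

```shell
#!/bin/bash
# Sketch: build a PBS repository string and print the benchmark command.
# All values passed to pbs_repository below are hypothetical placeholders.
set -eu

# Repository syntax: [[username@]server[:port]:]datastore
# The user ID already includes its realm, e.g. "root@pam".
pbs_repository() {
  local userid="$1" host="$2" port="$3" datastore="$4"
  printf '%s@%s:%s:%s\n' "$userid" "$host" "$port" "$datastore"
}

REPO="$(pbs_repository root@pam 192.0.2.10 8007 store1)"

# Pass the repository explicitly so the TLS upload test targets the PBS host.
echo proxmox-backup-client benchmark --repository "$REPO"
```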

Ahh, you are right. I added the connection info from PBS and it worked:

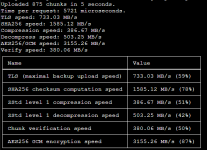

Uploaded 140 chunks in 5 seconds.
Time per request: 37906 microseconds.
TLS speed: 110.65 MB/s
SHA256 speed: 1844.55 MB/s
Compression speed: 621.61 MB/s
Decompress speed: 902.09 MB/s
AES256/GCM speed: 5035.04 MB/s
Verify speed: 609.42 MB/s

┌───────────────────────────────────┬─────────────────────┐
│ Name                              │ Value               │
╞═══════════════════════════════════╪═════════════════════╡
│ TLS (maximal backup upload speed) │ 110.65 MB/s (9%)    │
├───────────────────────────────────┼─────────────────────┤
│ SHA256 checksum computation speed │ 1844.55 MB/s (91%)  │
├───────────────────────────────────┼─────────────────────┤
│ ZStd level 1 compression speed    │ 621.61 MB/s (83%)   │
├───────────────────────────────────┼─────────────────────┤
│ ZStd level 1 decompression speed  │ 902.09 MB/s (75%)   │
├───────────────────────────────────┼─────────────────────┤
│ Chunk verification speed          │ 609.42 MB/s (80%)   │
├───────────────────────────────────┼─────────────────────┤
│ AES256 GCM encryption speed       │ 5035.04 MB/s (138%) │
└───────────────────────────────────┴─────────────────────┘
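The benchmark figures above are internally consistent, which makes a handy sanity check. 140 requests at 37906 µs each take about 5.31 s (printed as "5 seconds"). If each benchmark chunk is 4 MiB (an assumption, since the chunk size is not shown in the output), then 4 MiB per 37906 µs matches the reported 110.65 MB/s TLS speed, taking MB as 10^6 bytes. A small awk-based check:

```shell
#!/bin/bash
# Sketch: re-derive the benchmark's TLS figure from its other outputs.
# Assumption: each uploaded benchmark chunk is 4 MiB (not shown in the output).
set -eu

# Total wall time in seconds for n requests taking us microseconds each.
total_seconds() {
  awk -v n="$1" -v us="$2" 'BEGIN { printf "%.2f\n", n * us / 1e6 }'
}

# Throughput in MB/s (10^6 bytes/s): bytes per microsecond equals MB/s.
throughput_mb_s() {
  awk -v b="$1" -v us="$2" 'BEGIN { printf "%.2f\n", b / us }'
}

CHUNK_BYTES=$((4 * 1024 * 1024))
echo "total time: $(total_seconds 140 37906) s"
echo "throughput: $(throughput_mb_s "$CHUNK_BYTES" 37906) MB/s"
```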