What?

Do you want to use Proxmox Backup Server (PBS) to back up your servers, but with TrueNAS handling the storage? I've been using this setup for the last few weeks and it has worked perfectly.

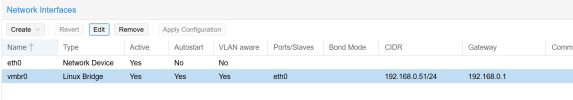

With the latest update of TrueNAS Community Edition, 25.04, you can run Linux Containers (LXC) natively on the system.

Why?

This lets the ZFS back end of TrueNAS handle snapshots, replication, etc. for a robust storage layer. TrueNAS is a little more user friendly when it comes to managing the actual devices involved in the storage. You can easily expand the storage, divide it up for other uses, and run other services if you want to.

How?

Setting up the Debian container

We will be using a Debian LXC as the base for PBS.

Navigate to the Instance section within TrueNAS

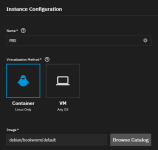

And create a new instance with a Debian Bookworm image

(By default it will allow all RAM and CPU to be shared with the container, set this according to your needs)

Add a disk and create a new dataset for use with the PBS container

For the destination, I chose

/mnt/pbs

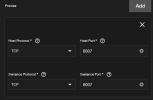

If you'd like to use the same IP address as the TrueNAS system, add a proxy setting. I assigned the default ports used by PBS with HTTPS.

Press Create

(THIS PART COULD USE FEEDBACK FROM THE COMMUNITY, I'M NOT GOOD AT LINUX PERMISSIONS YET, THIS JUST WORKED FOR ME AND MAY NOT BE THE MOST SECURE SETTINGS)

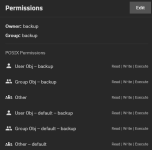

Navigate to the dataset that was created and change the permissions to the preset ACL named "POSIX_OPEN" and set the owner and group to "backup"

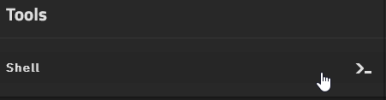

Navigate back to the Instance page and connect to the container with the Shell button

Installing PBS

Once you are in the shell of the container, set the root user password with

passwd

Create the directory that will be used for the PBS datastore (this will be the directory mounted during LXC creation plus a folder inside it)

mkdir /mnt/pbs/data

Run the following commands

Bash:

# Update available repositories

apt update

# Install wget and nano

apt install wget nano

# Add the Proxmox repository key to the install

wget https://enterprise.proxmox.com/debian/proxmox-release-bookworm.gpg -O /etc/apt/trusted.gpg.d/proxmox-release-bookworm.gpg

Edit the apt repository list and add the Proxmox repositories

nano /etc/apt/sources.list

Add the following to the sources list

Code:

# PBS no-subscription

deb http://download.proxmox.com/debian/pbs bookworm pbs-no-subscription

# security updates

deb http://security.debian.org/debian-security bookworm-security main contrib

Update the apt repositories and install PBS

Bash:

apt update

apt install proxmox-backup-server

Once the installation process is finished, you should be able to connect to the TrueNAS IP on port 8007 via HTTPS

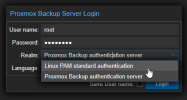
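If the login page does not load, a quick check from the container shell can tell you whether anything is listening on 8007 at all. This is only a rough sketch: `port_open` is a helper name I made up, and `127.0.0.1` assumes you run it inside the container. As far as I know the web UI is served by the `proxmox-backup-proxy` service, so that is the one the fallback message points at.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if something accepts TCP connections on host:port.
# Uses bash's built-in /dev/tcp redirection, so no extra packages are needed.
port_open() {
  local host="$1" port="$2"
  # timeout keeps the check from hanging if packets are silently dropped
  timeout 3 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# 8007 is the PBS web UI port used in this guide.
# 127.0.0.1 is an assumption: it only makes sense from inside the container.
if port_open 127.0.0.1 8007; then
  echo "Something is listening on 8007"
else
  echo "Nothing on 8007 yet; try: systemctl status proxmox-backup-proxy"
fi
```

If the port is open inside the container but not reachable from your browser, the proxy setting from the instance creation step is the next thing I would look at.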

Log in with the root account, using the Linux PAM realm

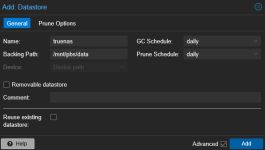

Create a new datastore using the path that was created at the beginning of the PBS install

Press Add

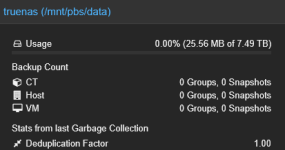
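If PBS refuses the path, it may be worth checking from the container shell that the directory exists and who owns it. The sketch below is just that, a sketch: `dir_owner` is a made-up helper name, and expecting `backup:backup` is my assumption based on the owner and group set on the TrueNAS dataset earlier.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print "user:group" for a directory, or fail if it does not exist.
dir_owner() {
  local dir="$1"
  [ -d "$dir" ] || { echo "missing: $dir" >&2; return 1; }
  stat -c '%U:%G' "$dir"
}

# /mnt/pbs/data is the datastore path created earlier in this guide.
if owner="$(dir_owner /mnt/pbs/data)"; then
  echo "datastore directory is owned by ${owner}"   # assumption: backup:backup is what you want to see
else
  echo "datastore directory not found; re-check the disk mount and the mkdir step"
fi
```

The same helper works on any other path, for example `dir_owner /mnt/pbs` to look at the mount point itself.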

The new datastore should be created and show the full size of the dataset that was created for the data in TrueNAS

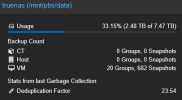

This has been used heavily in my test environment and has been working perfectly.
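Since a test container tends to get rebuilt, here is a rough sketch of scripting the sources list edit from the install steps instead of doing it by hand in nano. The function names `add_line_once` and `add_pbs_repos` are my own invention, not part of any Proxmox tooling. The two `deb` lines are the same ones listed in the install steps.

```bash
#!/usr/bin/env bash
# Hypothetical helper: append a line to a file only if that exact line is not already present.
add_line_once() {
  local line="$1" file="$2"
  touch "$file"
  # -x matches the whole line, -F treats the pattern as a literal string
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Hypothetical wrapper: add the two repository lines from this guide to the given file.
add_pbs_repos() {
  local file="$1"
  add_line_once "deb http://download.proxmox.com/debian/pbs bookworm pbs-no-subscription" "$file"
  add_line_once "deb http://security.debian.org/debian-security bookworm-security main contrib" "$file"
}
```

Inside the container you would call it as `add_pbs_repos /etc/apt/sources.list` and then run `apt update`. Running it twice leaves the file unchanged the second time, so it should be safe to keep in a rebuild script.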

TrueNAS also takes a scheduled snapshot of the dataset every 6 hours and keeps each one for 3 days, which should help with recovering from accidental deletion or from ransomware hitting the PBS instance.
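To get a feel for what that schedule means in practice, the number of snapshots kept at any one time is simple arithmetic. A tiny sketch follows; `snapshots_kept` is a hypothetical helper, and it assumes the schedule runs around the clock with no skipped runs.

```bash
#!/usr/bin/env bash
# Hypothetical helper: how many snapshots exist at once for a given interval and retention.
snapshots_kept() {
  local interval_hours="$1" keep_days="$2"
  echo $(( keep_days * 24 / interval_hours ))
}

# Every 6 hours, kept for 3 days, as described above.
snapshots_kept 6 3   # prints 12
```

So there should be roughly 12 restore points to roll back to, the oldest being about 3 days old. On the TrueNAS shell, `zfs list -t snapshot` should show them if you want to confirm.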
