Hello,

This has probably been discussed often, but I have a question about it too:

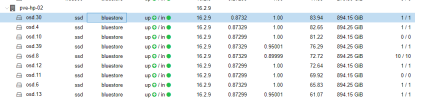

Ever since we set up our Ceph cluster, we have seen uneven usage across all OSDs.

- 4 nodes with 7x 1 TB SSDs (1U, no free drive bays)
- 3 nodes with 8x 1 TB SSDs (2U, some free drive bays)

= 52 SSDs in total
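To put the hardware into numbers, here is a quick capacity check. It is only a sketch: it assumes a replicated pool with size=3 (not stated above), uses Ceph's default nearfull ratio of 0.85, and ignores the TB/TiB difference and BlueStore overhead.

```python
# Hardware as described above: (node count, SSDs per node, SSD size in TB)
NODE_GROUPS = [
    (4, 7, 1.0),  # 1U nodes, no free bays
    (3, 8, 1.0),  # 2U nodes, some free bays
]

REPLICA_SIZE = 3       # assumption: replicated pool with size=3
NEARFULL_RATIO = 0.85  # Ceph's default mon_osd_nearfull_ratio


def osd_count(groups):
    """Total number of OSDs (one OSD per SSD)."""
    return sum(nodes * per_node for nodes, per_node, _ in groups)


def raw_capacity_tb(groups):
    """Total raw capacity in TB across all nodes."""
    return sum(nodes * per_node * size for nodes, per_node, size in groups)


osds = osd_count(NODE_GROUPS)
raw = raw_capacity_tb(NODE_GROUPS)
usable = raw / REPLICA_SIZE  # every object is stored REPLICA_SIZE times

print(f"{osds} OSDs, {raw:.0f} TB raw, about {usable:.1f} TB usable at size={REPLICA_SIZE}")
print(f"about {usable * NEARFULL_RATIO:.1f} TB before a perfectly balanced cluster reaches nearfull")
```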

PVE 7.2-11

All Ceph nodes show the same picture:

The OSDs are between 60% and 80% full. Why?

As a result, my pool on the SSD device class is nearfull (88%), but the OSDs are not!

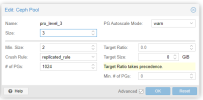
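My understanding (please correct me) is that the pool's %USED in `ceph df` is derived from MAX AVAIL, and MAX AVAIL is estimated from how much can still be written before the *first* OSD in the pool's CRUSH rule reaches the full ratio. Here is a simplified model of that idea. The `Osd` class, the function names, and the example numbers are hypothetical; only the default full ratio of 0.95 is Ceph's.

```python
from dataclasses import dataclass


@dataclass
class Osd:
    size_tb: float  # raw capacity
    used_tb: float  # raw space already used
    weight: float   # CRUSH weight (by default roughly the size in TiB)


FULL_RATIO = 0.95  # Ceph's default mon_osd_full_ratio
REPLICA_SIZE = 3   # assumption: replicated pool, size=3


def pool_max_avail_tb(osds, full_ratio=FULL_RATIO, size=REPLICA_SIZE):
    """Simplified MAX AVAIL: new data lands on each OSD in proportion to its
    weight, so the OSD with the least headroom (relative to its weight)
    limits how much more raw data the whole pool can take."""
    total_weight = sum(o.weight for o in osds)
    raw_limit = min(
        max(0.0, full_ratio * o.size_tb - o.used_tb) / (o.weight / total_weight)
        for o in osds
    )
    return raw_limit / size  # convert raw bytes to client-visible bytes


def pool_percent_used(osds, full_ratio=FULL_RATIO, size=REPLICA_SIZE):
    """Approximate pool %USED as stored / (stored + MAX AVAIL)."""
    stored_tb = sum(o.used_tb for o in osds) / size
    max_avail = pool_max_avail_tb(osds, full_ratio, size)
    return 100.0 * stored_tb / (stored_tb + max_avail)


# Hypothetical: four 1 TB OSDs with equal weight, filled 60-80%
uneven = [Osd(1.0, u, 1.0) for u in (0.60, 0.65, 0.70, 0.80)]
# The same total amount of data, perfectly balanced
even = [Osd(1.0, 0.6875, 1.0) for _ in range(4)]

print(f"uneven: {pool_percent_used(uneven):.0f}% used")
print(f"even:   {pool_percent_used(even):.0f}% used")
```

With the same data stored, the unevenly filled set reports a noticeably higher pool usage, because the 80% OSD caps MAX AVAIL for everyone.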

Sometimes I reduce the reweight of a heavily used OSD (from 1.00 to 0.70) and set it back a few days later.

But is this the normal way to handle it?

Do we have too few OSDs in our Ceph cluster?
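For comparison with my manual 1.00 to 0.70 change, here is a sketch of a proportional reweight rule. It is only similar in spirit to `ceph osd reweight-by-utilization` and is not its exact algorithm; the function name, the `threshold` and `max_change` parameters, and the example utilizations are my own assumptions.

```python
def proposed_reweights(utilization, current=None, threshold=1.20, max_change=0.05):
    """Return {osd_id: new_reweight} for OSDs whose utilization exceeds
    threshold * average utilization.

    Simplified rule: new = old * average / utilization, but never lower the
    reweight by more than max_change in one step, and keep it at most 1.0.
    """
    current = current or {}
    avg = sum(utilization.values()) / len(utilization)
    proposals = {}
    for osd, util in utilization.items():
        if util <= threshold * avg:
            continue  # not overloaded enough to touch
        old = current.get(osd, 1.0)
        target = old * avg / util
        new = max(target, old - max_change)  # limit the step size
        proposals[osd] = round(min(new, 1.0), 4)
    return proposals


# Hypothetical utilization values (fraction of each OSD in use)
util = {0: 0.60, 1: 0.62, 2: 0.65, 3: 0.80}
print(proposed_reweights(util, threshold=1.10))
```

Small steps limit how much data is rebalanced at once; the `ceph balancer` module (for example in upmap mode) is the built-in way to automate this kind of correction.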
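One thing I am unsure about is whether the number of placement groups matters more than the number of OSDs. The sketch below uses the classic rule of thumb of about 100 PG replicas per OSD (the default of `mon_target_pg_per_osd`), rounded up to a power of two, plus a rough Poisson-style estimate of how much the PG count per OSD varies. The pg_num values are hypothetical, size=3 is assumed, and real CRUSH placement is not exactly random.

```python
import math


def suggested_pg_num(num_osds, replica_size, target_pgs_per_osd=100):
    """Rule of thumb: about target_pgs_per_osd PG replicas per OSD,
    rounded up to the next power of two."""
    raw = num_osds * target_pgs_per_osd / replica_size
    return 2 ** math.ceil(math.log2(raw))


def pg_replicas_per_osd(pg_num, replica_size, num_osds):
    """Average number of PG replicas each OSD holds."""
    return pg_num * replica_size / num_osds


def expected_relative_spread(pg_num, replica_size, num_osds):
    """Rough relative standard deviation of PGs per OSD, assuming placement
    behaves like random placement (Poisson: stddev / mean = 1 / sqrt(mean))."""
    return 1 / math.sqrt(pg_replicas_per_osd(pg_num, replica_size, num_osds))


for pg_num in (512, 1024, 2048):  # hypothetical pg_num values for the SSD pool
    per_osd = pg_replicas_per_osd(pg_num, 3, 52)
    spread = expected_relative_spread(pg_num, 3, 52)
    print(f"pg_num={pg_num:5d}: {per_osd:6.1f} PGs/OSD, ~{spread:.0%} spread")

print("suggested pg_num:", suggested_pg_num(52, 3))
```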

What do you think i should do?

I am considering:

- upgrading the big nodes with three 2 TB SSDs (is this possible, given the weighting?)

- expanding the cluster with another node, also with 8 SSDs
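To compare the two options, here is a rough sketch of per-host capacity shares. It assumes CRUSH host weight is proportional to raw capacity (Ceph's default OSD weight is the device size in TiB), that option A means three 2 TB SSDs in *each* of the three big nodes, and that the pool is size=3 with failure domain "host". All names are hypothetical.

```python
# Per-host raw capacity in TB; host weight is assumed proportional to this.
CURRENT = [7, 7, 7, 7, 8, 8, 8]
OPTION_A = [7, 7, 7, 7, 8 + 3 * 2, 8 + 3 * 2, 8 + 3 * 2]  # assumption: three 2 TB SSDs per big node
OPTION_B = CURRENT + [8]                                    # one more node with 8x 1 TB

REPLICA_SIZE = 3  # assumption: size=3, failure domain "host"


def summarize(hosts, size=REPLICA_SIZE):
    total = sum(hosts)
    largest_share = max(hosts) / total
    return {
        "raw_tb": total,
        "usable_tb": total / size,
        "largest_host_share": largest_share,
        # With failure domain "host" every PG keeps at most one copy per host,
        # so a host can only fill at the same rate as the others if its
        # weight share is at most 1/size.
        "host_share_ok": largest_share <= 1 / size,
    }


for name, hosts in (("current", CURRENT), ("option A", OPTION_A), ("option B", OPTION_B)):
    s = summarize(hosts)
    print(f"{name:9s} raw={s['raw_tb']} TB, usable ~{s['usable_tb']:.1f} TB, "
          f"largest host {s['largest_host_share']:.0%}, ok={s['host_share_ok']}")
```

Mixed SSD sizes look possible from a weighting point of view, but a 2 TB OSD would receive roughly twice the PGs, and therefore roughly twice the I/O, of a 1 TB OSD.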

Thanks a lot,

Ronny