Opt-in Linux 6.5 Kernel with ZFS 2.2 for Proxmox VE 8 available on test & no-subscription

- Thread starter t.lamprecht

- Tags

- kernel 6.5 zfs 2.2

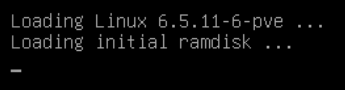

same here as sl4vik..

Dell T140/64GB ECC

I tried adding nomodeset, removing it, adding simplefb, removing it, and updating everything to the latest (BIOS, firmware, PVE).

I posted in another thread but was linked to this one by fabian at Proxmox.

Booting the 6.2 kernel works fine.

Just tested the latest update 6.5.11-6-pve, unfortunately no change and still stuck after init ramdisk :-(

When you say "stuck", can you still SSH into the box, and access the web gui? I initially thought it was hanging, but realised it was just the console.

I've browsed through the suggestions in this thread, but looks like there's no solution yet?

Nope. I cannot even ping the server.

the NIC model in my dell t140 is Broadcom Gigabit Ethernet BCM5720

FWIW, there's a patch for those cards in the 6.5.12 stable kernel that Proxmox does not yet have:

https://lore.kernel.org/all/20231115191636.921055584@linuxfoundation.org/

We'll look into updating to the latest stable kernel relatively soon now that the release freeze is over.

One thing that came to my mind is that it could maybe be a side effect of no longer adding the simplefb module to the initramfs by default, as it caused some trouble on other HW while not really seeming to fix that many issues.

https://git.proxmox.com/?p=proxmox-...ff;h=9c41f9482666a392b80a3c4da3e695c4649d8ee1

To try that out you would do:

Code:

echo "simplefb" >> /etc/initramfs-tools/modules

update-initramfs -u -k 6.5.11-4-pve

# reboot

Any feedback would be appreciated, it could help to improve this in the long run.

This doesn't work for me either (Dell R240).

All 6.5 kernels stuck on loading init ramdisk
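For anyone repeating the simplefb test quoted above more than once: the plain `echo ... >>` appends a new line on every run. A minimal sketch of an idempotent variant follows. The function name `ensure_initramfs_module` is made up for this example and is not part of any Proxmox tooling, and the second argument is only there so the function can be tried against a scratch file.

```shell
#!/bin/sh
# Sketch: add a module to the initramfs module list only if it is not listed yet.
# ensure_initramfs_module is a hypothetical helper name, not a Proxmox tool.
ensure_initramfs_module() {
    module="$1"
    # Default path is the one used in the thread; override it for dry runs.
    modules_file="${2:-/etc/initramfs-tools/modules}"

    # -x matches the whole line, -F treats the name as a fixed string.
    if grep -qxF "$module" "$modules_file" 2>/dev/null; then
        echo "$module already listed in $modules_file"
        return 0
    fi
    echo "$module" >> "$modules_file"
    echo "added $module to $modules_file"
}
```

On a real host this would be followed by the same `update-initramfs -u -k <kernel version>` call and a reboot as in the original suggestion.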

Just to be sure, you did try to ping the Proxmox VE host IP address, and it doesn't reply, even after waiting a few minutes (depending on your HW) to ensure it actually had enough time to boot? A crashing kernel is much noisier most of the time.

My Dell R240 doesn't boot with any 6.5 kernel and it's not a display issue.

It doesn't respond to ping, GUI, ceph or whatever, it's just stuck.

I don't use GPU passthrough either.

This is my setup:

root@pve221:~# uname -a

Linux pve221 6.2.16-19-pve #1 SMP PREEMPT_DYNAMIC PMX 6.2.16-19 (2023-10-24T12:07Z) x86_64 GNU/Linux

root@pve221:~# pveversion -v

proxmox-ve: 8.1.0 (running kernel: 6.2.16-19-pve)

pve-manager: 8.1.3 (running version: 8.1.3/b46aac3b42da5d15)

proxmox-kernel-helper: 8.1.0

pve-kernel-5.19: 7.2-15

proxmox-kernel-6.5: 6.5.11-6

proxmox-kernel-6.5.11-6-pve-signed: 6.5.11-6

proxmox-kernel-6.5.11-5-pve-signed: 6.5.11-5

proxmox-kernel-6.2.16-19-pve: 6.2.16-19

proxmox-kernel-6.2: 6.2.16-19

pve-kernel-5.19.17-2-pve: 5.19.17-2

ceph: 17.2.7-pve1

ceph-fuse: 17.2.7-pve1

corosync: 3.1.7-pve3

criu: 3.17.1-2

glusterfs-client: 10.3-5

ifupdown: residual config

ifupdown2: 3.2.0-1+pmx7

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-4

libknet1: 1.28-pve1

libproxmox-acme-perl: 1.5.0

libproxmox-backup-qemu0: 1.4.1

libproxmox-rs-perl: 0.3.1

libpve-access-control: 8.0.7

libpve-apiclient-perl: 3.3.1

libpve-common-perl: 8.1.0

libpve-guest-common-perl: 5.0.6

libpve-http-server-perl: 5.0.5

libpve-network-perl: 0.9.4

libpve-rs-perl: 0.8.7

libpve-storage-perl: 8.0.5

libqb0: 1.0.5-1

libspice-server1: 0.15.1-1

lvm2: 2.03.16-2

lxc-pve: 5.0.2-4

lxcfs: 5.0.3-pve3

novnc-pve: 1.4.0-3

proxmox-backup-client: 3.0.4-1

proxmox-backup-file-restore: 3.0.4-1

proxmox-kernel-helper: 8.1.0

proxmox-mail-forward: 0.2.2

proxmox-mini-journalreader: 1.4.0

proxmox-offline-mirror-helper: 0.6.3

proxmox-widget-toolkit: 4.1.3

pve-cluster: 8.0.5

pve-container: 5.0.8

pve-docs: 8.1.3

pve-edk2-firmware: 4.2023.08-2

pve-firewall: 5.0.3

pve-firmware: 3.9-1

pve-ha-manager: 4.0.3

pve-i18n: 3.1.2

pve-qemu-kvm: 8.1.2-4

pve-xtermjs: 5.3.0-2

qemu-server: 8.0.10

smartmontools: 7.3-pve1

spiceterm: 3.3.0

swtpm: 0.8.0+pve1

vncterm: 1.8.0

zfsutils-linux: 2.2.0-pve4

This doesn't work for me either (Dell R240).

Yeah, seems those Dell really are affected by something completely different than the missing console output.

I guess your host is using a BCM5720 network card too? As:

FWIW, there's a patch for those cards in the 6.5.12 stable kernel that Proxmox does not yet have:

https://lore.kernel.org/all/20231115191636.921055584@linuxfoundation.org/

We'll look into updating to the latest stable kernel relatively soon now that the release freeze is over.

For now, we added a known issue entry for those Dell + NIC model combos in the 8.1 release notes while we're further investigating.

Hey,

does anybody know if there's an upcoming fix for Adaptec controllers too?

We are struggling right now, because we are running a lot of vm-nodes (Dual Xeon, Debian12 Bullseye running Proxmox latest version) which save their data to RAID5 hardware RAID using Adaptec 8405 controllers. Controllers are on the latest firmware 7.18-0 (33556).

It happens on every system and started directly after installing the latest kernel. It's not a hardware fault.

The problem:

With kernel 6.5.11-5-pve and the latest public stable 6.5.11-6-pve, the system keeps hanging with errors like "aacraid: Outstanding commands on (0,0,0,0):" (full log / errors attached in the log extract below).

Our testing showed that it also happens with the current default Debian 12 kernel, based on 6.1.0-13-amd64, and that it does not happen with older default Debian kernels like 5.10.0-xx (common in the Debian 11 days).

The system freezes for about 60 seconds, then starts working again.

With kernel 6.2.16-19-pve and all older versions that doesn't happen and never happened before.

We were running Proxmox on those hosts for months without any problems, and they became stable and working fine again after downgrading to 6.2.16-19.

That's why I guess something got lost "on the way" in the 6.5.x kernel branch that is not included in the default Linux kernels either? Maybe some patches for Adaptec?

Is there a fix upcoming?

Code:

2023-11-29T16:47:56.293052+01:00 vm-node-4 kernel: [65636.810168] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:56.293054+01:00 vm-node-4 kernel: [65636.811400] aacraid: Host adapter abort request.

2023-11-29T16:47:56.293057+01:00 vm-node-4 kernel: [65636.811400] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:56.293059+01:00 vm-node-4 kernel: [65636.812526] aacraid: Host adapter abort request.

2023-11-29T16:47:56.293063+01:00 vm-node-4 kernel: [65636.812526] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:56.293065+01:00 vm-node-4 kernel: [65636.813648] aacraid: Host adapter abort request.

2023-11-29T16:47:56.293067+01:00 vm-node-4 kernel: [65636.813648] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:57.001190+01:00 vm-node-4 kernel: [65637.518170] aacraid: Host adapter abort request.

2023-11-29T16:47:57.001212+01:00 vm-node-4 kernel: [65637.518170] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:57.001215+01:00 vm-node-4 kernel: [65637.519219] aacraid: Host adapter abort request.

2023-11-29T16:47:57.001219+01:00 vm-node-4 kernel: [65637.519219] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:57.001221+01:00 vm-node-4 kernel: [65637.520467] aacraid: Host adapter abort request.

2023-11-29T16:47:57.001223+01:00 vm-node-4 kernel: [65637.520467] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:57.001225+01:00 vm-node-4 kernel: [65637.521747] aacraid: Host adapter abort request.

2023-11-29T16:47:57.001227+01:00 vm-node-4 kernel: [65637.521747] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:57.001228+01:00 vm-node-4 kernel: [65637.523002] aacraid: Host adapter abort request.

2023-11-29T16:47:57.001230+01:00 vm-node-4 kernel: [65637.523002] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:57.004832+01:00 vm-node-4 kernel: [65637.524199] aacraid: Host adapter abort request.

2023-11-29T16:47:57.004845+01:00 vm-node-4 kernel: [65637.524199] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:47:57.004846+01:00 vm-node-4 kernel: [65637.525395] aacraid: Host adapter abort request.

2023-11-29T16:47:57.004848+01:00 vm-node-4 kernel: [65637.525395] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:48:00.776384+01:00 vm-node-4 kernel: [65641.294182] aacraid: Host adapter abort request.

2023-11-29T16:48:00.776421+01:00 vm-node-4 kernel: [65641.294182] aacraid: Outstanding commands on (0,0,0,0):

2023-11-29T16:48:00.800395+01:00 vm-node-4 kernel: [65641.318329] aacraid: Host bus reset request. SCSI hang ?

2023-11-29T16:48:00.800431+01:00 vm-node-4 kernel: [65641.319346] aacraid 0000:08:00.0: outstanding cmd: midlevel-0

2023-11-29T16:48:00.800434+01:00 vm-node-4 kernel: [65641.319351] aacraid 0000:08:00.0: outstanding cmd: lowlevel-0

2023-11-29T16:48:00.800437+01:00 vm-node-4 kernel: [65641.319354] aacraid 0000:08:00.0: outstanding cmd: error handler-0

2023-11-29T16:48:00.800438+01:00 vm-node-4 kernel: [65641.319357] aacraid 0000:08:00.0: outstanding cmd: firmware-52

2023-11-29T16:48:00.800440+01:00 vm-node-4 kernel: [65641.319360] aacraid 0000:08:00.0: outstanding cmd: kernel-0

2023-11-29T16:48:00.824395+01:00 vm-node-4 kernel: [65641.342232] aacraid 0000:08:00.0: Controller reset type is 3

2023-11-29T16:48:00.824408+01:00 vm-node-4 kernel: [65641.343200] aacraid 0000:08:00.0: Issuing IOP reset

2023-11-29T16:48:30.212394+01:00 vm-node-4 kernel: [65670.730935] aacraid 0000:08:00.0: IOP reset succeeded

2023-11-29T16:48:30.236340+01:00 vm-node-4 kernel: [65670.755676] aacraid: Comm Interface type2 enabled
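To compare how often this happens across kernels, the aacraid messages in a log like the one above can be counted rather than read by eye. The following is only a sketch: `summarize_aacraid` is a hypothetical helper name, and the patterns are taken from the message texts visible in this extract, so other aacraid versions may word them differently.

```shell
#!/bin/sh
# Sketch: summarise aacraid error-handling events from kernel log lines on stdin.
# summarize_aacraid is a hypothetical helper name used only for this example.
summarize_aacraid() {
    awk '
        /aacraid: Host adapter abort request/ { aborts++ }
        /aacraid: Host bus reset request/     { resets++ }
        /aacraid .*IOP reset succeeded/       { recovered++ }
        END {
            # Unset awk counters print as 0 with %d.
            printf "aborts=%d bus_resets=%d iop_resets_ok=%d\n", aborts, resets, recovered
        }
    '
}
```

It could be fed with something like `journalctl -k | summarize_aacraid`, or with a saved kernel log file redirected to stdin, once per booted kernel version.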

There seems to be a bug report here: https://bugzilla.proxmox.com/show_bug.cgi?id=5077

Great okay, will wait for a patch to move to the new kernel. 6.2 is working fine for the time being. Thank you very much!

Tried with kernel 6.5.11-6-pve and it won't boot.

My Mellanox 10G network card won't start either.

root@pve-d340:~# lspci

00:00.0 Host bridge: Intel Corporation 8th Gen Core Processor Host Bridge/DRAM Registers (rev 07)

00:01.0 PCI bridge: Intel Corporation 6th-10th Gen Core Processor PCIe Controller (x16) (rev 07)

00:01.1 PCI bridge: Intel Corporation Xeon E3-1200 v5/E3-1500 v5/6th Gen Core Processor PCIe Controller (x8) (rev 07)

00:08.0 System peripheral: Intel Corporation Xeon E3-1200 v5/v6 / E3-1500 v5 / 6th/7th/8th Gen Core Processor Gaussian Mixture Model

00:12.0 Signal processing controller: Intel Corporation Cannon Lake PCH Thermal Controller (rev 10)

00:14.0 USB controller: Intel Corporation Cannon Lake PCH USB 3.1 xHCI Host Controller (rev 10)

00:14.2 RAM memory: Intel Corporation Cannon Lake PCH Shared SRAM (rev 10)

00:16.0 Communication controller: Intel Corporation Cannon Lake PCH HECI Controller (rev 10)

00:16.4 Communication controller: Intel Corporation Cannon Lake PCH HECI Controller #2 (rev 10)

00:17.0 SATA controller: Intel Corporation Cannon Lake PCH SATA AHCI Controller (rev 10)

00:1c.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #1 (rev f0)

00:1c.1 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #2 (rev f0)

00:1d.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #9 (rev f0)

00:1e.0 Communication controller: Intel Corporation Cannon Lake PCH Serial IO UART Host Controller (rev 10)

00:1f.0 ISA bridge: Intel Corporation Cannon Point-LP LPC Controller (rev 10)

00:1f.4 SMBus: Intel Corporation Cannon Lake PCH SMBus Controller (rev 10)

00:1f.5 Serial bus controller: Intel Corporation Cannon Lake PCH SPI Controller (rev 10)

01:00.0 Ethernet controller: Mellanox Technologies MT27500 Family [ConnectX-3]

02:00.0 RAID bus controller: Broadcom / LSI MegaRAID SAS-3 3008 [Fury] (rev 02)

03:00.0 PCI bridge: PLDA PCI Express Bridge (rev 02)

04:00.0 VGA compatible controller: Matrox Electronics Systems Ltd. Integrated Matrox G200eW3 Graphics Controller (rev 04)

05:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

05:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

06:00.0 Non-Volatile memory controller: Sandisk Corp WD Blue SN570 NVMe SSD 1TB

05:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

05:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

Those are also BCM5720, which seems to be the common property of all affected hosts for now (and Dell is using them a lot it seems).

Just to not miss anything, and because I was briefly confused: do you mean Debian 12 Bookworm? (Bullseye was Debian 11, corresponding to PVE 7.x.)

I guess your host is using a BCM5720 network card too?

I don't think so:

Code:

root@pve221:~# hwinfo --network | grep Driver

Driver: "tg3"

Driver: "ixgbe"

Also, some Linux VMs hang with CPU errors when migrating from kernel 6.5 hosts to 6.2 ones.

Driver: "tg3"

tg3 is a Broadcom driver though, and it is used (among other models) by the BCM5720 model, e.g. check your lspci output...

What errors? And that seems to be another host then (which HW? some details please), as the "Dell R240" one cannot boot with 6.5, or?
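If hwinfo is not installed, the driver behind each interface can also be read from sysfs, which makes it easier to see which NIC actually uses tg3. A minimal sketch, assuming the usual `/sys/class/net/<iface>/device/driver` symlink layout; `list_nic_drivers` is a hypothetical helper name and its optional argument exists only so it can be pointed at a test directory.

```shell
#!/bin/sh
# Sketch: print "<interface> <driver>" for every network interface that is
# backed by a device with a bound kernel driver. list_nic_drivers is a
# hypothetical helper name; the optional argument exists only for testing.
list_nic_drivers() {
    net_dir="${1:-/sys/class/net}"
    for iface in "$net_dir"/*; do
        # Virtual interfaces (lo, bridges, ...) have no device/driver link.
        [ -e "$iface/device/driver" ] || continue
        # The driver link points at the driver directory; its name is the driver.
        driver=$(basename "$(readlink -f "$iface/device/driver")")
        echo "$(basename "$iface") $driver"
    done
}
```

Calling `list_nic_drivers` without arguments on the host prints one line per physical interface, which can then be matched against the lspci output.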

Yes, you're right, there are 2 Broadcom NetXtreme BCM5720, although we are not using them.

We use only the Intel SFP+ ones.

Code:

root@pve221:~# lspci

00:00.0 Host bridge: Intel Corporation 8th/9th Gen Core Processor Host Bridge/DRAM Registers [Coffee Lake] (rev 07)

00:01.0 PCI bridge: Intel Corporation 6th-10th Gen Core Processor PCIe Controller (x16) (rev 07)

00:08.0 System peripheral: Intel Corporation Xeon E3-1200 v5/v6 / E3-1500 v5 / 6th/7th/8th Gen Core Processor Gaussian Mixture Model

00:12.0 Signal processing controller: Intel Corporation Cannon Lake PCH Thermal Controller (rev 10)

00:14.0 USB controller: Intel Corporation Cannon Lake PCH USB 3.1 xHCI Host Controller (rev 10)

00:14.2 RAM memory: Intel Corporation Cannon Lake PCH Shared SRAM (rev 10)

00:16.0 Communication controller: Intel Corporation Cannon Lake PCH HECI Controller (rev 10)

00:16.4 Communication controller: Intel Corporation Cannon Lake PCH HECI Controller #2 (rev 10)

00:17.0 SATA controller: Intel Corporation Cannon Lake PCH SATA AHCI Controller (rev 10)

00:1b.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #21 (rev f0)

00:1c.0 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #1 (rev f0)

00:1c.1 PCI bridge: Intel Corporation Cannon Lake PCH PCI Express Root Port #2 (rev f0)

00:1e.0 Communication controller: Intel Corporation Cannon Lake PCH Serial IO UART Host Controller (rev 10)

00:1f.0 ISA bridge: Intel Corporation Cannon Point-LP LPC Controller (rev 10)

00:1f.4 SMBus: Intel Corporation Cannon Lake PCH SMBus Controller (rev 10)

00:1f.5 Serial bus controller: Intel Corporation Cannon Lake PCH SPI Controller (rev 10)

01:00.0 Non-Volatile memory controller: Samsung Electronics Co Ltd NVMe SSD Controller 172X (rev 01)

02:00.0 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

02:00.1 Ethernet controller: Intel Corporation 82599ES 10-Gigabit SFI/SFP+ Network Connection (rev 01)

03:00.0 PCI bridge: PLDA PCI Express Bridge (rev 02)

04:00.0 VGA compatible controller: Matrox Electronics Systems Ltd. Integrated Matrox G200eW3 Graphics Controller (rev 04)

05:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

05:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

About the VM migrations, they hang when moving from/to any host running kernel 6.5.

In this cluster we have a Dell R620 and a Dell R6515 (running 6.5) and the R240 (running 6.2).

Regards,

Yes, you're right, there are 2 Broadcom NetXtreme BCM5720, although we are not using them.

As you're not using this hardware, can you try if blacklisting the tg3 module is enough to avoid the boot issue?

For example, permanently via:

echo "blacklist tg3" >> /etc/modprobe.d/no-tg3.conf

Alternatively, for just once on boot one could add module_blacklist=tg3 to the kernel command line.

About the VM migrations, they hang when moving from/to any host running kernel 6.5.

Does it involve RCU stall error messages? There was an issue for some systems when migrating towards a host with the 6.5 kernel booted (source kernel version didn't really matter IIRC), which has been fixed in the more recent 6.5.11-5 kernel that moved to the enterprise repository on Thursday. For more details see https://forum.proxmox.com/threads/p...-11-4-rcu_sched-stall-cpu.136992/#post-610193
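When testing the tg3 blacklisting suggested above, it helps to confirm which of the two mechanisms is actually configured before rebooting. The sketch below only inspects configuration, it does not prove the module stayed unloaded. `blacklist_status` is a hypothetical helper name, and the second and third arguments are there purely so it can be run against test files.

```shell
#!/bin/sh
# Sketch: report whether a module is blacklisted, either once via the kernel
# command line (module_blacklist=a,b) or permanently via a modprobe.d entry.
# blacklist_status is a hypothetical helper; arguments 2 and 3 are for testing.
blacklist_status() {
    module="$1"
    cmdline_file="${2:-/proc/cmdline}"
    modprobe_dir="${3:-/etc/modprobe.d}"

    # Split the command line into words, take the module_blacklist= value,
    # split it on commas and look for an exact module name.
    if tr ' ' '\n' < "$cmdline_file" | sed -n 's/^module_blacklist=//p' \
        | tr ',' '\n' | grep -qxF "$module"; then
        echo "cmdline"
    # Otherwise look for a "blacklist <module>" line in any *.conf file.
    elif grep -qsE "^[[:space:]]*blacklist[[:space:]]+$module[[:space:]]*$" "$modprobe_dir"/*.conf; then
        echo "modprobe.d"
    else
        echo "none"
    fi
}
```

`blacklist_status tg3` prints `cmdline`, `modprobe.d` or `none`. One assumption worth checking on the affected hosts: on Debian-based systems the initramfs usually carries its own copy of /etc/modprobe.d, so a newly added blacklist file may only take effect during early boot after `update-initramfs -u` has been run for the kernel in question.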

I've done echo "blacklist tg3" >> /etc/modprobe.d/no-tg3.conf but it still gets stuck on

and it's not a display issue: no ping, no ceph, nothing.

Using kernel 6.2 with the same config it boots ok.

About the VMs problems, this is what happens:

Thanks,

Hi,

I have an R620 with Broadcom BCM5720 and Mellanox ConnectX-4, it's booting fine with 6.5.3 (I'll test other, newer 6.5.x kernels to compare)

(I can't tell if the physical console is working as I only manage them remotely through iDRAC)

Code:

# lspci |grep -i connect

41:00.0 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx]

41:00.1 Ethernet controller: Mellanox Technologies MT27710 Family [ConnectX-4 Lx]

# lspci |grep BCM5720

01:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

01:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

02:00.0 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

02:00.1 Ethernet controller: Broadcom Inc. and subsidiaries NetXtreme BCM5720 Gigabit Ethernet PCIe

# uname -a

Linux formationkvm3 6.5.3-1-pve #1 SMP PREEMPT_DYNAMIC PMX 6.5.3-1 (2023-10-23T08:03Z) x86_64 GNU/Linux