> Kernelchanges should be read at: https://kernelnewbies.org/LinuxChanges

I did link them already in the announcement post though

Edit: seems like @BlueMatt was quicker ;-( Thanks for the direct links, could not find them on kernelnewbies.org

Opt-in Linux 6.17 Kernel for Proxmox VE 9 available on test & no-subscription

- Thread starter t.lamprecht

Gilberto Ferreira

Renowned Member

No issues with nvidia dkms drivers on 6.17:

No Issues with 6.17 and 580.82.07

No issues with 6.17 and 580.95.05

(Genoa 9374f + RTX 6000 96gb)

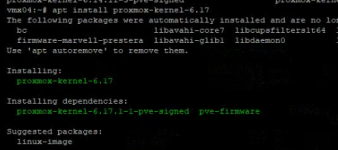

Code:

root@pve-bdr:~# apt install proxmox-headers-6.17

The following packages were automatically installed and are no longer required:

proxmox-headers-6.14.11-2-pve proxmox-headers-6.14.11-3-pve proxmox-kernel-6.14.11-1-pve-signed

Use 'apt autoremove' to remove them.

Installing:

proxmox-headers-6.17

Installing dependencies:

proxmox-headers-6.17.1-1-pve

Summary:

Upgrading: 0, Installing: 2, Removing: 0, Not Upgrading: 0

Download size: 15.3 MB

Space needed: 105 MB / 891 GB available

Continue? [Y/n] Y

Get:1 http://download.proxmox.com/debian/pve trixie/pve-no-subscription amd64 proxmox-headers-6.17.1-1-pve amd64 6.17.1-1 [15.3 MB]

Get:2 http://download.proxmox.com/debian/pve trixie/pve-no-subscription amd64 proxmox-headers-6.17 all 6.17.1-1 [11.1 kB]

Fetched 15.3 MB in 2s (9,621 kB/s)

Selecting previously unselected package proxmox-headers-6.17.1-1-pve.

(Reading database ... 167573 files and directories currently installed.)

Preparing to unpack .../proxmox-headers-6.17.1-1-pve_6.17.1-1_amd64.deb ...

Unpacking proxmox-headers-6.17.1-1-pve (6.17.1-1) ...

Selecting previously unselected package proxmox-headers-6.17.

Preparing to unpack .../proxmox-headers-6.17_6.17.1-1_all.deb ...

Unpacking proxmox-headers-6.17 (6.17.1-1) ...

Setting up proxmox-headers-6.17.1-1-pve (6.17.1-1) ...

Setting up proxmox-headers-6.17 (6.17.1-1) ...

root@pve-bdr:~# apt install proxmox-kernel-6.17 proxmox-headers-6.17

proxmox-headers-6.17 is already the newest version (6.17.1-1).

The following packages were automatically installed and are no longer required:

proxmox-headers-6.14.11-2-pve proxmox-headers-6.14.11-3-pve proxmox-kernel-6.14.11-1-pve-signed

Use 'apt autoremove' to remove them.

Installing:

proxmox-kernel-6.17

Installing dependencies:

proxmox-kernel-6.17.1-1-pve-signed

Summary:

Upgrading: 0, Installing: 2, Removing: 0, Not Upgrading: 0

Download size: 124 MB

Space needed: 989 MB / 891 GB available

Get:1 http://download.proxmox.com/debian/pve trixie/pve-no-subscription amd64 proxmox-kernel-6.17.1-1-pve-signed amd64 6.17.1-1 [124 MB]

Get:2 http://download.proxmox.com/debian/pve trixie/pve-no-subscription amd64 proxmox-kernel-6.17 all 6.17.1-1 [11.4 kB]

Fetched 124 MB in 11s (10.8 MB/s)

Selecting previously unselected package proxmox-kernel-6.17.1-1-pve-signed.

(Reading database ... 195241 files and directories currently installed.)

Preparing to unpack .../proxmox-kernel-6.17.1-1-pve-signed_6.17.1-1_amd64.deb ...

Unpacking proxmox-kernel-6.17.1-1-pve-signed (6.17.1-1) ...

Selecting previously unselected package proxmox-kernel-6.17.

Preparing to unpack .../proxmox-kernel-6.17_6.17.1-1_all.deb ...

Unpacking proxmox-kernel-6.17 (6.17.1-1) ...

Setting up proxmox-kernel-6.17.1-1-pve-signed (6.17.1-1) ...

Examining /etc/kernel/postinst.d.

run-parts: executing /etc/kernel/postinst.d/dkms 6.17.1-1-pve /boot/vmlinuz-6.17.1-1-pve

Sign command: /lib/modules/6.17.1-1-pve/build/scripts/sign-file

Signing key: /var/lib/dkms/mok.key

Public certificate (MOK): /var/lib/dkms/mok.pub

Autoinstall of module nvidia/580.82.07 for kernel 6.17.1-1-pve (x86_64)

Building module(s)........ done.

Signing module /var/lib/dkms/nvidia/580.82.07/build/nvidia.ko

Signing module /var/lib/dkms/nvidia/580.82.07/build/nvidia-modeset.ko

Signing module /var/lib/dkms/nvidia/580.82.07/build/nvidia-drm.ko

Signing module /var/lib/dkms/nvidia/580.82.07/build/nvidia-uvm.ko

Signing module /var/lib/dkms/nvidia/580.82.07/build/nvidia-peermem.ko

Installing /lib/modules/6.17.1-1-pve/updates/dkms/nvidia.ko

Installing /lib/modules/6.17.1-1-pve/updates/dkms/nvidia-modeset.ko

Installing /lib/modules/6.17.1-1-pve/updates/dkms/nvidia-drm.ko

Installing /lib/modules/6.17.1-1-pve/updates/dkms/nvidia-uvm.ko

Installing /lib/modules/6.17.1-1-pve/updates/dkms/nvidia-peermem.ko

Running depmod... done.

Autoinstall on 6.17.1-1-pve succeeded for module(s) nvidia.

run-parts: executing /etc/kernel/postinst.d/initramfs-tools 6.17.1-1-pve /boot/vmlinuz-6.17.1-1-pve

update-initramfs: Generating /boot/initrd.img-6.17.1-1-pve

Running hook script 'zz-proxmox-boot'..

Re-executing '/etc/kernel/postinst.d/zz-proxmox-boot' in new private mount namespace..

Copying and configuring kernels on /dev/disk/by-uuid/8674-D819

Copying kernel and creating boot-entry for 6.14.11-2-pve

Copying kernel and creating boot-entry for 6.14.11-4-pve

Copying kernel and creating boot-entry for 6.17.1-1-pve

Removing old version 6.14.11-3-pve

Copying and configuring kernels on /dev/disk/by-uuid/8695-C91A

Copying kernel and creating boot-entry for 6.14.11-2-pve

Copying kernel and creating boot-entry for 6.14.11-4-pve

Copying kernel and creating boot-entry for 6.17.1-1-pve

Removing old version 6.14.11-3-pve

run-parts: executing /etc/kernel/postinst.d/proxmox-auto-removal 6.17.1-1-pve /boot/vmlinuz-6.17.1-1-pve

run-parts: executing /etc/kernel/postinst.d/zz-proxmox-boot 6.17.1-1-pve /boot/vmlinuz-6.17.1-1-pve

Re-executing '/etc/kernel/postinst.d/zz-proxmox-boot' in new private mount namespace..

Copying and configuring kernels on /dev/disk/by-uuid/8674-D819

Copying kernel and creating boot-entry for 6.14.11-2-pve

Copying kernel and creating boot-entry for 6.14.11-4-pve

Copying kernel and creating boot-entry for 6.17.1-1-pve

Copying and configuring kernels on /dev/disk/by-uuid/8695-C91A

Copying kernel and creating boot-entry for 6.14.11-2-pve

Copying kernel and creating boot-entry for 6.14.11-4-pve

Copying kernel and creating boot-entry for 6.17.1-1-pve

run-parts: executing /etc/kernel/postinst.d/zz-update-grub 6.17.1-1-pve /boot/vmlinuz-6.17.1-1-pve

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-6.17.1-1-pve

Found initrd image: /boot/initrd.img-6.17.1-1-pve

/usr/sbin/grub-probe: error: unknown filesystem.

Found linux image: /boot/vmlinuz-6.14.11-4-pve

Found initrd image: /boot/initrd.img-6.14.11-4-pve

Found linux image: /boot/vmlinuz-6.14.11-3-pve

Found initrd image: /boot/initrd.img-6.14.11-3-pve

Found linux image: /boot/vmlinuz-6.14.11-2-pve

Found initrd image: /boot/initrd.img-6.14.11-2-pve

Found linux image: /boot/vmlinuz-6.14.11-1-pve

Found initrd image: /boot/initrd.img-6.14.11-1-pve

/usr/sbin/grub-probe: error: unknown filesystem.

Found memtest86+ 64bit EFI image: /boot/memtest86+x64.efi

Found memtest86+ 32bit EFI image: /boot/memtest86+ia32.efi

Found memtest86+ 64bit image: /boot/memtest86+x64.bin

Found memtest86+ 32bit image: /boot/memtest86+ia32.bin

Adding boot menu entry for UEFI Firmware Settings ...

done

Setting up proxmox-kernel-6.17 (6.17.1-1) ...

Code:

root@pve-bdr:~# nvidia-smi

Wed Oct 15 07:12:54 2025

+-----------------------------------------------------------------------------------------+

| NVIDIA-SMI 580.95.05 Driver Version: 580.95.05 CUDA Version: 13.0 |

+-----------------------------------------+------------------------+----------------------+

| GPU Name Persistence-M | Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap | Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|=========================================+========================+======================|

| 0 NVIDIA RTX PRO 6000 Blac... On | 00000000:41:00.0 Off | Off |

| 30% 27C P8 6W / 300W | 2MiB / 97887MiB | 0% Default |

| | | N/A |

+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=========================================================================================|

| No running processes found |

+-----------------------------------------------------------------------------------------+

root@pve-bdr:~# uname -a

Linux pve-bdr 6.17.1-1-pve #1 SMP PREEMPT_DYNAMIC PMX 6.17.1-1 (2025-10-06T16:20Z) x86_64 GNU/Linux

Hi,

> Had one issue today, but I'm not sure if it's strictly related to the kernel. Had a Windows 11 VM crash with an "Internal VM error". System logs showed the following. I restarted the Windows 11 VM and so far so good. View attachment 91673

please share the VM configuration `qm config ID`, the output of `pveversion -v` and the output of `lscpu`. Are the latest BIOS updates/CPU microcode installed? Anything additional in the surrounding system logs?

root@neptune:~# qm config 101

agent: 1,fstrim_cloned_disks=1

audio0: device=ich9-intel-hda,driver=spice

balloon: 0

bios: ovmf

boot: order=ide2;scsi0

cores: 6

cpu: x86-64-v3

cpuunits: 150

description: Running windows 11 with Only Office

efidisk0: sata-zfs:vm-101-disk-3,efitype=4m,pre-enrolled-keys=1,size=1M

hostpci0: mapping=B50-SRIOV,pcie=1,x-vga=1

ide2: none,media=cdrom

machine: pc-q35-9.0

memory: 12288

meta: creation-qemu=7.1.0,ctime=1671541357

name: Win-ThinServer

net0: virtio=2E:4B:05:74:1C:AF,bridge=vmbr1,firewall=1,queues=6,tag=5

numa: 0

onboot: 1

ostype: win11

scsi0: local-zfs:vm-101-disk-0,discard=on,iothread=1,size=85G,ssd=1

scsihw: virtio-scsi-single

smbios1: uuid=48d76fbb-0a05-4efc-a48e-e66402df1d80

sockets: 1

spice_enhancements: videostreaming=filter

startup: order=2,up=30,down=60

tablet: 0

tags: ThinClientVM;IOT

tpmstate0: sata-zfs:vm-101-disk-5,size=4M,version=v2.0

vga: qxl2

vmgenid: dd53ac36-3bb1-4017-ac1a-197c4166f202

root@neptune:~# pveversion -v

proxmox-ve: 9.0.0 (running kernel: 6.17.1-1-pve)

pve-manager: 9.0.11 (running version: 9.0.11/3bf5476b8a4699e2)

proxmox-kernel-helper: 9.0.4

proxmox-kernel-6.17.1-1-pve-signed: 6.17.1-1

proxmox-kernel-6.17: 6.17.1-1

proxmox-kernel-6.14.11-4-pve-signed: 6.14.11-4

proxmox-kernel-6.14: 6.14.11-4

proxmox-kernel-6.14.11-2-pve-signed: 6.14.11-2

proxmox-kernel-6.14.8-2-pve-signed: 6.14.8-2

amd64-microcode: 3.20250311.1

ceph-fuse: 19.2.3-pve2

corosync: 3.1.9-pve2

criu: 4.1.1-1

frr-pythontools: 10.3.1-1+pve4

ifupdown2: 3.3.0-1+pmx10

ksm-control-daemon: 1.5-1

libjs-extjs: 7.0.0-5

libproxmox-acme-perl: 1.7.0

libproxmox-backup-qemu0: 2.0.1

libproxmox-rs-perl: 0.4.1

libpve-access-control: 9.0.3

libpve-apiclient-perl: 3.4.0

libpve-cluster-api-perl: 9.0.6

libpve-cluster-perl: 9.0.6

libpve-common-perl: 9.0.11

libpve-guest-common-perl: 6.0.2

libpve-http-server-perl: 6.0.4

libpve-network-perl: 1.1.8

libpve-rs-perl: 0.10.10

libpve-storage-perl: 9.0.13

libspice-server1: 0.15.2-1+b1

lvm2: 2.03.31-2+pmx1

lxc-pve: 6.0.5-1

lxcfs: 6.0.4-pve1

novnc-pve: 1.6.0-3

proxmox-backup-client: 4.0.16-1

proxmox-backup-file-restore: 4.0.16-1

proxmox-backup-restore-image: 1.0.0

proxmox-firewall: 1.2.0

proxmox-kernel-helper: 9.0.4

proxmox-mail-forward: 1.0.2

proxmox-mini-journalreader: 1.6

proxmox-widget-toolkit: 5.0.6

pve-cluster: 9.0.6

pve-container: 6.0.13

pve-docs: 9.0.8

pve-edk2-firmware: 4.2025.02-4

pve-esxi-import-tools: 1.0.1

pve-firewall: 6.0.3

pve-firmware: 3.17-2

pve-ha-manager: 5.0.5

pve-i18n: 3.6.1

pve-qemu-kvm: 10.0.2-4

pve-xtermjs: 5.5.0-2

qemu-server: 9.0.23

smartmontools: 7.4-pve1

spiceterm: 3.4.1

swtpm: 0.8.0+pve2

vncterm: 1.9.1

zfsutils-linux: 2.3.4-pve1

BIOS/system firmware is current. I looked in the logs for anything else that seemed relevant, and around the time frame of the actual VM lockup most of the logs besides the snippet above were actually fairly quiet.
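When comparing reports like the one above, the relevant bits of `pveversion -v` can be pulled out mechanically. This is a minimal Python sketch, assuming the output keeps the `name: version` layout shown in this post. `summarize_pveversion` is a hypothetical helper, not a Proxmox tool, and it only reports what is installed, not whether it is the latest available.

```python
import re


def summarize_pveversion(text: str) -> dict:
    """Extract the running kernel, versioned kernel packages and microcode package."""
    packages = {}
    for line in text.splitlines():
        name, sep, version = line.strip().partition(": ")
        if sep:
            # Keep the first occurrence; some packages are listed twice.
            packages.setdefault(name, version)
    running = re.search(r"running kernel: ([^)]+)\)", packages.get("proxmox-ve", ""))
    kernel_pkg = re.compile(r"proxmox-kernel-\d+\.\d+\.\d+-\d+-pve(-signed)?")
    return {
        "running_kernel": running.group(1) if running else None,
        "installed_kernels": sorted(n for n in packages if kernel_pkg.fullmatch(n)),
        "microcode": {n: v for n, v in packages.items() if n.endswith("-microcode")},
    }


# Sample lines copied from the output posted above.
sample = """\
proxmox-ve: 9.0.0 (running kernel: 6.17.1-1-pve)
pve-manager: 9.0.11 (running version: 9.0.11/3bf5476b8a4699e2)
proxmox-kernel-6.17.1-1-pve-signed: 6.17.1-1
proxmox-kernel-6.17: 6.17.1-1
proxmox-kernel-6.14.11-4-pve-signed: 6.14.11-4
amd64-microcode: 3.20250311.1
"""
summary = summarize_pveversion(sample)
print(summary)
```

On a live host the text could be captured with `subprocess.run(["pveversion", "-v"], capture_output=True, text=True).stdout` instead of the pasted sample.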

> Unix sockets can be files though!
>
> Or use the correct ABI over LLM generated patches as Fiona pointed out at https://forum.proxmox.com/threads/minor-apparmor-problem-with-tor.173419/#post-808186

Hey, so I may have been mistaken about the socket patch.

I was also wrong that the official 6.17.1-1-pve kernel wouldn’t panic with my LXC container. I hadn’t run the container with the issue yet. Today, I ran it and got this kernel panic:

Code:

[12766.116607] audit: type=1400 audit(1760572259.593:465): apparmor="STATUS" operation="profile_load" profile="/usr/bin/lxc-start" name="lxc-109_</var/lib/lxc>" pid=69671 comm="apparmor_parser"

[12766.337421] vmbr0: port 5(veth109i0) entered blocking state

[12766.337428] vmbr0: port 5(veth109i0) entered disabled state

[12766.337445] veth109i0: entered allmulticast mode

[12766.337473] veth109i0: entered promiscuous mode

[12766.372852] eth0: renamed from vethEt7I3x

[12766.589007] vmbr0: port 5(veth109i0) entered blocking state

[12766.589017] vmbr0: port 5(veth109i0) entered forwarding state

[12766.684938] overlayfs: fs on '/var/lib/containers/storage/overlay/compat649633326/lower1' does not support file handles, falling back to xino=off.

[12766.690522] overlayfs: fs on '/var/lib/containers/storage/overlay/metacopy-check1218510262/l1' does not support file handles, falling back to xino=off.

[12766.690558] evm: overlay not supported

[12766.697703] overlayfs: fs on '/var/lib/containers/storage/overlay/opaque-bug-check2441303130/l2' does not support file handles, falling back to xino=off.

[12771.880063] overlayfs: fs on '/var/lib/containers/storage/overlay/l/DE7CQT5UZRNHHMTHIHAJPPW5J7' does not support file handles, falling back to xino=off.

[12771.881015] podman0: port 1(veth0) entered blocking state

[12771.881020] podman0: port 1(veth0) entered disabled state

[12771.881027] veth0: entered allmulticast mode

[12771.881045] veth0: entered promiscuous mode

[12771.881224] podman0: port 1(veth0) entered blocking state

[12771.881228] podman0: port 1(veth0) entered forwarding state

[12772.024553] BUG: kernel NULL pointer dereference, address: 0000000000000018

[12772.024560] #PF: supervisor read access in kernel mode

[12772.024561] #PF: error_code(0x0000) - not-present page

[12772.024562] PGD 0 P4D 0

[12772.024564] Oops: Oops: 0000 [#1] SMP NOPTI

[12772.024567] CPU: 9 UID: 100000 PID: 70233 Comm: crun Tainted: P OE 6.17.1-1-pve #1 PREEMPT(voluntary)

[12772.024570] Tainted: [P]=PROPRIETARY_MODULE, [O]=OOT_MODULE, [E]=UNSIGNED_MODULE

[12772.024571] Hardware name: Gigabyte Technology Co., Ltd. B650 AORUS ELITE AX ICE/B650 AORUS ELITE AX ICE, BIOS F36 07/31/2025

[12772.024572] RIP: 0010:aa_file_perm+0xb7/0x3b0

[12772.024576] Code: 45 31 c9 c3 cc cc cc cc 49 8b 47 20 41 8b 55 10 0f b7 00 66 25 00 f0 66 3d 00 c0 75 1c 41 f7 c4 46 00 10 00 75 13 49 8b 47 18 <48> 8b 40 18 66 83 78 10 01 0f 84 4a 01 00 00 89 d0 f7 d0 44 21 e0

[12772.024578] RSP: 0018:ffffd19ac846b900 EFLAGS: 00010246

[12772.024580] RAX: 0000000000000000 RBX: ffff8b04a9edf080 RCX: ffff8b04af7863c0

[12772.024581] RDX: 0000000000000000 RSI: ffff8b04c21f1d40 RDI: ffffffff848e7547

[12772.024582] RBP: ffffd19ac846b958 R08: 0000000000000000 R09: 0000000000000000

[12772.024583] R10: 0000000000000000 R11: 0000000000000000 R12: 0000000000000000

[12772.024583] R13: ffff8b0a518a9330 R14: ffff8b04a9edf080 R15: ffff8b04af7863c0

[12772.024584] FS: 00007fe77c67a840(0000) GS:ffff8b0c18a06000(0000) knlGS:0000000000000000

[12772.024586] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

[12772.024587] CR2: 0000000000000018 CR3: 00000001134d6000 CR4: 0000000000f50ef0

[12772.024588] PKRU: 55555554

[12772.024589] Call Trace:

[12772.024590] <TASK>

[12772.024592] common_file_perm+0x6c/0x1a0

[12772.024594] apparmor_file_receive+0x42/0x80

[12772.024596] security_file_receive+0x2e/0x50

[12772.024597] receive_fd+0x1d/0xf0

[12772.024599] scm_detach_fds+0xad/0x1c0

[12772.024601] __scm_recv_common.isra.0+0x66/0x180

[12772.024603] scm_recv_unix+0x30/0x130

[12772.024604] ? unix_destroy_fpl+0x49/0xb0

[12772.024606] __unix_dgram_recvmsg+0x2ac/0x450

[12772.024607] unix_seqpacket_recvmsg+0x43/0x70

[12772.024608] sock_recvmsg+0xde/0xf0

[12772.024609] ? ___sys_recvmsg+0xd2/0xf0

[12772.024610] ____sys_recvmsg+0xa0/0x230

[12772.024612] ___sys_recvmsg+0xc7/0xf0

[12772.024613] __sys_recvmsg+0x89/0x100

[12772.024615] __x64_sys_recvmsg+0x1d/0x30

[12772.024617] x64_sys_call+0x630/0x2330

[12772.024618] do_syscall_64+0x80/0xa30

[12772.024620] ? __x64_sys_recvmsg+0x1d/0x30

[12772.024621] ? x64_sys_call+0x630/0x2330

[12772.024622] ? do_syscall_64+0xb8/0xa30

[12772.024623] ? do_user_addr_fault+0x2f8/0x830

[12772.024625] ? irqentry_exit_to_user_mode+0x2e/0x290

[12772.024627] ? irqentry_exit+0x43/0x50

[12772.024628] ? exc_page_fault+0x90/0x1b0

[12772.024630] entry_SYSCALL_64_after_hwframe+0x76/0x7e

[12772.024631] RIP: 0033:0x7fe77c7fe687

[12772.024643] Code: 48 89 fa 4c 89 df e8 58 b3 00 00 8b 93 08 03 00 00 59 5e 48 83 f8 fc 74 1a 5b c3 0f 1f 84 00 00 00 00 00 48 8b 44 24 10 0f 05 <5b> c3 0f 1f 80 00 00 00 00 83 e2 39 83 fa 08 75 de e8 23 ff ff ff

[12772.024645] RSP: 002b:00007ffcd3649520 EFLAGS: 00000202 ORIG_RAX: 000000000000002f

[12772.024646] RAX: ffffffffffffffda RBX: 00007fe77c67a840 RCX: 00007fe77c7fe687

[12772.024647] RDX: 0000000000000000 RSI: 00007ffcd3649570 RDI: 000000000000000a

[12772.024648] RBP: 00007ffcd3649570 R08: 0000000000000000 R09: 0000000000000000

[12772.024649] R10: 0000000000000000 R11: 0000000000000202 R12: 00007ffcd3649bf0

[12772.024650] R13: 0000000000000006 R14: 00007ffcd3649bf0 R15: 000000000000000a

[12772.024652] </TASK>

[12772.024652] Modules linked in: nft_nat nft_ct nft_fib_inet nft_fib_ipv4 nft_fib_ipv6 nft_fib nft_masq nft_chain_nat nf_nat nf_conntrack nf_defrag_ipv6 nf_defrag_ipv4 tcp_diag inet_diag overlay veth ebtable_filter ebtables ip_set ip6table_raw iptable_raw ip6table_filter ip6_tables iptable_filter nf_tables sunrpc binfmt_misc 8021q garp mrp bonding tls nfnetlink_log input_leds hid_apple hid_generic usbmouse usbkbd btusb btrtl btintel btbcm btmtk usbhid bluetooth hid apple_mfi_fastcharge amd_atl intel_rapl_msr intel_rapl_common iwlmvm amdgpu snd_hda_codec_alc662 snd_hda_codec_realtek_lib mac80211 snd_hda_codec_generic libarc4 snd_hda_codec_atihdmi snd_hda_codec_hdmi snd_hda_intel vfio_pci edac_mce_amd vfio_pci_core vfio_iommu_type1 vfio amdxcp iommufd drm_panel_backlight_quirks gpu_sched kvm_amd snd_hda_codec drm_buddy snd_hda_core drm_ttm_helper ttm kvm iwlwifi snd_intel_dspcfg snd_intel_sdw_acpi drm_exec drm_suballoc_helper snd_hwdep irqbypass drm_display_helper snd_pcm polyval_clmulni ghash_clmulni_intel cec snd_timer

[12772.024676] aesni_intel rc_core cfg80211 rapl ccp snd gigabyte_wmi i2c_algo_bit wmi_bmof soundcore mac_hid k10temp pcspkr acpi_pad sch_fq_codel vhost_net vhost vhost_iotlb tap vendor_reset(OE) efi_pstore nfnetlink dmi_sysfs ip_tables x_tables autofs4 zfs(PO) spl(O) btrfs blake2b_generic xor raid6_pq nvme xhci_pci nvme_core ahci r8169 i2c_piix4 xhci_hcd i2c_smbus libahci realtek nvme_keyring nvme_auth video wmi

[12772.024696] CR2: 0000000000000018

[12772.024697] ---[ end trace 0000000000000000 ]---

[12772.121230] RIP: 0010:aa_file_perm+0xb7/0x3b0

[12772.121239] Code: 45 31 c9 c3 cc cc cc cc 49 8b 47 20 41 8b 55 10 0f b7 00 66 25 00 f0 66 3d 00 c0 75 1c 41 f7 c4 46 00 10 00 75 13 49 8b 47 18 <48> 8b 40 18 66 83 78 10 01 0f 84 4a 01 00 00 89 d0 f7 d0 44 21 e0

[12772.121242] RSP: 0018:ffffd19ac846b900 EFLAGS: 00010246

[12772.121244] RAX: 0000000000000000 RBX: ffff8b04a9edf080 RCX: ffff8b04af7863c0

[12772.121245] RDX: 0000000000000000 RSI: ffff8b04c21f1d40 RDI: ffffffff848e7547

[12772.121246] RBP: ffffd19ac846b958 R08: 0000000000000000 R09: 0000000000000000

[12772.121246] R10: 0000000000000000 R11: 0000000000000000 R12: 0000000000000000

[12772.121247] R13: ffff8b0a518a9330 R14: ffff8b04a9edf080 R15: ffff8b04af7863c0

[12772.121248] FS: 00007fe77c67a840(0000) GS:ffff8b0c18a06000(0000) knlGS:0000000000000000

[12772.121250] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

[12772.121250] CR2: 0000000000000018 CR3: 00000001134d6000 CR4: 0000000000f50ef0

[12772.121252] PKRU: 55555554

[12772.121253] note: crun[70233] exited with irqs disabled

[12772.121371] ------------[ cut here ]------------

[12772.121372] Voluntary context switch within RCU read-side critical section!

[12772.121375] WARNING: CPU: 9 PID: 70233 at kernel/rcu/tree_plugin.h:332 rcu_note_context_switch+0x532/0x5a0

[12772.121378] Modules linked in: nft_nat nft_ct nft_fib_inet nft_fib_ipv4 nft_fib_ipv6 nft_fib nft_masq nft_chain_nat nf_nat nf_conntrack nf_defrag_ipv6 nf_defrag_ipv4 tcp_diag inet_diag overlay veth ebtable_filter ebtables ip_set ip6table_raw iptable_raw ip6table_filter ip6_tables iptable_filter nf_tables sunrpc binfmt_misc 8021q garp mrp bonding tls nfnetlink_log input_leds hid_apple hid_generic usbmouse usbkbd btusb btrtl btintel btbcm btmtk usbhid bluetooth hid apple_mfi_fastcharge amd_atl intel_rapl_msr intel_rapl_common iwlmvm amdgpu snd_hda_codec_alc662 snd_hda_codec_realtek_lib mac80211 snd_hda_codec_generic libarc4 snd_hda_codec_atihdmi snd_hda_codec_hdmi snd_hda_intel vfio_pci edac_mce_amd vfio_pci_core vfio_iommu_type1 vfio amdxcp iommufd drm_panel_backlight_quirks gpu_sched kvm_amd snd_hda_codec drm_buddy snd_hda_core drm_ttm_helper ttm kvm iwlwifi snd_intel_dspcfg snd_intel_sdw_acpi drm_exec drm_suballoc_helper snd_hwdep irqbypass drm_display_helper snd_pcm polyval_clmulni ghash_clmulni_intel cec snd_timer

[12772.121409] aesni_intel rc_core cfg80211 rapl ccp snd gigabyte_wmi i2c_algo_bit wmi_bmof soundcore mac_hid k10temp pcspkr acpi_pad sch_fq_codel vhost_net vhost vhost_iotlb tap vendor_reset(OE) efi_pstore nfnetlink dmi_sysfs ip_tables x_tables autofs4 zfs(PO) spl(O) btrfs blake2b_generic xor raid6_pq nvme xhci_pci nvme_core ahci r8169 i2c_piix4 xhci_hcd i2c_smbus libahci realtek nvme_keyring nvme_auth video wmi

[12772.121437] CPU: 9 UID: 100000 PID: 70233 Comm: crun Tainted: P D OE 6.17.1-1-pve #1 PREEMPT(voluntary)

[12772.121440] Tainted: [P]=PROPRIETARY_MODULE, [D]=DIE, [O]=OOT_MODULE, [E]=UNSIGNED_MODULE

[12772.121441] Hardware name: Gigabyte Technology Co., Ltd. B650 AORUS ELITE AX ICE/B650 AORUS ELITE AX ICE, BIOS F36 07/31/2025

[12772.121443] RIP: 0010:rcu_note_context_switch+0x532/0x5a0

[12772.121444] Code: ff 49 89 96 a8 00 00 00 e9 35 fd ff ff 45 85 ff 75 ef e9 2b fd ff ff 48 c7 c7 58 87 7f 84 c6 05 d7 a8 49 02 01 e8 1e 1c f2 ff <0f> 0b e9 23 fb ff ff 4d 8b 74 24 20 4c 89 f7 e8 da ff 01 01 41 c6

[12772.121446] RSP: 0018:ffffd19ac846bc50 EFLAGS: 00010046

[12772.121447] RAX: 0000000000000000 RBX: ffff8b084b1a0000 RCX: 0000000000000000

[12772.121447] RDX: 0000000000000000 RSI: 0000000000000000 RDI: 0000000000000000

[12772.121448] RBP: ffffd19ac846bc78 R08: 0000000000000000 R09: 0000000000000000

[12772.121449] R10: 0000000000000000 R11: 0000000000000000 R12: ffff8b0b9e4b3800

[12772.121450] R13: 0000000000000000 R14: 0000000000000000 R15: ffff8b084b1a0dc0

[12772.121451] FS: 0000000000000000(0000) GS:ffff8b0c18a06000(0000) knlGS:0000000000000000

[12772.121452] CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033

[12772.121453] CR2: 0000000000000018 CR3: 000000032d23a000 CR4: 0000000000f50ef0

[12772.121453] PKRU: 55555554

[12772.121454] Call Trace:

[12772.121455] <TASK>

[12772.121457] __schedule+0xc6/0x1310

[12772.121460] ? try_to_wake_up+0x392/0x8a0

[12772.121462] ? kthread_insert_work+0xb8/0xe0

[12772.121465] schedule+0x27/0xf0

[12772.121466] synchronize_rcu_expedited+0x1c2/0x220

[12772.121467] ? __pfx_autoremove_wake_function+0x10/0x10

[12772.121469] ? __pfx_wait_rcu_exp_gp+0x10/0x10

[12772.121470] namespace_unlock+0x258/0x330

[12772.121474] put_mnt_ns+0x79/0xb0

[12772.121475] free_nsproxy+0x16/0x190

[12772.121477] switch_task_namespaces+0x74/0xa0

[12772.121478] exit_task_namespaces+0x10/0x20

[12772.121479] do_exit+0x2a8/0xa20

[12772.121481] make_task_dead+0x93/0xa0

[12772.121482] rewind_stack_and_make_dead+0x16/0x20

[12772.121483] RIP: 0033:0x7fe77c7fe687

[12772.121497] Code: Unable to access opcode bytes at 0x7fe77c7fe65d.

[12772.121498] RSP: 002b:00007ffcd3649520 EFLAGS: 00000202 ORIG_RAX: 000000000000002f

[12772.121500] RAX: ffffffffffffffda RBX: 00007fe77c67a840 RCX: 00007fe77c7fe687

[12772.121501] RDX: 0000000000000000 RSI: 00007ffcd3649570 RDI: 000000000000000a

[12772.121502] RBP: 00007ffcd3649570 R08: 0000000000000000 R09: 0000000000000000

[12772.121503] R10: 0000000000000000 R11: 0000000000000202 R12: 00007ffcd3649bf0

[12772.121504] R13: 0000000000000006 R14: 00007ffcd3649bf0 R15: 000000000000000a

[12772.121505] </TASK>

[12772.121506] ---[ end trace 0000000000000000 ]---

[12832.037165] rcu: INFO: rcu_preempt detected stalls on CPUs/tasks:

[12832.037172] rcu: Tasks blocked on level-0 rcu_node (CPUs 0-15): P70233/1:b..l

[12832.037177] rcu: (detected by 7, t=60002 jiffies, g=637313, q=27783 ncpus=16)

[12832.037179] task:crun state:D stack:0 pid:70233 tgid:70233 ppid:70229 task_flags:0x40014c flags:0x00004002

[12832.037182] Call Trace:

[12832.037184] <TASK>

[12832.037186] __schedule+0x468/0x1310

[12832.037190] ? try_to_wake_up+0x392/0x8a0

[12832.037192] schedule+0x27/0xf0

[12832.037194] synchronize_rcu_expedited+0x1c2/0x220

[12832.037196] ? __pfx_autoremove_wake_function+0x10/0x10

[12832.037198] ? __pfx_wait_rcu_exp_gp+0x10/0x10

[12832.037199] namespace_unlock+0x258/0x330

[12832.037201] put_mnt_ns+0x79/0xb0

[12832.037203] free_nsproxy+0x16/0x190

[12832.037204] switch_task_namespaces+0x74/0xa0

[12832.037205] exit_task_namespaces+0x10/0x20

[12832.037207] do_exit+0x2a8/0xa20

[12832.037208] make_task_dead+0x93/0xa0

[12832.037209] rewind_stack_and_make_dead+0x16/0x20

[12832.037211] RIP: 0033:0x7fe77c7fe687

[12832.037225] RSP: 002b:00007ffcd3649520 EFLAGS: 00000202 ORIG_RAX: 000000000000002f

[12832.037227] RAX: ffffffffffffffda RBX: 00007fe77c67a840 RCX: 00007fe77c7fe687

[12832.037228] RDX: 0000000000000000 RSI: 00007ffcd3649570 RDI: 000000000000000a

[12832.037229] RBP: 00007ffcd3649570 R08: 0000000000000000 R09: 0000000000000000

[12832.037229] R10: 0000000000000000 R11: 0000000000000202 R12: 00007ffcd3649bf0

[12832.037230] R13: 0000000000000006 R14: 00007ffcd3649bf0 R15: 000000000000000a

[12832.037232] </TASK>

[12833.506107] rcu: INFO: rcu_preempt detected expedited stalls on CPUs/tasks: { P70233 } 61388 jiffies s: 3017 root: 0x0/T

[12833.506115] rcu: blocking rcu_node structures (internal RCU debug):

An LXC container shouldn’t cause a kernel panic, especially since it’s an unprivileged container.

I understand your concern about junk code from LLMs. I don’t even know which one Cursor used. It took me a week to find what caused the NULL in the AppArmor code. Sure, I could’ve disabled apparmor for that container, but that’s not a good security practice. It works fine on 6.14.

If you want to debug this, I’m more than happy to send Proxmox a backup of the container to test. Once the kernel panic happens, the system is unstable.

The patch is only two lines. For more info, see https://forum.proxmox.com/threads/i...-or-6-17-kernel-on-proxmox.172483/post-807443 and just ignore my 2nd patch.

Diff:

diff --git a/security/apparmor/file.c b/security/apparmor/file.c

--- a/security/apparmor/file.c

+++ b/security/apparmor/file.c

@@ -777,6 +777,9 @@ static bool __unix_needs_revalidation(struct file *file, struct aa_label *label

return false;

if (request & NET_PEER_MASK)

return false;

+ /* sock and sock->sk can be NULL for sockets being set up or torn down */

+ if (!sock || !sock->sk)

+ return false;

if (sock->sk->sk_family == PF_UNIX) {

struct aa_sk_ctx *ctx = aa_sock(sock->sk);
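To relate a trace like the one above to a patch like this, it can help to pull the fault address, the faulting symbol and the reliable call frames out of the oops text. This is a rough Python sketch, assuming the dmesg layout shown in this post. `summarize_oops` is a hypothetical helper, not an existing tool. A small fault address such as 0x18 is consistent with reading a struct field through a NULL pointer, but the log alone does not say which pointer was NULL.

```python
import re

# A reliable frame looks like "[12772.024592] common_file_perm+0x6c/0x1a0".
# Frames prefixed with "?" are stack leftovers and are skipped on purpose.
FRAME = re.compile(
    r"^\[\s*\d+\.\d+\][ \t]+([\w.]+)\+0x[0-9a-f]+/0x[0-9a-f]+[ \t]*$", re.M
)


def summarize_oops(log: str) -> dict:
    """Return the fault address, the faulting symbol and the reliable call frames."""
    addr = re.search(r"NULL pointer dereference, address: ([0-9a-f]+)", log)
    rip = re.search(r"RIP: 0010:([\w.]+)\+0x", log)
    return {
        "fault_address": int(addr.group(1), 16) if addr else None,
        "faulting_symbol": rip.group(1) if rip else None,
        "frames": FRAME.findall(log),
    }


# A few lines copied from the trace posted above.
sample_oops = """\
[12772.024553] BUG: kernel NULL pointer dereference, address: 0000000000000018
[12772.024572] RIP: 0010:aa_file_perm+0xb7/0x3b0
[12772.024592] common_file_perm+0x6c/0x1a0
[12772.024594] apparmor_file_receive+0x42/0x80
[12772.024596] security_file_receive+0x2e/0x50
[12772.024597] receive_fd+0x1d/0xf0
[12772.024604] ? unix_destroy_fpl+0x49/0xb0
"""
oops = summarize_oops(sample_oops)
print(hex(oops["fault_address"]), oops["faulting_symbol"], oops["frames"])
```

Here the path runs from `receive_fd` through the AppArmor file hooks into `aa_file_perm`, which is the area the two-line check above touches.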

On 6.17 I am now seeing a whole lotta repeating messages in `dmesg` - hundreds and hundreds of them...

Which appears to be the AppArmor issue as reported here: https://forum.proxmox.com/threads/i...or-6-17-kernel-on-proxmox.172483/#post-807443

I am not having any functional issues, as far as I can tell, but it's definitely a bunch of unnecessary noise...

Code:

[18975.874618] audit: type=1400 audit(1760582475.747:115558): apparmor="DENIED" operation="sendmsg" class="file" namespace="root//lxc-205_<-var-lib-lxc>" profile="rsyslogd" name="/run/systemd/journal/dev-log" pid=195010 comm="systemd-journal" requested_mask="r" denied_mask="r" fsuid=100000 ouid=100000

[18981.907202] kauditd_printk_skb: 83 callbacks suppressed

(I understand the fix may be to add abi <abi/3.0> to an AppArmor profile, but it is not clear to me where/which profile or how to find said profile...)

Hi,

the profile is mentioned in the log line you posted:

... profile="rsyslogd" ...

What guest are you running in the container with ID 205?
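To see which profiles and containers are producing the noise, the denials can be grouped by namespace, profile and target path. This is only a rough sketch: the function name `summarize_denials` is made up for illustration, and it assumes the audit fields look like the log line quoted above (whitespace-separated `key="value"` pairs without spaces inside the values).

```shell
# Hypothetical helper: group AppArmor denials read from stdin.
# Prints one line per (namespace, profile, name) combination with a count,
# most frequent first. Fields missing from a log line are shown as "-".
summarize_denials() {
    awk '
        /apparmor="DENIED"/ {
            ns = "-"; prof = "-"; name = "-"
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^namespace=/)    ns = $i
                else if ($i ~ /^profile=/) prof = $i
                else if ($i ~ /^name=/)    name = $i
            }
            print ns, prof, name
        }' | sort | uniq -c | sort -rn
}

# Usage on the host (reads the kernel ring buffer):
#   dmesg | summarize_denials
```

The namespace field (here `root//lxc-205_<-var-lib-lxc>`) is what identifies the container, and the profile field names the profile to look for inside it.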


Had one issue today, but I'm not sure if it's strictly related to the kernel. Had a Windows 11 VM crash with an "Internal VM error". System logs showed the following. I restarted the Windows 11 VM and so far so good. View attachment 91673

I don't think it's a kernel issue.

In environments with GPU passthrough, only this log is recorded before the system stops.

It doesn't occur on PVE8, but I don't know why, and since no other logs are recorded, I'm at a loss.

Unexplained virtual machine crashes after updating to Proxmox 9.

In this case, the virtual machine will enter an internal-error state.

The issue only occurs in Proxmox 9, not in Proxmox 8.

This does not happen all the time, but it does happen in environments where GPU passthrough is enabled.

If anyone knows the cause or how to troubleshoot this error, I would be grateful if you could let me know.


version

Code:

pveversion

pve-manager/9.0.6/49c767b70aeb6648 (running kernel: 6.14.8-2-pve)

error

Code:

Aug 25 14:13:29 pve1 QEMU[449875]: error: kvm run failed Bad address

Aug 25...

Yep, I'd got as far as working out "rsyslogd" but can't find any specific profile for that in/under /etc/apparmor*

205 is an Ubuntu 24.04 LXC with Jellyfin.

I just installed kernel 6.17 and the headers without any problems.

What I noticed was that my system was using about 15% less memory. I noticed that the ZFS ARC had dropped from 17 GiB to 7 GiB (without any changes). The remaining memory usage dropped from 22.5 GiB to 14 GiB.

System: Terramaster F6-424 MAX, 64 GB DDR5 RAM, 12 x 12th Gen Intel(R) Core(TM) i5-1235U (1 Socket), 2 x 10GB LAN, 2x M2 WD Red SN700 1000GB, 6x GIGASTONE_SSD Enterprise 2TB, UPS
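For anyone who wants to compare ARC usage before and after switching kernels, the current ARC size can be read from the ZFS kstat file. A small sketch, assuming the usual `name type data` layout of `/proc/spl/kstat/zfs/arcstats`; the function name `arc_size_gib` is made up for illustration.

```shell
# Hypothetical helper: print the current ZFS ARC size in GiB.
# Reads the "size" row of arcstats (third column is the value in bytes).
# An alternative file can be passed as the first argument.
arc_size_gib() {
    LC_ALL=C awk '$1 == "size" { printf "%.1f GiB\n", $3 / 1073741824 }' \
        "${1:-/proc/spl/kstat/zfs/arcstats}"
}

# Usage on the host:
#   arc_size_gib
```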



It should be in the container under /etc/apparmor.d/usr.sbin.rsyslogd.

Super, just gonna install it and test. But btw, I'd like to let you know that the pve-firmware package creates bad symlinks for firmware files of Intel iwlwifi cards.

We recently uploaded the 6.17 kernel to our repositories. The current default kernel for the Proxmox VE 9 series is still 6.14, but 6.17 is now an option.

We plan to use the 6.17 kernel as the new default for the Proxmox VE 9.1 release later in Q4.

This follows our tradition of upgrading the Proxmox VE kernel to match the current Ubuntu version until we reach an Ubuntu LTS release, at which point we will only provide newer kernels as an opt-in option. The 6.17 kernel is based on the Ubuntu 25.10 Questing release.

We have run this kernel on some of our test setups over the last few days without encountering any significant issues. However, for production setups, we strongly recommend either using the 6.14-based kernel or testing on similar hardware/setups before upgrading any production nodes to 6.17.

How to install:

- Ensure that either the pve-no-subscription or pvetest repository is set up correctly.
  You can do so via CLI text-editor or using the web UI under Node -> Repositories.
- Open a shell as root, e.g. through SSH or using the integrated shell on the web UI.
- apt update
- apt install proxmox-kernel-6.17
- reboot

Future updates to the 6.17 kernel will now be installed automatically when upgrading a node.
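After the reboot it can be worth confirming that the node actually booted into the new kernel. The following is a minimal sketch rather than an official procedure; `kernel_series` is a made-up helper name, and it assumes version strings of the form `6.17.1-1-pve` as seen in the package names in this thread.

```shell
# Hypothetical helper: reduce a kernel release string such as
# "6.17.1-1-pve" to its series, e.g. "6.17".
kernel_series() {
    version="${1%%-*}"               # strip everything from the first "-"
    echo "$version" | cut -d. -f1,2  # keep major.minor only
}

# Usage after the reboot:
#   if [ "$(kernel_series "$(uname -r)")" = "6.17" ]; then
#       echo "running the opt-in 6.17 kernel"
#   else
#       echo "still on $(uname -r)"
#   fi
```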

Please note:

- The current 6.14 kernel is still supported, and will stay the default kernel until further notice.

- There were many changes, for improved hardware support and performance improvements all over the place.
  For a good overview of prominent changes, we recommend checking out the kernel-newbies site for 6.15, 6.16, and 6.17.

- The kernel is also available on the test and no-subscription repositories of Proxmox Backup Server, Proxmox Mail Gateway, and in the test repo of Proxmox Datacenter Manager.

- The new 6.17 based opt-in kernel will not be made available for the previous Proxmox VE 8 release series.

- If you're unsure, we recommend continuing to use the 6.14-based kernel for now.

Feedback about how the new kernel performs in any of your setups is welcome!

Please provide basic details like CPU model, storage types used, ZFS as root file system, and the like, for both positive feedback or if you ran into some issues, where using the opt-in 6.17 kernel seems to be the likely cause.

I have also installed Proxmox on my laptop for testing purposes and noticed that my wifi card does not work.

I fixed the issue manually, but it would be great if the next version of the package fixed it automatically.


Ah, Ok, thank you - I didn't really realise I should be looking inside the container. Still getting my head around the container <> host relationship, I suppose, as have only recently started with Proxmox.

But - I added abi <abi/3.0>, to the top of /etc/apparmor.d/usr.sbin.rsyslogd in the container, then ran reload-or-restart on the apparmor service, then for good measure rebooted the container.

No change in behaviour, dmesg is still completely full of hundreds/thousands of:

[65656.861041] audit: type=1400 audit(1760651059.654:590680): apparmor="DENIED" operation="sendmsg" class="file" namespace="root//lxc-205_<-var-lib-lxc>" profile="rsyslogd" name="/run/systemd/journal/dev-log" pid=2964609 comm="systemd-journal" requested_mask="r" denied_mask="r" fsuid=100000 ouid=100000

Any other ideas?

Hi,

could you provide more details? What symlinks and how did you fix it?

I don't think it's an ABI issue then. You could allow access with:

Code:

/run/systemd/journal/dev-log r,

in /etc/apparmor.d/usr.sbin.rsyslogd
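A rough sketch of how one might check and apply this inside the container. The helper name `has_rule` is made up for illustration. The rule itself has to go inside the braces of the rsyslogd profile, so it is added by hand here rather than appended by the script; `apparmor_parser -r` then reloads the edited profile.

```shell
# Rule suggested above; it must sit inside the profile's { ... } block.
RULE='/run/systemd/journal/dev-log r,'

# Hypothetical helper: succeed if the given profile file already
# contains the rule (fixed-string match, no regex interpretation).
has_rule() {
    grep -qF -- "$RULE" "$1"
}

# Usage inside the container, after editing the profile by hand:
#   has_rule /etc/apparmor.d/usr.sbin.rsyslogd && \
#       apparmor_parser -r /etc/apparmor.d/usr.sbin.rsyslogd
```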
