I upgraded three PVE nodes to kernel 6.17.2-1-pve, and after the reboot all of them failed to boot.

All nodes are Dell R630s, and every one of them fails to boot the new kernel.

I have to boot 6.14.11-4-pve to recover.

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Address sizes: 46 bits physical, 48 bits virtual

Byte Order: Little Endian

CPU(s): 72

On-line CPU(s) list: 0-71

Vendor ID: GenuineIntel

Model name: Intel(R) Xeon(R) CPU E5-2686 v4 @ 2.30GHz

CPU family: 6

Model: 79

Thread(s) per core: 2

Core(s) per socket: 18

Socket(s): 2

Stepping: 1

CPU(s) scaling MHz: 91%

CPU max MHz: 3000.0000

CPU min MHz: 1200.0000

BogoMIPS: 4600.04

Flags: fpu vme de pse tsc msr pae mce cx8 apic sep mtrr pge mca cmov pat pse36 clflush dts acpi mmx fxsr sse sse2 ss ht tm pbe syscall nx pdpe1gb rdtscp lm constant_tsc arch_perfmon pebs bts rep_good nopl xtopology nonstop_tsc cpuid aperfmperf pni pclmulqdq dtes64 monitor ds_cpl vmx smx est tm2 ssse3 sdbg fma cx16 xtpr pdcm pcid dca sse4_1 sse4_2 x2apic movbe popcnt tsc_deadline_timer aes xsave avx f16c rdrand lahf_lm abm 3dnowprefetch cpuid_fault epb cat_l3 cdp_l3 pti ssbd ibrs ibpb stibp tpr_shadow flexpriority ept vpid ept_ad fsgsbase tsc_adjust bmi1 hle avx2 smep bmi2 erms invpcid rtm cqm rdt_a rdseed adx smap intel_pt xsaveopt cqm_llc cqm_occup_llc cqm_mbm_total cqm_mbm_local dtherm ida arat pln pts vnmi md_clear flush_l1d

Virtualization features:

Virtualization: VT-x

Caches (sum of all):

L1d: 1.1 MiB (36 instances)

L1i: 1.1 MiB (36 instances)

L2: 9 MiB (36 instances)

L3: 90 MiB (2 instances)

NUMA:

NUMA node(s): 2

NUMA node0 CPU(s): 0,2,4,6,8,10,12,14,16,18,20,22,24,26,28,30,32,34,36,38,40,42,44,46,48,50,52,54,56,58,60,62,64,66,68,70

NUMA node1 CPU(s): 1,3,5,7,9,11,13,15,17,19,21,23,25,27,29,31,33,35,37,39,41,43,45,47,49,51,53,55,57,59,61,63,65,67,69,71

Vulnerabilities:

Gather data sampling: Not affected

Ghostwrite: Not affected

Indirect target selection: Not affected

Itlb multihit: KVM: Mitigation: Split huge pages

L1tf: Mitigation; PTE Inversion; VMX conditional cache flushes, SMT vulnerable

Mds: Mitigation; Clear CPU buffers; SMT vulnerable

Meltdown: Mitigation; PTI

Mmio stale data: Mitigation; Clear CPU buffers; SMT vulnerable

Reg file data sampling: Not affected

Retbleed: Not affected

Spec rstack overflow: Not affected

Spec store bypass: Mitigation; Speculative Store Bypass disabled via prctl

Spectre v1: Mitigation; usercopy/swapgs barriers and __user pointer sanitization

Spectre v2: Mitigation; Retpolines; IBPB conditional; IBRS_FW; STIBP conditional; RSB filling; PBRSB-eIBRS Not affected; BHI Not affected

Srbds: Not affected

Tsa: Not affected

Tsx async abort: Mitigation; Clear CPU buffers; SMT vulnerable

Vmscape: Mitigation; IBPB before exit to userspace

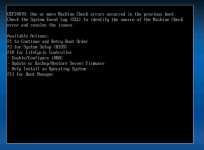

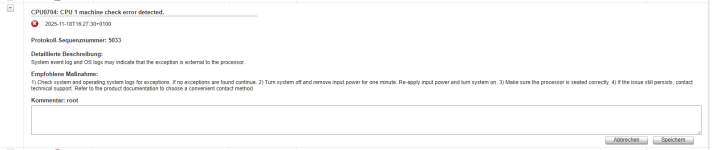

92 | 11/18/2025 | 06:01:15 PM CST | Unknown Additional Info | | Asserted

93 | 11/18/2025 | 06:01:15 PM CST | Processor CPU Machine Chk | Transition to Non-recoverable | Asserted

94 | 11/18/2025 | 06:01:15 PM CST | Unknown MSR Info Log | | Asserted
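
For anyone comparing logs across several nodes, here is a minimal sketch that picks the machine-check record out of event-log lines like the three above. It assumes the pipe-delimited layout shown here (record ID, date, time, sensor, event, state). The names `SelEntry`, `parse_sel` and `machine_checks` are made up for this example and are not part of any tool. The record ID is kept as a string, since I am not sure whether it is decimal or hex.

```python
from dataclasses import dataclass

# The three event-log lines from this post, copied verbatim.
SEL_TEXT = """\
92 | 11/18/2025 | 06:01:15 PM CST | Unknown Additional Info | | Asserted
93 | 11/18/2025 | 06:01:15 PM CST | Processor CPU Machine Chk | Transition to Non-recoverable | Asserted
94 | 11/18/2025 | 06:01:15 PM CST | Unknown MSR Info Log | | Asserted
"""


@dataclass
class SelEntry:
    # Hypothetical container; field names are my own labels for the columns.
    record_id: str
    date: str
    time: str
    sensor: str
    event: str
    state: str


def parse_sel(text):
    """Split each line on '|' and keep only lines with exactly six columns."""
    entries = []
    for line in text.splitlines():
        fields = [field.strip() for field in line.split("|")]
        if len(fields) != 6 or not fields[0]:
            continue  # skip blank or malformed lines
        entries.append(SelEntry(*fields))
    return entries


def machine_checks(entries):
    """Return entries whose sensor column mentions a machine check."""
    return [e for e in entries if "Machine Chk" in e.sensor]


entries = parse_sel(SEL_TEXT)
for entry in machine_checks(entries):
    print(entry.record_id, entry.date, entry.time, entry.event)
```

Running the same filter on the log from each node would show whether all three hit the machine check at the moment the new kernel was booted.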
