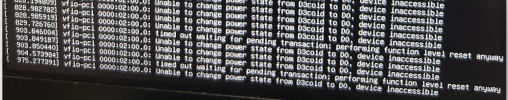

I have the kvm64 CPU setting. On 5.15 I was getting something like this in the log; after 5.19 I don't see it anymore.

[ 141.628962] ------------[ cut here ]------------

[ 141.628965] WARNING: CPU: 7 PID: 3497 at arch/x86/kvm/x86.c:10614 kvm_arch_vcpu_ioctl_run+0x1cb0/0x1df0 [kvm]

[ 141.629027] Modules linked in: veth tcp_diag inet_diag ebtable_filter ebtables ip_set ip6table_raw iptable_raw ip6table_filter ip6_tables iptable_filter bpfilter sctp ip6_udp_tunnel udp_tunnel nf_tables softdog bonding tls nfnetlink_log nfnetlink intel_rapl_msr intel_rapl_common x86_pkg_temp_thermal intel_powerclamp coretemp kvm_intel i915 drm_buddy ttm kvm drm_display_helper crct10dif_pclmul ghash_clmulni_intel cec aesni_intel rc_core crypto_simd cryptd snd_soc_rt5640 mei_hdcp drm_kms_helper mei_pxp snd_soc_rl6231 rapl i2c_algo_bit snd_soc_core fb_sys_fops mei_me syscopyarea sysfillrect intel_cstate at24 sysimgblt mei snd_compress ac97_bus pcspkr snd_pcm_dmaengine efi_pstore snd_pcm zfs(PO) snd_timer snd zunicode(PO) soundcore mac_hid zzstd(O) acpi_pad zlua(O) zavl(PO) icp(PO) zcommon(PO) znvpair(PO) spl(O) vhost_net vhost vhost_iotlb tap ib_iser rdma_cm iw_cm ib_cm ib_core iscsi_tcp libiscsi_tcp libiscsi scsi_transport_iscsi vfio_pci vfio_pci_core vfio_virqfd irqbypass

[ 141.629078] vfio_iommu_type1 vfio drm sunrpc ip_tables x_tables autofs4 btrfs blake2b_generic xor raid6_pq simplefb dm_thin_pool dm_persistent_data dm_bio_prison dm_bufio libcrc32c xhci_pci ahci crc32_pclmul r8169 i2c_i801 xhci_pci_renesas ehci_pci libahci i2c_smbus mpt3sas lpc_ich tg3 raid_class realtek xhci_hcd ehci_hcd scsi_transport_sas video
