Hi all,

I passed a SATA controller (not the onboard one) through to my VM via PCI passthrough and saw a significant performance drop: the write speed fell to 8x MB/s.

But when I mount the disk directly on the PVE host, the write speed goes back to normal. Can someone help me with this issue? Thank you!
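
In case it helps anyone reproduce the comparison, here is a rough sketch of a sequential write test that can be run unchanged inside the VM and on the PVE host. It is not necessarily how the numbers above were obtained. The function name `measure_write_mb_s`, the default sizes, and the `testfile.bin` path are all made up for illustration, and the target path should sit on a disk attached to the passed-through controller.

```python
import os
import sys
import time


def measure_write_mb_s(path, total_mb=256, block_kb=1024):
    """Write total_mb of data to path, fsync it, and return MB/s.

    Hypothetical helper for illustration only; sizes are arbitrary defaults.
    """
    # Random data so a compressing filesystem or drive cannot shortcut the write.
    block = os.urandom(block_kb * 1024)
    blocks = (total_mb * 1024) // block_kb

    start = time.perf_counter()
    with open(path, "wb") as f:
        for _ in range(blocks):
            f.write(block)
        # Flush Python's buffer, then force the data out of the page cache,
        # so the timing reflects the disk rather than RAM.
        f.flush()
        os.fsync(f.fileno())
    elapsed = time.perf_counter() - start

    written_mb = blocks * block_kb / 1024
    return written_mb / elapsed


if __name__ == "__main__":
    # Assumed usage: pass a file path on the disk under test.
    target = sys.argv[1] if len(sys.argv) > 1 else "testfile.bin"
    print(f"{measure_write_mb_s(target):.1f} MB/s")
    os.remove(target)
```

Running the same script with the same arguments on both sides keeps block size and fsync behaviour identical, so any remaining difference is more likely to come from the passthrough path than from the test itself.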

Here is my grub setting:

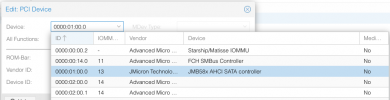

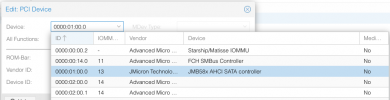

GRUB_CMDLINE_LINUX_DEFAULT="quiet iommu=pt amd_iommu=on drm.debug=0 kvm_amd.nested=1 kvm.ignore_msrs=1 kvm.report_ignored_msrs=0 vfio_iommu_type1.allow_unsafe_interrupts=1"

This is my PCI passthrough setting in the PVE GUI:

Here is dmesg in the VM:

[ 0.148830] pci 0000:06:10.0: [197b:0585] type 00 class 0x010601

[ 0.150356] pci 0000:06:10.0: reg 0x10: [io 0x9200-0x927f]

[ 0.153708] pci 0000:06:10.0: reg 0x14: [io 0x9180-0x91ff]

[ 0.155051] pci 0000:06:10.0: reg 0x18: [io 0x9100-0x917f]

[ 0.156358] pci 0000:06:10.0: reg 0x1c: [io 0x9080-0x90ff]

[ 0.157652] pci 0000:06:10.0: reg 0x20: [io 0x9000-0x907f]

[ 0.158992] pci 0000:06:10.0: reg 0x24: [mem 0xc1600000-0xc1601fff]

[ 0.159811] pci 0000:06:10.0: PME# supported from D3hot

[ 0.756494] ahci 0000:06:10.0: SSS flag set, parallel bus scan disabled

[ 0.757025] ahci 0000:06:10.0: AHCI 0001.0301 32 slots 5 ports 6 Gbps 0x1f impl SATA mode

[ 0.757387] ahci 0000:06:10.0: flags: 64bit ncq sntf stag pm led clo pmp fbs pio slum part ccc apst boh