Hi,

I just upgraded to 7.2 and noticed that my cluster is in a state where:

- my sidebar looks like this:

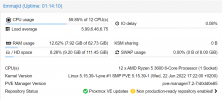

- I can't list or start containers on ibnmajid, the node marked with a "?". VMs seem to work fine, and Ceph looks healthy. Info about that node:

- output of `pvecm status`:

```
root@ganges:~# pvecm status
Cluster information
-------------------
Name:             BrokenWorks
Config Version:   3
Transport:        knet
Secure auth:      on

Quorum information
------------------
Date:             Sat Jul 9 01:39:05 2022
Quorum provider:  corosync_votequorum
Nodes:            3
Node ID:          0x00000002
Ring ID:          1.45b
Quorate:          Yes

Votequorum information
----------------------
Expected votes:   3
Highest expected: 3
Total votes:      3
Quorum:           2
Flags:            Quorate

Membership information
----------------------
    Nodeid      Votes Name
0x00000001          1 192.168.9.10
0x00000002          1 192.168.9.11 (local)
0x00000003          1 192.168.9.12
root@ganges:~#
```
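The quorum numbers in that output look self-consistent. With 3 expected votes, a simple majority is 3 // 2 + 1 = 2, and all 3 votes are present. That suggests corosync membership itself is not what's behind the "?" node. Here is a minimal Python sketch that checks this against the pasted output. It assumes the simple-majority rule and one `Key: value` field per line. The helper names (`parse_fields`, `majority_quorum`) are hypothetical and not part of any Proxmox tool.

```python
import re

# Excerpt of the quorum fields from the `pvecm status` output above.
PVECM_OUTPUT = """\
Expected votes:   3
Highest expected: 3
Total votes:      3
Quorum:           2
Flags:            Quorate
"""


def parse_fields(text: str) -> dict:
    """Collect 'Key: value' lines into a dict (assumes one field per line)."""
    fields = {}
    for line in text.splitlines():
        m = re.match(r"^([A-Za-z ]+):\s+(.*)$", line)
        if m:
            fields[m.group(1).strip()] = m.group(2).strip()
    return fields


def majority_quorum(expected_votes: int) -> int:
    """Assumed simple-majority threshold: more than half of the expected votes."""
    return expected_votes // 2 + 1


fields = parse_fields(PVECM_OUTPUT)
expected = int(fields["Expected votes"])
total = int(fields["Total votes"])
reported_quorum = int(fields["Quorum"])

print(majority_quorum(expected) == reported_quorum)  # True
print(total >= reported_quorum)  # True
```

If both checks print `True`, the cluster has enough votes to be quorate. The "?" status on ibnmajid would then more likely come from something node-local than from corosync membership.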