So you can roughly estimate when it will complete:

In my experience - most zero-data is later on in the

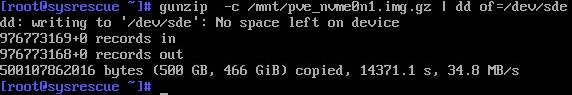

Code:

Time_Taken_So_Far/(Amount_Copied_So_Far/Total_Size) = Expected_Total_Timedd read - so usually its quicker than above. However your error(s) may/will impact the result.
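To make the formula concrete, here is a small Python sketch of the same arithmetic. The function names and the sample numbers are hypothetical, for illustration only. It assumes the copy rate stays roughly constant, which (as noted above) zero-filled regions and read errors will both skew.

```python
def expected_total_time(time_taken_so_far: float,
                        amount_copied_so_far: float,
                        total_size: float) -> float:
    """Expected_Total_Time = Time_Taken_So_Far / (Amount_Copied_So_Far / Total_Size).

    Assumes a roughly constant copy rate; the two sizes just need the same unit.
    """
    if amount_copied_so_far <= 0 or total_size <= 0:
        raise ValueError("need a positive amount copied and total size")
    # Rearranged as elapsed * total / copied: algebraically identical to
    # elapsed / (copied / total), without the intermediate fraction.
    return time_taken_so_far * total_size / amount_copied_so_far


def remaining_time(time_taken_so_far: float,
                   amount_copied_so_far: float,
                   total_size: float) -> float:
    """Time still to go = expected total time minus time already elapsed."""
    total = expected_total_time(time_taken_so_far, amount_copied_so_far, total_size)
    return total - time_taken_so_far


if __name__ == "__main__":
    # Hypothetical progress: 600 s elapsed, 50 GB of a 500 GB disk copied.
    total = expected_total_time(600, 50, 500)
    left = remaining_time(600, 50, 500)
    print(f"expected total: {total:.0f} s, remaining: {left:.0f} s")
```

With those sample numbers one tenth of the disk is done, so the script prints `expected total: 6000 s, remaining: 5400 s`.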