Hi all!

I installed Proxmox in 2021 and it has just worked ever since. My system is PVE 6.4-15, the last release of the 6.4 branch. I know it is obsolete, but for my needs (a home server) it works without any problems. It just sits there and works... I almost forgot I had it. Operation and performance are great, but the hardware...

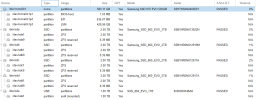

The boot disk (/dev/nvme0n1, a Samsung SSD 970 EVO) is reporting that only 26% "Available Spare" (spare sectors, I presume) is left on it:

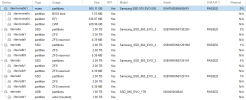

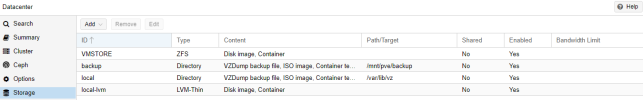

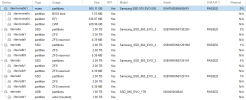

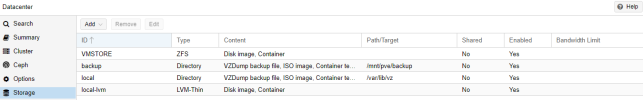
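For anyone who wants to keep an eye on this value from a script, here is a minimal sketch. It assumes smartmontools 7.0 or newer, where `smartctl -j -a /dev/nvme0n1` prints JSON containing a `nvme_smart_health_information_log` object with `available_spare`, `available_spare_threshold` and `percentage_used`; an older smartctl (as may ship with PVE 6.4) might not support `-j`. The "ok/degrading/critical" labels are my own interpretation: NVMe flags a critical warning once Available Spare falls below the drive's threshold.

```python
import json
import subprocess


def spare_status(log):
    """Classify NVMe wear from a smartctl-style health log dict (assumed key names)."""
    spare = log["available_spare"]                # percent of spare blocks remaining
    threshold = log["available_spare_threshold"]  # drive-defined warning level
    used = log.get("percentage_used")             # vendor wear estimate, may exceed 100
    if spare < threshold:
        level = "critical"    # below threshold: the drive sets a critical warning
    elif spare < 100:
        level = "degrading"   # some spare blocks already consumed (my own label)
    else:
        level = "ok"
    return {"spare_pct": spare, "threshold_pct": threshold,
            "used_pct": used, "level": level}


def read_health_log(device):
    """Run smartctl in JSON mode (needs smartmontools >= 7.0 and root)."""
    out = subprocess.run(["smartctl", "-j", "-a", device],
                         capture_output=True, text=True).stdout
    return json.loads(out)["nvme_smart_health_information_log"]


if __name__ == "__main__":
    print(spare_status(read_health_log("/dev/nvme0n1")))
```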

This disk is a system/boot disk only. For VMs I have a separate ZFS raidz pool (4 SSDs in a RAID-like setup) and one additional SSD (connected via USB) just for VM backups. The other disks are healthy and without problems (below). But I believe I need to replace the boot device (/dev/nvme) ASAP.

My plan: buy a new NVMe disk, probably the same model as this one (if that is not a good choice, please suggest a better model), then make a binary (block-level) copy of the current disk onto the new one, put the new disk into the server, and everything should be fine. That would be ideal, but will it work? Are there any problems that can occur during that process? Or what is the best, proven way to replace a Proxmox NVMe system disk?
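To make the "binary duplicate" idea concrete, this is a minimal sketch of what a block-level clone does: copy the source byte for byte onto the start of the target, then re-read the target and compare checksums. The function names and chunk size are hypothetical, and it assumes the source disk is not in use (e.g. the server is booted from a live USB), the target is at least as large as the source, and both paths are ones you have double-checked, since writing to the wrong device destroys its data. With a larger target, the copied partitions keep their original size and the extra space stays unused.

```python
import hashlib
import os

CHUNK = 4 * 1024 * 1024  # 4 MiB per read/write; an arbitrary choice


def device_size(path):
    """Size in bytes; seeking to the end works for block devices and regular files."""
    fd = os.open(path, os.O_RDONLY)
    try:
        return os.lseek(fd, 0, os.SEEK_END)
    finally:
        os.close(fd)


def clone(src, dst, chunk=CHUNK):
    """Copy all of src onto the start of dst; return SHA-256 of the copied bytes."""
    if device_size(dst) < device_size(src):
        raise ValueError("target is smaller than source")
    digest = hashlib.sha256()
    with open(src, "rb") as fin, open(dst, "r+b") as fout:  # r+b: overwrite, don't truncate
        while True:
            block = fin.read(chunk)
            if not block:
                break
            fout.write(block)
            digest.update(block)
        fout.flush()
        os.fsync(fout.fileno())  # push the data out of the OS write cache
    return digest.hexdigest()


def verify(dst, length, expected, chunk=CHUNK):
    """Re-read the first `length` bytes of dst and compare with the clone's hash.

    Note: the read-back may be served from the page cache rather than the disk.
    """
    digest = hashlib.sha256()
    remaining = length
    with open(dst, "rb") as f:
        while remaining > 0:
            block = f.read(min(chunk, remaining))
            if not block:
                break
            digest.update(block)
            remaining -= len(block)
    return remaining == 0 and digest.hexdigest() == expected
```

Usage would look like `h = clone("/dev/nvme0n1", "/dev/nvme1n1")` followed by `verify("/dev/nvme1n1", device_size("/dev/nvme0n1"), h)`, where `/dev/nvme1n1` is a hypothetical name for the new disk.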

Thanks!