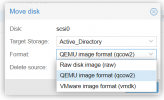

If I store a VM image on a ZFS storage, I only get RAW as an option for the image format.

I assume it's because ZFS supports thin provisioning.

If you want QCOW2, you need to add the storage as "Folder".

Never do this, because qcow2 is copy-on-write and so is ZFS.

Maybe someone uses qcow2 on ZFS for personal reasons, such as temporary use or migration between different storage formats.

Maybe switch to ext4 for my Proxmox drives.

Yes, do that.

What if I use a directory and raw format? Are there any disadvantages to this?

A CoW filesystem on top of a CoW filesystem will kill performance and bring no benefit or features.

You have no snapshots.

Sometimes I need to copy the disk to another machine.

I would use backup/restore.

With a ZFS zvol you will have a raw disk; you just have to read it and transfer it:

    dd if=/dev/rpool/proxmox/vm-100-disk-1 bs=128k | ssh 1.2.3.4 dd bs=128K of=my-disk.raw

Thanks very much.
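For anyone who wants to try the `dd` pipeline before pointing it at a real zvol, here is a minimal local sketch. It assumes a scratch file standing in for the zvol and leaves out the `ssh` hop; the `copy_raw` helper and the file names are made up for illustration and are not part of Proxmox or ZFS.

```shell
# Local dry run of the dd pipeline: a scratch file stands in for the zvol.
# copy_raw is a hypothetical helper, not an existing tool.
copy_raw() {
    # $1 = source (block device or file), $2 = destination file
    dd if="$1" bs=128k 2>/dev/null | dd of="$2" bs=128k 2>/dev/null
}

workdir=$(mktemp -d)
src="$workdir/vm-disk.raw"   # stand-in for /dev/rpool/proxmox/vm-100-disk-1
dst="$workdir/my-disk.raw"

# Create 1 MiB of random data as a fake disk image.
dd if=/dev/urandom of="$src" bs=128k count=8 2>/dev/null

copy_raw "$src" "$dst"

# cmp exits non-zero if the copy differs from the source.
if cmp -s "$src" "$dst"; then
    echo "copy verified"
fi
# Remove "$workdir" when done.
```

On a real transfer the second `dd` runs on the remote side through `ssh`, as in the command above; comparing checksums on both ends afterwards plays the role of the `cmp` check here.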

Both nodes have the same remote storage (marked in the Proxmox GUI to handle only VM backups) from a TrueNAS, which shares that storage (a dataset created on a pool; was there a better way?) via NFS (I didn't use iSCSI because this storage might be used by both nodes at the same time). The connection between each node and the TrueNAS is a 10GbE link with DAC cables.

The best way for remote ZFS is to use ZFS-over-iSCSI, but you have to have support for that. I don't know if that's the case for TrueNAS. I'm running this setup on Debian and it works fine: no complicated setup or anything like that, because the storage is already ZFS-backed (one zvol for each VM), with snapshot capability and configuration-free online migration.

The backups of those VMs are now going to be placed on the remote storage from TrueNAS (a dataset shared via NFS to the specific node), and here lies what I think is the relevant question. When adding a storage (both local and remote) from Datacenter, you are asked what kind of content this storage is going to hold. If you choose Backups, does this mean that Proxmox automatically creates a directory-type storage on top of ZFS in order to keep those backups? So do we have qcow2 instead of raw?

PVE does not see the ZFS, it sees the NFS. You will not have any virtualization-related features like snapshots for this kind of storage.

I am also trying to understand why I would want to convert the VM's disk from its current raw format to a different file format, qcow2. Thoughts?

Snapshots. RAW cannot be snapshotted.
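To make the snapshot point concrete, here is a small sketch using `qemu-img`, assuming it is installed on the machine where you try it. The image names and the snapshot name `before-upgrade` are made up for illustration. It converts a scratch raw image to qcow2 and creates an internal snapshot in the qcow2 file; a raw file has no place to store such a snapshot.

```shell
# Sketch: convert a scratch raw image to qcow2 and take an internal snapshot.
# File names and the snapshot name are illustrative only.
if command -v qemu-img >/dev/null 2>&1; then
    imgdir=$(mktemp -d)
    qemu-img create -f raw "$imgdir/disk.raw" 16M >/dev/null
    qemu-img convert -f raw -O qcow2 "$imgdir/disk.raw" "$imgdir/disk.qcow2"

    # Internal snapshots live inside the qcow2 file itself.
    qemu-img snapshot -c before-upgrade "$imgdir/disk.qcow2"
    qemu-img snapshot -l "$imgdir/disk.qcow2"

    fmt=$(qemu-img info "$imgdir/disk.qcow2" | sed -n 's/^file format: //p')
    echo "converted image format: $fmt"
else
    fmt="skipped"
    echo "qemu-img not installed; skipping"
fi
```

This only covers snapshots stored inside the image file. On a ZFS-backed storage the snapshot is taken by the storage layer instead, which is why the raw zvols there do not need qcow2.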

OK, but NFS is the sharing protocol; on top of or underneath that is ZFS from TrueNAS (SCALE, by the way, so Debian-based).

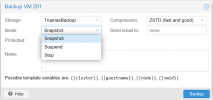

Possibly true, but then again, why do I have the option in the GUI for each VM to back it up to the remote storage (the NFS one) and still be able to choose all available backup options like ... see the screenshot (possibly you are talking about another kind of snapshot in another menu instead of backup?)

So it may work with the integration. It depends on the chosen iSCSI implementation.

Yes, it is unfortunately also called "snapshot", but it is not the same kind as a storage "snapshot". Snapshot in the backup menu means that QEMU creates an internal, temporary snapshot of your virtual disk, backs it up, and then deletes it in order to create a "more consistent" backup. A storage snapshot is created on the storage, can easily be rolled back if needed, and is independent of the state of the VM.

AFAIK no one provides this for Proxmox. It would be possible to write a shim for TrueNAS using their API, but ultimately that would still be an imperfect solution. The only workable solution is LVM on the iSCSI target, unless you're both brave and have unlimited dev time to deploy GFS/OCFS.

I wouldn't say the only one, since yesterday I finished all the tests I wanted. Shared storage (even for backups and not for the VMs themselves) solves many problems when you don't want to have a cluster of 3 (or 2 + 1 voting guy) servers.

Two different applications, although you can get pretty decent speed with qcow2 over NFS (depending on how good your storage subsystem is on the filer). If that works for you, great.
