This is on a fresh PVE 8.0.4 installation on a Hetzner Server.

So this node is in a datacenter. There are no private ip addresses involved. I mention this, because all posts I found are related to private ip addresses.

The ip addresses in this post are changed for privacy:

PVE Node has IP address 167.120.71.34/26

and gateway 167.120.71.1

I have this subnet on this node: 6.8.188.95/27

So I can use ip 6.8.188.95 to 6.8.188.124 for containers and VM.

/etc/network/interfaces on the pve node:

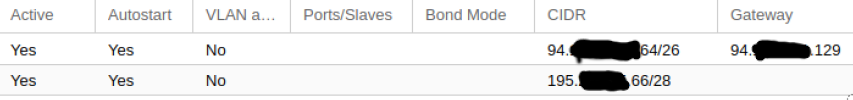

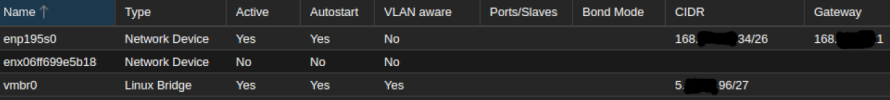

in the GUI the pve host has this network config:

(I tried this also with the CIDR/Gateway addresses on the enp195s0 line and for the vmbr0 a CIDR from the active subnet)

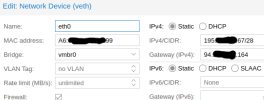

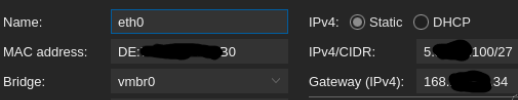

I created a privileged CT with the standard Ubuntu 22.04 Template, nesting activated, and set this on the GUI:

On the node I have all Internet access. But I cannot ping the ip address of the CT. The Firewall for the CT is deactivated.

Inside the Container I can ping its own ip address but not the node nor the gw.

After container creation I saw that there is no /etc/network/interfaces inside the CT. Although this is standard for Ubuntu, I created this file inside the CT:

But this didn't change anything.

I work with proxmox since v2 and the old OpenVZ times, and I have two nodes running old endoflife version 6.4. I am used that such network configurations works out of the box, because there is no special routing or private ip stuff involved.

The node is in a datacenter, and no private IP addresses are involved. I mention this because all the posts I found deal with private IP addresses.

The IP addresses in this post have been changed for privacy.

The PVE node has the IP address 167.120.71.34/26 and the gateway 167.120.71.1.

I have this additional subnet on the node: 6.8.188.95/27

So I can use the IPs 6.8.188.95 to 6.8.188.124 for containers and VMs.
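As a sanity check on the numbers above, the following minimal Python sketch uses the standard `ipaddress` module to show which /27 block an address falls into and which host addresses that block contains. `describe` is a hypothetical helper name chosen for illustration. Because the addresses in this post were changed for privacy, the output only describes the anonymised values and may not reflect the real subnet.

```python
import ipaddress


def describe(cidr: str) -> dict:
    """Summarise the network that an address/prefix string belongs to."""
    iface = ipaddress.ip_interface(cidr)
    net = iface.network                 # the enclosing network, e.g. x.x.x.64/27
    hosts = list(net.hosts())           # usable host addresses (no network/broadcast)
    return {
        "network": str(net),
        "broadcast": str(net.broadcast_address),
        "first_host": str(hosts[0]),
        "last_host": str(hosts[-1]),
        "is_usable_host": iface.ip in hosts,
    }


# The subnet as written in the post, and the address given to the CT.
subnet_info = describe("6.8.188.95/27")
ct_info = describe("6.8.188.100/27")

print(subnet_info)
print(ct_info)
```

With the anonymised values, the subnet address and the CT address land in two different /27 blocks, so it is worth running the same check against the real addresses.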

/etc/network/interfaces on the pve node:

Code:

    auto lo
    iface lo inet loopback

    iface enp195s0 inet manual

    iface enx06ff699e5b18 inet manual

    auto vmbr0
    iface vmbr0 inet static
        address 167.120.71.34/26
        gateway 167.120.71.1
        bridge-ports enp195s0
        # tried also "bridge-ports none"
        bridge-stp off
        bridge-fd 0

In the GUI the PVE host has this network config:

| Name | Type | Active | Autostart | VLAN aware | Ports/Slaves | Bond Mode | CIDR | Gateway | Comment |
|---|---|---|---|---|---|---|---|---|---|
| enp195s0 | Net Device | Yes | No | No | | | | | |
| enx06ff699e5b18 | Net Device | No | No | No | | | | | |
| vmbr0 | Linux Bridge | Yes | Yes | No | enp195s0 | | 167.120.71.34/26 | 167.120.71.1 | |

(I also tried this with the CIDR/gateway addresses on the enp195s0 line and a CIDR from the active subnet on vmbr0.)

I created a privileged CT from the standard Ubuntu 22.04 template, with nesting activated, and set this in the GUI:

Code:

    Name:     eth0          IPv4:           Static
    MAC addr: SO:ME:CO:DE   IPv4/CIDR:      6.8.188.100/27
    Bridge:   vmbr0         Gateway (IPv4): 167.120.71.34

On the node I have full Internet access, but I cannot ping the IP address of the CT. The firewall for the CT is deactivated.

Inside the container I can ping its own IP address, but neither the node nor the gateway.
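One thing that can be checked offline is whether each configured gateway lies inside the subnet of the interface that uses it. A host can normally reach its gateway directly only if the gateway is on-link, unless an explicit host route is added. The sketch below is a minimal check with Python's standard `ipaddress` module. `gateway_on_link` is a hypothetical helper name, and whether an off-link gateway is actually the cause of the problem here is an assumption, not a confirmed diagnosis.

```python
import ipaddress


def gateway_on_link(address_cidr: str, gateway: str) -> bool:
    """Return True if the gateway lies inside the interface's own subnet."""
    iface = ipaddress.ip_interface(address_cidr)
    return ipaddress.ip_address(gateway) in iface.network


# Values taken from the (anonymised) configuration in this post.
node_ok = gateway_on_link("167.120.71.34/26", "167.120.71.1")
ct_ok = gateway_on_link("6.8.188.100/27", "167.120.71.34")

print("node gateway on-link:", node_ok)
print("CT gateway on-link:", ct_ok)
```

For the values in this post the node's gateway is inside the node's /26, while the gateway set for the CT is outside the CT's /27.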

After creating the container I saw that there is no /etc/network/interfaces inside the CT. Although that is standard for Ubuntu, I created this file inside the CT:

Code:

    auto lo
    iface lo inet loopback

    auto eth0
    iface eth0 inet static
        address 6.8.188.100/27
        gateway 167.120.71.34

But this didn't change anything.

I have worked with Proxmox since v2 and the old OpenVZ days, and I have two nodes running the old, end-of-life version 6.4. I am used to such network configurations working out of the box, because there is no special routing or private IP addressing involved.
