On one of my Proxmox systems, NFS storage does not work natively, i.e. when defined as Proxmox storage in storage.cfg.

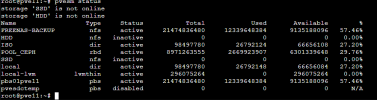

If I mount the shares through /etc/fstab instead, it works perfectly:

Code:

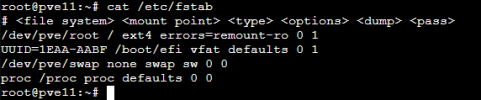

cat /etc/fstab

# <file system> <mount point> <type> <options> <dump> <pass>

/dev/sda1 / ext4 errors=remount-ro 0 1

/dev/sda2 swap swap defaults 0 0

proc /proc proc defaults 0 0

sysfs /sys sysfs defaults 0 0

mynfsserver:/srv/storage/backup/templates /mnt/pve/templates nfs bg,hard,timeo=1200,rsize=1048576,wsize=1048576 0 0

mynfsserver:/srv/storage/backup/pro16 /mnt/pve/backupremote nfs bg,hard,timeo=1200,rsize=1048576,wsize=1048576 0 0
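One difference worth checking: the fstab entries above set no `vers=` option, whereas both NFS entries in the storage.cfg further down force `options vers=3`. If the kernel negotiated NFSv4 for the fstab mounts, they only need TCP port 2049 on the server, while NFSv3 and `showmount` also depend on rpcbind (port 111). The version a working mount actually uses appears as the `vers=` option in `/proc/mounts` (`nfsstat -m` shows the same). A minimal Python sketch, assuming a Linux host; `nfs_mount_versions` is a hypothetical helper, not part of Proxmox:

```python
"""Report the NFS version of each NFS mount in /proc/mounts-style text (Linux)."""


def nfs_mount_versions(mounts_text):
    """Return {mount_point: version} for nfs/nfs4 entries.

    Each line has the form: <source> <mount point> <fstype> <options> <dump> <pass>.
    The version is read from the "vers=" mount option; if that option is
    missing, fall back to "4" for fstype nfs4 and "unknown" otherwise.
    """
    versions = {}
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4 or fields[2] not in ("nfs", "nfs4"):
            continue  # skip blank lines and non-NFS filesystems
        mount_point, fstype, options = fields[1], fields[2], fields[3]
        version = "4" if fstype == "nfs4" else "unknown"
        for opt in options.split(","):
            if opt.startswith("vers="):
                version = opt.split("=", 1)[1]
        versions[mount_point] = version
    return versions
```

On the Proxmox host it would be called as `nfs_mount_versions(open("/proc/mounts").read())`; a result other than `3` for `/mnt/pve/templates` and `/mnt/pve/backupremote` would mean the fstab mounts are not exercising the same protocol path as the `vers=3` storage definitions.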

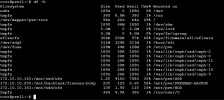

```
root@pro01:~# df -h
Filesystem                                Size  Used Avail Use% Mounted on
udev                                       10M     0   10M   0% /dev
tmpfs                                      26G  770M   25G   3% /run
/dev/sda1                                  20G  2.8G   16G  15% /
tmpfs                                      63G   40M   63G   1% /dev/shm
tmpfs                                     5.0M     0  5.0M   0% /run/lock
tmpfs                                      63G     0   63G   0% /sys/fs/cgroup
tmpfs                                      13G     0   13G   0% /run/user/0
/dev/fuse                                  30M   28K   30M   1% /etc/pve
mynfsserver:/srv/storage/backup/templates  30T   19T  9.4T  67% /mnt/pve/templates
mynfsserver:/srv/storage/backup/pro16      30T   19T  9.4T  67% /mnt/pve/backupremote
```

Here is my storage.cfg:

```
cat /etc/pve/storage.cfg
dir: local
        path /var/lib/vz
        content rootdir,images,vztmpl,iso
        maxfiles 0

nfs: backupremote
        export /srv/storage/backup/pro16
        path /mnt/pve/backupremote
        server mynfsserver
        content backup
        maxfiles 1
        options vers=3

nfs: templates
        export /srv/storage/backup/templates
        path /mnt/pve/templates
        server mynfsserver
        content images,vztmpl,iso
        maxfiles 0
        nodes pro01
        options vers=3

lvm: lvm
        vgname lvm
        content rootdir,images
        shared 1
```

If I try pvesm...

```
pvesm nfsscan IP_or_FDQN_of_NFS_SERVER
clnt_create: RPC: Port mapper failure - Unable to receive: errno 111 (Connection refused)
command '/sbin/showmount --no-headers --exports IP_or_FDQN_of_NFS_SERVER' failed: exit code 1
```

On my firewall, of course, the IP is open.
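Since the error is a refused connection to the port mapper, it may help to confirm from the Proxmox host itself that the NFS server accepts TCP connections on port 111 (rpcbind, which `showmount` and NFSv3 rely on) and on port 2049 (nfsd). "Connection refused" usually means either nothing is listening on that port or a firewall rule is rejecting rather than dropping the packet; a dropped packet would time out instead. A minimal Python sketch; `port_is_open`, `check_nfs_ports` and `NFS_PORTS` are hypothetical names, and a successful TCP connect only shows that something is listening, not that the RPC services are registered (`rpcinfo -p <server>` is the usual command-line check for that):

```python
"""Check whether an NFS server accepts TCP connections on the rpcbind and nfsd ports."""
import socket

# Well-known ports: 111 = rpcbind/portmapper (used by showmount and NFSv3),
# 2049 = nfsd (the only port an NFSv4 mount needs).
NFS_PORTS = {111: "rpcbind", 2049: "nfsd"}


def port_is_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers ECONNREFUSED (errno 111 on Linux), timeouts and name-resolution errors.
        return False


def check_nfs_ports(host, timeout=3.0):
    """Return {port: reachable} for each port in NFS_PORTS."""
    return {port: port_is_open(host, port, timeout) for port in NFS_PORTS}
```

For example, `check_nfs_ports("mynfsserver")` run on pro01 should report both ports as reachable if the firewall really lets this host through; `{111: False, 2049: True}` would be consistent with the fstab mounts working over NFSv4 while `pvesm nfsscan` and the `vers=3` storage entries fail.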