Hey all!

New guy around here. I come from Spain and I've been working in IT for the last 15 years, mainly in Windows and VMware ESXi systems.

Now, thanks to Broadcom, ESXi is out the window, and here I am.

TL;DR: I'm looking for advice on building a storage server to act as a backup repository for my company; this is not a homelab question. I'm debating whether to go with something I know or something new. I can get HPE DL380 G10 or Dell R740xd servers as the foundation of the system, but I'm struggling with the storage adapters and the whole HBA/RAID mess.

Long story:

The company I work for as the IT guy needs to grow its storage capacity urgently. We work with a single file server holding 20 TB of data, and I need to back that thing up.

So I've proposed a new server to act as a repository, built around Veeam and its hardened repository. This will be in the realm of 60-80 TB.

Now, Veeam runs on Windows and the hardened repository runs on Linux, so I need to virtualize the thing: one VM for the Windows Server machine that will hold the Veeam software, and another one for the Linux repository.

So issue 1: use Proxmox or use Hyper-V. I'm inclined to go with Proxmox given the good reviews around, but it's new to me.

If it were Hyper-V, I'd know how to proceed: RAID card, create volumes, store the VMs in the different pools (SSDs for OS, HDDs for storage), and call it a day.

With Proxmox, I've read hundreds of posts about the HBA/RAID controller issue and I'm at a loss. I need to buy this thing from one place, not DIY it with components from eBay. And here comes issue 2.

Issue 2: If I buy the server with a RAID controller, I don't know whether I can still try the Proxmox route, but if I buy the server with an HBA, I know for sure that I can't make it work with Windows Hyper-V. Yes, I know that Windows can do dynamic disks, but I'd rather sit on a kerosene barrel with a candle.

On the HPE side, I have the HPE P816i-a (4 GB cache) controller.

On the Dell side, I have the HBA330 and the H740p (8 GB cache).

I might be able to throw in an LSI SAS 9305-16i HBA, but again, I need to know how it is going to work.

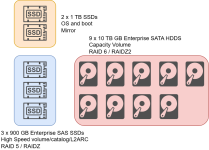

Both options will be populated with SATA HDDs for capacity and at least 2 SSDs for the OS, swap, etc. This will be set up with double parity, either RAID6 or RAIDZ2.

The rest of the parts list is similar in both cases: 1 or 2 CPUs with fewer than 16 cores in total, at least 128 GB of ECC RAM, and 9 or 10 x 10 TB drives.
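
As a quick sanity check of those drive counts against the 60-80 TB target, here is a minimal sketch of the double-parity arithmetic. It assumes a single RAID6 array or a single RAIDZ2 vdev, where two drives' worth of space goes to parity, and it ignores filesystem, ZFS metadata, and padding overhead, so real usable space will be somewhat lower. The function names are just for illustration.

```python
TB = 10**12   # decimal terabyte, as printed on drive labels
TIB = 2**40   # binary tebibyte, as most operating systems report


def usable_capacity_tb(drive_count: int, drive_size_tb: float,
                       parity_drives: int = 2) -> float:
    """Usable capacity in TB for one parity group (RAID6 / RAIDZ2 by default).

    Assumption: every drive is the same size and all drives belong to a
    single array or vdev. Filesystem overhead is not accounted for.
    """
    if drive_count <= parity_drives:
        raise ValueError("need more drives than parity drives")
    return (drive_count - parity_drives) * drive_size_tb


def tb_to_tib(capacity_tb: float) -> float:
    """Convert decimal terabytes to binary tebibytes."""
    return capacity_tb * TB / TIB


if __name__ == "__main__":
    for drives in (9, 10):
        usable = usable_capacity_tb(drives, 10)
        print(f"{drives} x 10 TB, double parity: "
              f"{usable:.0f} TB (~{tb_to_tib(usable):.1f} TiB) before overhead")
```

Under those assumptions, 9 drives give 70 TB and 10 drives give 80 TB before overhead, so both configurations land inside the 60-80 TB range on paper.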

So right now, I'm leaning towards the Dell H740p option based on some posts: https://forum.proxmox.com/threads/using-raid-in-hba-mode-or-remove-raid.163468/ and https://www.truenas.com/community/threads/hp-dell-hba-discussion-again.114252/post-791458

Note that I will not be running TrueNAS (although I'd like to) and that no hardware has been purchased yet.

Any pointers will be much appreciated.

Thank you all and apologies for this long introduction.
