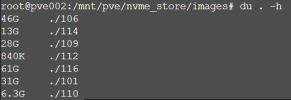

I just imported three VMs overnight. While it looked like it was going to import them as thick-provisioned disks, when I looked in the folder the images sizes were small, indicating they were provisioned as thin drives. Each of these is a 256 GB thin-provisioned drive, but here is a screenshot of the actual sizes on disk:Hi all,

I only use thin disk on my ESXi. Is the import wizard converting that in a way that the disks will be still thin provisioned?

Thanks

Hope that helps!
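To check this yourself instead of relying on the folder view, you can compare a disk image's apparent size with the space actually allocated for it. Below is a minimal sketch that assumes the imported disks are stored as regular files on a Linux/POSIX filesystem (for example raw or qcow2 image files). It does not apply to block-device storage such as LVM volumes, and the function names are just illustrative. It relies on `os.stat`, where `st_blocks` is counted in 512-byte units:

```python
import os
import sys


def disk_usage(path: str) -> tuple[int, int]:
    """Return (apparent_bytes, allocated_bytes) for a file.

    Assumes a POSIX system: st_blocks counts 512-byte units and is
    not available on Windows.
    """
    st = os.stat(path)
    return st.st_size, st.st_blocks * 512


def looks_thin(path: str) -> bool:
    """True if less space is allocated on disk than the file's apparent size."""
    apparent, allocated = disk_usage(path)
    return allocated < apparent


if __name__ == "__main__":
    # Usage: python check_thin.py /path/to/disk1.img /path/to/disk2.img
    for p in sys.argv[1:]:
        apparent, allocated = disk_usage(p)
        print(
            f"{p}: apparent {apparent / 2**30:.1f} GiB, "
            f"allocated {allocated / 2**30:.1f} GiB, thin={allocated < apparent}"
        )
```

A 256 GB thin disk that holds only a few GB of data should show a large apparent size but a much smaller allocated size. A thick (fully allocated) disk shows the two as roughly equal.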