Hi Folks,

I have a network performance issue on a 3-node PVE 8.4 cluster (all updates installed).

The hosts use Intel XL710-QDA2 adapters, which are dual-port 40Gb QSFP+ NICs, connected through an HPE 5700 switch that supports 40Gb connections.

The card firmware has been updated to the latest version, as you can see in the ethtool output below.

The driver in use is i40e.

root@pve-h1:~# ethtool -i ens817f1np1

driver: i40e

version: 6.8.12-13-pve

firmware-version: 9.54 0x8000fb7f 1.3800.0

expansion-rom-version:

bus-info: 0000:98:00.1

supports-statistics: yes

supports-test: yes

supports-eeprom-access: yes

supports-register-dump: yes

supports-priv-flags: yes

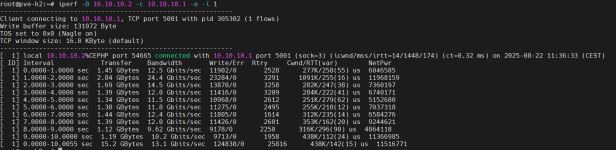

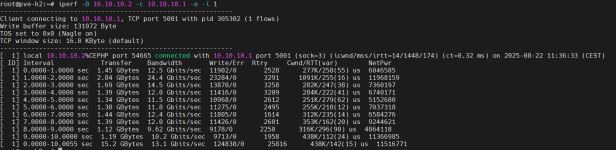

I tested the performance with iperf from node 2 to node 1 over the 40Gb network, which is used as the Ceph public network.

The iperf output shows a lot of retries.

In theory I should get about 5 GB/s (40 Gb/s), but I'm actually seeing only 20-25% of that.
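For reference, here is the arithmetic behind those numbers as a rough sketch. It assumes decimal units and ignores protocol overhead, and the helper names `gbit_to_mbyte` and `percent_of` are only made up for this illustration:

```shell
#!/bin/sh
# Convert a link speed in Gbit/s to MB/s (decimal units, 8 bits per byte).
gbit_to_mbyte() {
    echo $(( $1 * 1000 / 8 ))
}

# Return the given percentage of a value (integer arithmetic).
percent_of() {
    echo $(( $1 * $2 / 100 ))
}

line_rate=$(gbit_to_mbyte 40)        # nominal 40 Gbit/s link, in MB/s
low=$(percent_of "$line_rate" 20)    # lower end of what I observe
high=$(percent_of "$line_rate" 25)   # upper end of what I observe

echo "line rate: ${line_rate} MB/s"
echo "observed:  ${low}-${high} MB/s (about $(( low * 8 / 1000 ))-$(( high * 8 / 1000 )) Gbit/s)"
```

So 20-25% of the nominal rate works out to roughly 1.0-1.25 GB/s, which is about what a single 10Gb link would deliver.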

Any clue please?

Regards

Andrea
