Hello, yesterday I ran into a very strange problem. Possibly these were two completely unrelated events, but you should still know about them.

The problem is that a dist-upgrade from Proxmox 7 to Proxmox 8 completely broke the server's BIOS. I think it could have been the Mortar hooks writing incorrect data into the NVRAM.

The second issue is that Proxmox's Linux kernel 6.5 does not boot on a Dell R240, but this might be unrelated to the main problem.

Here is the full story:

I upgraded Proxmox 7 to Proxmox 8, and after a reboot the server did not boot because its BIOS was broken, reporting a problem with DXE.

The automated BIOS recovery did not succeed.

After the datacenter engineers updated the BIOS to the latest version (and cleared the NVRAM), I rebooted the server again, but the OS still did not boot: kernel 6.5.13-1-pve hangs on loading the initial RAM disk.

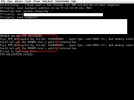

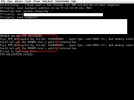

This is what it looked like when I was trying to boot the server via GRUB:

However, the older kernels booted fine, for example 5.15.83-1-pve or 5.15.143-1-pve.

After I determined that the old kernels boot fine, I started setting up Mortar from scratch: I disabled Secure Boot in the BIOS settings, cleared the default Microsoft/Dell/whatever keys, cleared the TPM, set up my own Secure Boot keys with Mortar again, and tried to boot Proxmox 8 with the latest kernel. The boot hung again on loading the initial RAM disk.

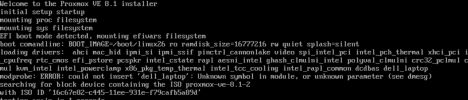

This is what it looked like when I was trying to boot a signed EFI image:

After messing with Secure Boot and the TPM for the whole evening, clearing the TPM keys, (re)setting Secure Boot and (re)signing kernels, I finally gave up on Mortar, as I wasn't able to boot even the older kernels, and set up Secure Boot following the Proxmox manual:

https://pve.proxmox.com/wiki/Secure_Boot_Setup#Setup_instructions_for_db_key_variant
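When resetting Secure Boot repeatedly like this, it helps to confirm from the booted OS whether Secure Boot is actually active. A minimal Python sketch, assuming a UEFI boot with efivarfs mounted at /sys/firmware/efi/efivars (the usual location); the function name `secure_boot_enabled` is hypothetical. It reads the firmware's `SecureBoot` variable, whose efivarfs file starts with a 4-byte attribute field followed by the one-byte value:

```python
from pathlib import Path

# EFI_GLOBAL_VARIABLE vendor GUID, under which the firmware publishes SecureBoot
GLOBAL_GUID = "8be4df61-93ca-11d2-aa0d-00e098032b8c"


def secure_boot_enabled(efivars_dir="/sys/firmware/efi/efivars"):
    """Return True/False from the SecureBoot variable, or None if it can't be read."""
    var = Path(efivars_dir) / f"SecureBoot-{GLOBAL_GUID}"
    try:
        data = var.read_bytes()
    except OSError:
        # Legacy BIOS boot, efivarfs not mounted, permission problem, etc.
        return None
    if len(data) < 5:
        # Expect 4 attribute bytes plus at least 1 data byte
        return None
    # Byte 4 is the variable's value: 1 = enabled, 0 = disabled
    return data[4] == 1


if __name__ == "__main__":
    print(secure_boot_enabled())
```

If `mokutil` is installed, `mokutil --sb-state` reports the same state.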

I installed and signed Linux kernel 6.5.13-1-pve, but the server again would not boot; the boot process hung on loading the initial RAM disk. So it seems that Proxmox's build of Linux 6.5.13-1-pve is incompatible with the Dell R240 server.

I installed kernel 6.2.16-20-pve, signed it, and the server booted fine.

Now I get this on every boot:

Ignore the "header validation succeeded" and "TPM validation failed" messages: I removed the Mortar scripts from "/etc/kernel/postinst.d" but forgot to remove them from "/etc/initramfs-tools/scripts/local-top".
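To make sure no Mortar leftovers remain in either hook directory, a small scan can help. A minimal Python sketch, assuming the Mortar hook files contain "mortar" somewhere in their names (a hypothetical pattern; adjust it to whatever names the scripts actually have); `find_leftover_hooks` is a hypothetical helper:

```python
from pathlib import Path

# The two hook directories mentioned above
HOOK_DIRS = (
    "/etc/kernel/postinst.d",
    "/etc/initramfs-tools/scripts/local-top",
)


def find_leftover_hooks(hook_dirs=HOOK_DIRS, pattern="mortar"):
    """Return paths of files whose name contains `pattern` (case-insensitive)."""
    found = []
    for d in hook_dirs:
        p = Path(d)
        if not p.is_dir():
            # Skip directories that don't exist on this system
            continue
        for f in sorted(p.iterdir()):
            if f.is_file() and pattern in f.name.lower():
                found.append(str(f))
    return found


if __name__ == "__main__":
    for path in find_leftover_hooks():
        print(path)
```

Since initramfs-tools copies the scripts from /etc/initramfs-tools/scripts into the image when it is built, removing a leftover script probably only takes effect after regenerating the initramfs (for example with `update-initramfs -u -k all`).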

The most important message here is the one about NVRAM. When I saw it, I immediately recalled the initial BIOS problem after the dist-upgrade from Proxmox 7 to 8.

Could the Mortar hooks that ran during the dist-upgrade have written something into the NVRAM that completely broke the BIOS?

My server has TPM version 1.2; possibly this was the reason.
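To compare the production server and a test server, the TPM version the kernel sees can be read from sysfs. A minimal Python sketch, assuming the running kernel exposes the `tpm_version_major` attribute under /sys/class/tpm (newer kernels do; on older ones the value comes back as None); `tpm_major_versions` is a hypothetical helper:

```python
from pathlib import Path


def tpm_major_versions(tpm_class_dir="/sys/class/tpm"):
    """Map each TPM device (tpm0, ...) to its major version (1 or 2), or None."""
    result = {}
    base = Path(tpm_class_dir)
    if not base.is_dir():
        # No TPM driver loaded, or no TPM present at all
        return result
    for dev in sorted(base.iterdir()):
        attr = dev / "tpm_version_major"
        try:
            result[dev.name] = int(attr.read_text().strip())
        except (OSError, ValueError):
            # Attribute missing (older kernel) or unreadable
            result[dev.name] = None
    return result


if __name__ == "__main__":
    print(tpm_major_versions())
```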

I cannot experiment further with this server, as it is a production server rented from a remote datacenter. However, I have another Dell R240 locally and can experiment with it once I have some spare time. Please share your thoughts and suggestions on what I should check on the local server.

Unfortunately, the local server has TPM 2.0; I will try to buy and install a TPM 1.2 module for an experiment.

If anyone else has a Dell R240 server, please check whether you can successfully boot Linux kernel 6.5.13-1-pve.