Meltdown and Spectre Linux Kernel fixes

- Thread starter martin

- Start date


Which host CPU, Intel, ARM or AMD?

More detail. Now it's public.

Following the embargo lifting, the official proof of concept for Spectre 2 from Google was disclosed.

https://bugs.chromium.org/p/project-zero/issues/detail?id=1272

https://news.ycombinator.com/item?id=16107691

Following analysis, we believe the PoC only works on a specific software configuration.

Not nice at all, as Spectre 2 is not mitigated in most software.

Warning: kernel 4.4.0-108 is buggy. It still has a boot problem.

https://bugs.launchpad.net/ubuntu/+source/linux/+bug/1742323


Only a minor diff, related to disk UUIDs:

diff /boot/grub/grub.cfg /root/grub103.cfg

https://i.imgur.com/jbg1WHK.png

https://i.imgur.com/wxBJi4N.png

https://i.imgur.com/GyKbZxJ.png

Also both servers boot in BIOS mode

103

# [ -d /sys/firmware/efi ] && echo UEFI || echo BIOS

BIOS

102

# [ -d /sys/firmware/efi ] && echo UEFI || echo BIOS

BIOS
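If the only expected difference between two GRUB configurations is the disk UUIDs, it can help to mask the UUIDs before diffing so that anything else stands out. The following is a minimal sketch, not something used in the posts above; the function names `mask_uuids` and `diff_ignoring_uuids` are made up for illustration, and it only masks UUIDs in the usual 8-4-4-4-12 hexadecimal form (shorter IDs, such as those of FAT partitions, are left untouched).

```shell
#!/bin/sh
# Replace every UUID of the form 8-4-4-4-12 hex digits with a fixed token,
# so two grub.cfg files can be compared without UUID noise.
mask_uuids() {
    sed -E 's/[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}/<UUID>/g' "$1"
}

# Print the remaining differences between two files.
# Exit status 0 means "identical apart from UUIDs", 1 means "other differences".
diff_ignoring_uuids() {
    a=$(mktemp) && b=$(mktemp) || return 2
    mask_uuids "$1" > "$a"
    mask_uuids "$2" > "$b"
    status=0
    diff "$a" "$b" || status=$?
    rm -f "$a" "$b"
    return "$status"
}

# Example call, using the paths from the post above:
# diff_ignoring_uuids /boot/grub/grub.cfg /root/grub103.cfg
```

No output and exit status 0 would mean the two configurations differ only in their UUIDs.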


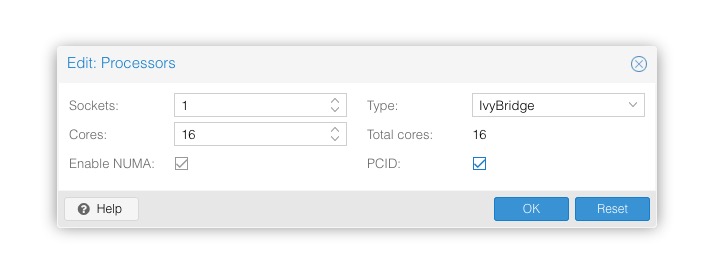

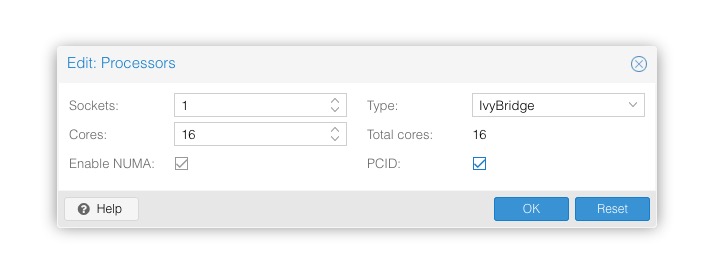

qemu-server and pve-manager packages just got updated (up to pve-no-subscription) to allow passing through the PCID CPU flag to VMs for speeding up KPTI, see the admin guide for details:


PCID Flag

The PCID CPU flag helps to improve performance of the Meltdown vulnerability mitigation approach. In Linux the mitigation is called Kernel Page-Table Isolation (KPTI), which effectively hides the kernel memory from user space, which, without PCID, is an expensive operation.

There are two requirements to reduce the cost of the mitigation:

- The host CPU must support PCID and propagate it to the guest’s virtual CPU(s)

- The guest Operating System must be updated to a version which mitigates the attack and utilizes the PCID feature marked by its flag.

To check if the Proxmox VE host supports PCID, execute the following command as root:

# grep ' pcid ' /proc/cpuinfo

If this does not return empty, your host’s CPU has support for PCID. If you use ‘host’ as CPU type and the guest OS is able to use it, you’re done. Otherwise, the PCID CPU flag needs to be set for the virtual CPU. This can be done, for example, by editing the CPU through the WebUI.

Note that you need to shutdown and start (not reboot from within!) the VM for the changes to apply.
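As a slightly more defensive variant of the grep check, the sketch below matches `pcid` as a whole word on the `flags` line only, so it does not trip over `invpcid` and still works if `pcid` happens to be the last flag on the line. The function name `has_cpu_flag` is hypothetical; the same check can be run on the host and, after a full shutdown and start, inside the guest.

```shell
#!/bin/sh
# Succeed if the named flag is listed as a whole word on a "flags" line.
# The second argument is optional and defaults to /proc/cpuinfo.
has_cpu_flag() {
    flag=$1
    cpuinfo=${2:-/proc/cpuinfo}
    grep -E '^flags[[:space:]]*:' "$cpuinfo" 2>/dev/null | grep -qw -- "$flag"
}

if has_cpu_flag pcid; then
    echo "pcid: present"
else
    echo "pcid: missing"
fi
```

If the host reports the flag but the guest does not, the flag is not being passed to the virtual CPU yet.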

Hello,

I have some servers with an old version of Proxmox: 3.3-1.

What can I do about this vulnerability?

Thanks

qemu-server and pve-manager packages just got updated (up to pve-no-subscription) to allow passing through the PCID CPU flag to VMs for speeding up KPTI ...

Not seeing these in stretch/pve-no-subscription yet?

ML110 crashed with the -102 version.

I'm going to check -103 at the weekend.

You will get feedback, then.

I've seen -103 is available right now.

Thanks for your hard work.

Feedback just before weekend:

Upgraded ML110 Gen7 to -103 Version. Came up smoothly.

Did the same with the other 4.X Servers. They came back properly, too.

As of now, everything is fine apart from the lack of BIOS updates.

But that's not your issue.

So have a nice weekend

qemu-server and pve-manager packages just got updated (up to pve-no-subscription) to allow passing through the PCID CPU flag to VMs for speeding up KPTI, see the admin guide for details:

This can be done, for example, by editing the CPU through the WebUI.

Note that you need to shutdown and start (not reboot from within!) the VM for the changes to apply.

Hi Fabian,

perhaps I am missing something, but I can't find this option in the GUI!

If I add the following line to a VM config, I get the pcid flag inside the VM:

Code:

args: -cpu kvm64,+pcid

In the GUI, under Edit: CPU options, I only see VCPUs, CPU limit and CPU units...

My versions:

Code:

proxmox-ve: 5.1-35 (running kernel: 4.13.13-4-pve)

pve-manager: 5.1-41 (running version: 5.1-41/0b958203)

pve-kernel-4.4.98-3-pve: 4.4.98-102

pve-kernel-4.13.13-4-pve: 4.13.13-35

pve-kernel-4.13.13-3-pve: 4.13.13-34

libpve-http-server-perl: 2.0-8

lvm2: 2.02.168-pve6

corosync: 2.4.2-pve3

libqb0: 1.0.1-1

pve-cluster: 5.0-19

qemu-server: 5.0-18

pve-firmware: 2.0-3

libpve-common-perl: 5.0-25

libpve-guest-common-perl: 2.0-14

libpve-access-control: 5.0-7

libpve-storage-perl: 5.0-17

pve-libspice-server1: 0.12.8-3

vncterm: 1.5-3

pve-docs: 5.1-15

pve-qemu-kvm: 2.9.1-5

pve-container: 2.0-18

pve-firewall: 3.0-5

pve-ha-manager: 2.0-4

ksm-control-daemon: 1.2-2

glusterfs-client: 3.8.8-1

lxc-pve: 2.1.1-2

lxcfs: 2.0.8-1

criu: 2.11.1-1~bpo90

novnc-pve: 0.6-4

smartmontools: 6.5+svn4324-1

zfsutils-linux: 0.7.3-pve1~bpo9

openvswitch-switch: 2.7.0-2

ceph: 12.2.2-pve1

Udo

Did you log in to a Proxmox host web GUI with an updated pve-manager?

If you log on to one with an old pve-manager you won't see it anywhere (I had the same issue: I had updated just one host, but logged in through another and didn't see it).

Hello, the issue was that dmesg was flooded with messages like:

[72638.698664] i40e 0000:01:00.1: TX driver issue detected, PF reset issued

[72639.107399] i40e 0000:01:00.1 eth1: adding 14:fe:b5:2a:2b:7b vid=0

[72639.115347] audit: type=1400 audit(1515602564.655:26027): apparmor="ALLOWED" operation="open" profile="/usr/sbin/sssd" name="/sys/devices/pci0000:00/0000:00:01.0/0000:01:00.1/net/eth1/type" pid=2527 comm="sssd" requested_mask="r" denied_mask="r" fsuid=0 ouid=0

[72641.469976] i40e 0000:01:00.1: TX driver issue detected, PF reset issued

So it is better to check like this:

# grep isolation /var/log/kern.log

Jan 10 04:32:48 proxmox03 kernel: [ 0.000000] Kernel/User page tables isolation: enabled

or

# grep --color -E "cpu_insecure|cpu_meltdown|kaiser" /proc/cpuinfo && echo "patched " || echo "unpatched "

...

patched

Thanks for help
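Another place to look, on kernels that provide it, is the vulnerabilities directory in sysfs. This is only a sketch under an assumption: the file `/sys/devices/system/cpu/vulnerabilities/meltdown` exists in mainline kernels from 4.15 onward and in many distribution backports, but it may well be missing on the kernels discussed in this thread, in which case the log line and the cpuinfo check above remain the way to go. The function name `meltdown_status` is hypothetical.

```shell
#!/bin/sh
# Print the kernel's own report on the Meltdown mitigation, if available.
# The optional argument overrides the sysfs path (useful for testing).
meltdown_status() {
    file=${1:-/sys/devices/system/cpu/vulnerabilities/meltdown}
    if [ -r "$file" ]; then
        cat "$file"
    else
        echo "unknown (no sysfs entry)"
    fi
}

meltdown_status
```

On kernels that support it, the file typically contains a short status such as "Mitigation: PTI", "Vulnerable" or "Not affected".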



Hi Fabian,

This is not clear to me. Sorry, I'm not a KVM power user.

To check if the Proxmox VE host supports PCID, execute the following command as root:

Where do we need to type this, on the PVE server or in the KVM VM?

The story about ‘host’ is not clear either.

Cheers,

Where do we need to type this, on the PVE server or in the KVM VM?

"Proxmox Host" = on the Proxmox node = "PVE server"

The story about ‘host’ is not clear either.

I had to read this twice as well. It is really meant literally: there is now a CPU "Type:" option called "host", which you have to select.

Well, I wouldn't recommend running with CPU type host if you are running a cluster of several Proxmox servers, since they might differ in CPU architecture. That might make live migration difficult (when migrating from a node whose CPU flags are not available on the target node).

The way we do it is that we check the oldest CPU on our nodes, check which CPU architecture it belongs to (google it, or check Intel's site) and set it on all VMs. Don't forget to tick the PCID checkbox if you use the GUI. You have to shut down and start them through Proxmox to make it stick, though.

I posted two screenshots above showing what it looks like.

If you're only running one Proxmox server or don't care about live migration for some reason, you're good to go with host mode.

From Proxmox wiki:

https://pve.proxmox.com/wiki/Qemu/KVM_Virtual_Machines#qm_virtual_machines_settings


CPU Type

Qemu can emulate a number of different CPU types from 486 to the latest Xeon processors. Each new processor generation adds new features, like hardware assisted 3d rendering, random number generation, memory protection, etc. Usually you should select for your VM a processor type which closely matches the CPU of the host system, as it means that the host CPU features (also called CPU flags) will be available in your VMs. If you want an exact match, you can set the CPU type to host, in which case the VM will have exactly the same CPU flags as your host system.

This has a downside though. If you want to do a live migration of VMs between different hosts, your VM might end up on a new system with a different CPU type. If the CPU flags passed to the guest are missing, the qemu process will stop. To remedy this, Qemu also has its own CPU type kvm64, which Proxmox VE uses by default. kvm64 is a Pentium 4 lookalike CPU type, which has a reduced CPU flag set, but is guaranteed to work everywhere.

In short, if you care about live migration and moving VMs between nodes, leave the kvm64 default. If you don’t care about live migration or have a homogeneous cluster where all nodes have the same CPU, set the CPU type to host, as in theory this will give your guests maximum performance.
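For mixed clusters, the CPU type advice above boils down to only exposing flags that every node has. One rough way to see that common set is to collect a copy of /proc/cpuinfo from each node and intersect the flag lists. The sketch below assumes such copies have been saved locally under made-up names like `node1.cpuinfo`; the function name `common_cpu_flags` is hypothetical too. It does not pick a QEMU CPU model, it only shows which flags are safe to rely on everywhere.

```shell
#!/bin/sh
# Print the CPU flags that appear in every given cpuinfo file, one per line.
# Each argument is a saved copy of /proc/cpuinfo from one cluster node.
common_cpu_flags() {
    total=$#
    for f in "$@"; do
        # Take the first "flags" line of the file and emit each flag once.
        awk -F: '/^flags[ \t]*:/ {
            n = split($2, a, " ")
            for (i = 1; i <= n; i++) print a[i]
            exit
        }' "$f" | sort -u
    done | sort | uniq -c | awk -v n="$total" '$1 == n { print $2 }'
}

# Example with hypothetical file names:
# common_cpu_flags node1.cpuinfo node2.cpuinfo node3.cpuinfo | grep -x pcid
```

If `pcid` shows up in the output, every node whose cpuinfo was supplied reports the flag, so passing it to guests should not get in the way of live migration between those nodes.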

I have the same thing with 5.1 (same versions)

I have a cluster on 4.4 and those have the option PCID. (same hardware, I updated one member of that cluster to 5.1 and the option was gone)

Looks like the change is in 4.4, not 5.1.

Warning: problems with Meltdown patched BIOS on some Dell servers (instabilities).

I don't have more details.

Is it really necessary to update the BIOS? Isn't updating the kernel enough for Meltdown?

the pve-manager and qemu-server packages should now really be available in pve-no-subscription also on PVE 5 (sorry for the delay).

checking whether the physical CPU supports PCID needs to be done on the host.


Impact with PTI on server.

https://blog.online.net/2018/01/03/...e-security-flaw-impacting-arm-intel-hardware/


So performance is worse with PTI than without PTI on the guest CPU?