Hey y'all,

So I recently set up Proxmox on an R720xd and run it as a secondary node in my Proxmox cluster. It has ten 1.2TB SAS drives (ST1200MM0108) that I have been running in RAIDZ2. I use a separate SSD for the host (which runs an NFS share for the ZFS pool) and for the single VM I run on the R720, which is set up as a torrent seedbox.

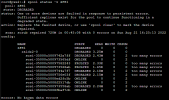

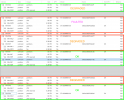

Recently I noticed that the ZFS pool status says the pool is degraded, and I've included a screenshot of the status. I was a little confused, since it says I have multiple faults and degraded drives, but I haven't noticed much of an issue with my pool and everything still works pretty well. The SMART values for all the drives seem fine, and there is no indication there of a failed drive. Does anyone know what I should do here? Are my drives actually dying, and if so, is the only option to replace them with new ones?

I am hoping that either the status is incorrect or there is something I can do to fix them. I just bought these drives off eBay and Facebook Marketplace. No issues were mentioned, and I found none in my own testing. It would be a bummer to have to replace the whole array or find suitable replacements.

Thanks in advance!