Ballooning does not start until the host's memory usage reaches 80%. Memory is also distributed across all VMs, but I guess you only have one large one? In that case, the VM with 64GB keeps using 64GB until host memory usage hits 80%. If other things on the Proxmox host (other VMs, for example) keep using more memory, the VM with 64GB will eventually be shrunk down to 2GB. But as long as there is no memory pressure, it gets the maximum amount.

Is that a Windows VM? Windows might use the full 64GB, for example 4GB for system/user processes plus 60GB for caching, and then report to PVE that only 4GB are used. In reality, though, it occupies the full 64GB of physical RAM.

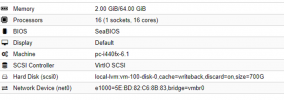

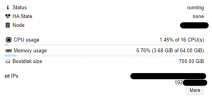

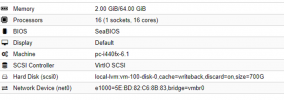

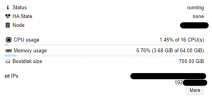

Have a look inside your VM.

If it's Linux, run `free -hm` inside the VM and look at the "free" column. Everything that is not "free" is actually used/blocked physical RAM, no matter what "available" reports. See LinuxAteMyRam.com for a better understanding.
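To see the numbers behind that output, here is a small Python sketch (my own illustration, not a Proxmox tool) that reads `/proc/meminfo` inside a Linux guest and separates truly free RAM from "available" RAM. It assumes the standard `MemTotal`, `MemFree` and `MemAvailable` fields; the function names `parse_meminfo` and `summarize` are made up for this example.

```python
import os
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value}; most values are in kB."""
    values: Dict[str, int] = {}
    for line in text.splitlines():
        if ":" not in line:
            continue
        key, rest = line.split(":", 1)
        parts = rest.split()
        if parts:
            values[key.strip()] = int(parts[0])  # the "kB" suffix, if any, is dropped
    return values


def summarize(info: Dict[str, int]) -> Dict[str, float]:
    """Return total, free, available and occupied memory in GiB."""
    kib_per_gib = 1024 * 1024
    total = info["MemTotal"] / kib_per_gib
    free = info["MemFree"] / kib_per_gib
    # MemAvailable = free RAM + cache the kernel estimates it could reclaim
    available = info.get("MemAvailable", info["MemFree"]) / kib_per_gib
    return {
        "total": total,
        "free": free,              # RAM holding nothing at all
        "available": available,    # what the "available" column of free shows
        "occupied": total - free,  # roughly what the host has to back, cache included
    }


if __name__ == "__main__" and os.path.exists("/proc/meminfo"):
    with open("/proc/meminfo") as f:
        for name, gib in summarize(parse_meminfo(f.read())).items():
            print(f"{name:>10}: {gib:7.2f} GiB")
```

On a guest whose page cache has filled up, "available" can be large while "free" is close to zero, which is exactly the case where the VM really occupies almost all of its RAM on the host.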

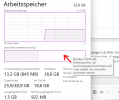

If you have a Windows VM, look at the Task Manager:


Here I have 32GB of RAM and Windows reports that only 13.3 of 32GB are in use. But if you look at the bottom graph, it also shows 19GB as "Standby", which is RAM used for caching. So it actually uses 13.3GB for applications/system plus 19GB for caching, meaning it is using the full 32GB of RAM and no RAM at all is free/unused.

Windows reports the same thing to PVE through the guest agent and the ballooning driver, so PVE will also tell you that only 13.3GB are used, even though the full 32GB are in use.
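The gap between what is reported and what is physically occupied can be put into numbers with a tiny Python sketch. It is a simplified model under one assumption: only "in use" memory is reported to PVE, while cache ("Standby" in Task Manager) still sits in the VM's RAM. `pve_view` is a hypothetical helper name.

```python
from typing import Tuple


def pve_view(total_gb: float, in_use_gb: float, cache_gb: float) -> Tuple[float, float]:
    """Return (RAM the guest reports as used, physical RAM it actually occupies).

    Simplified model: only 'in use' memory is reported, while cache
    ('Standby' in Windows Task Manager) still occupies the VM's RAM.
    """
    reported = in_use_gb
    occupied = min(total_gb, in_use_gb + cache_gb)  # can't exceed the VM's RAM
    return reported, occupied


if __name__ == "__main__":
    print(pve_view(64, 4, 60))     # 64GB example: 4GB processes + 60GB cache
    print(pve_view(32, 13.3, 19))  # Task Manager example: 13.3GB in use + 19GB standby
```

In both cases the reported value is the small "in use" number, while the occupied value is the whole RAM of the VM (13.3 + 19 slightly exceeds 32 only because of rounding in Task Manager, hence the `min`).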

Correct. And using 2GB as the min RAM for ballooning isn't a great choice either. As soon as the host exceeds 80% RAM usage, ballooning will kick in and slowly remove RAM from your VM. This continues until the host's RAM usage drops below 80% or until your "Min RAM" value (2GB) is reached. Ballooning doesn't care whether the VM needs that RAM or not. As long as the host's RAM usage stays above 80%, it will keep taking RAM away from the VM until the VM has only 2GB left. The VM is then forced to kill processes until everything fits into those 2GB, so the guest OS will basically need to kill nearly everything.

If you don't want ballooning to kill your guest's processes, you should choose a "Min RAM" that is at least as high as what your guest needs to operate. Let's say your guest usually uses 4 to 32GB of the 64GB RAM for system/user processes. Then your "Min RAM" shouldn't be lower than 32GB.
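Here is a rough Python sketch of the behaviour described above, for a single VM. The 128GB host size, the 1GB step and the "other usage" figures are made-up assumptions, and the real PVE logic balances all ballooning VMs against each other, so treat this only as an illustration of why a too-low "Min RAM" ends badly.

```python
from typing import List, Tuple


def simulate_balloon(
    host_total_gb: float,
    other_usage_gb: float,
    vm_max_gb: float,
    vm_min_gb: float,
    threshold: float = 0.80,
    step_gb: float = 1.0,
) -> Tuple[float, List[float]]:
    """Shrink one VM's RAM while host usage stays above the threshold.

    Simplified single-VM model: 'other_usage_gb' is everything on the host
    except this VM and is assumed constant. The guest's actual needs are
    ignored, just like ballooning ignores them.
    """
    vm = vm_max_gb
    history = [vm]
    while (other_usage_gb + vm) / host_total_gb > threshold and vm > vm_min_gb:
        vm = max(vm_min_gb, vm - step_gb)  # never go below "Min RAM"
        history.append(vm)
    return vm, history


if __name__ == "__main__":
    for other, vm_min in [(30, 2), (60, 2), (110, 2), (110, 32)]:
        final, _ = simulate_balloon(128, other, 64, vm_min)
        print(f"other={other} GB, min={vm_min} GB -> VM ends with {final} GB")
```

With 30GB of other usage the VM keeps its full 64GB; with 60GB it is shrunk to 42GB; with 110GB it is pushed all the way down to its 2GB minimum, or stops at 32GB if "Min RAM" is set to 32GB.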

Thanks leesteken and Dunuin for your detailed answers. I thought these problems were caused by a bad configuration on my part, but it turns out the functionality is working as intended.

Could you also help me with my second problem?

That was the first problem, but I have another one: when I start a backup of this VM (to a remote Proxmox Backup Server), the VM becomes very slow (almost frozen), and this happens both from the console and via SPICE.

Last night the backup seemed to start correctly, but after 30 minutes the process was killed and returned the error "backup write data failed: command error: write_data upload error: pipelined request failed: broken pipe". I have had the same problem for several days.

This morning I tried to start a manual backup and the virtual machine seemed to be locked up: if I ping the VM, it responds correctly, but when I try to connect via RDP, the VM doesn't respond.

Here is the syslog of my PVE host:

Apr 08 01:00:05 srv1 pvescheduler[613142]: <root@pam> starting task UPID:srv1:00095B17:0096B9DD:624F6CF5:vzdump:100:root@pam:

Apr 08 01:00:06 srv1 pvescheduler[613143]: INFO: starting new backup job: vzdump 100 --storage STORE-REMOTEBKP --mailto berto@berto.it --mode snapshot --mailnotification failure --quiet 1

Apr 08 01:00:06 srv1 pvescheduler[613143]: INFO: Starting Backup of VM 100 (qemu)

Apr 08 01:01:41 srv1 pvedaemon[547893]: <root@pam> successful auth for user 'root@pam'

Apr 08 01:03:59 srv1 postfix/qmgr[1097]: 90779180DA4: from=<>, size=7000, nrcpt=1 (queue active)

Apr 08 01:03:59 srv1 postfix/local[614489]: error: open database /etc/aliases.db: No such file or directory

Apr 08 01:03:59 srv1 postfix/local[614489]: warning: hash:/etc/aliases is unavailable. open database /etc/aliases.db: No such file or directory

Apr 08 01:03:59 srv1 postfix/local[614489]: warning: hash:/etc/aliases: lookup of 'root' failed

Apr 08 01:03:59 srv1 postfix/local[614489]: 90779180DA4: to=<root@srv1.berto>, relay=local, delay=8999, delays=8999/0.02/0/0.01, dsn=4.3.0, status=deferred (alias database unavailable)

Apr 08 01:13:59 srv1 postfix/qmgr[1097]: BBC2F180E45: from=<root@srv1.berto>, size=6475, nrcpt=2 (queue active)

Apr 08 01:17:01 srv1 CRON[619048]: pam_unix(cron:session): session opened for user root(uid=0) by (uid=0)

Apr 08 01:17:01 srv1 CRON[619049]: (root) CMD ( cd / && run-parts --report /etc/cron.hourly)

Apr 08 01:17:01 srv1 CRON[619048]: pam_unix(cron:session): session closed for user root

Apr 08 01:17:41 srv1 pvedaemon[547893]: <root@pam> successful auth for user 'root@pam'

Apr 08 01:18:45 srv1 pveproxy[611070]: worker exit

Apr 08 01:18:45 srv1 pveproxy[1147]: worker 611070 finished

Apr 08 01:18:45 srv1 pveproxy[1147]: starting 1 worker(s)

Apr 08 01:18:45 srv1 pveproxy[1147]: worker 619627 started

Apr 08 01:21:21 srv1 pveproxy[610492]: worker exit

Apr 08 01:21:21 srv1 pveproxy[1147]: worker 610492 finished

Apr 08 01:21:21 srv1 pveproxy[1147]: starting 1 worker(s)

Apr 08 01:21:21 srv1 pveproxy[1147]: worker 620496 started

Apr 08 01:23:59 srv1 postfix/qmgr[1097]: 3BA20180F07: from=<>, size=6570, nrcpt=1 (queue active)

Apr 08 01:23:59 srv1 postfix/local[621353]: error: open database /etc/aliases.db: No such file or directory

Apr 08 01:23:59 srv1 postfix/local[621353]: warning: hash:/etc/aliases is unavailable. open database /etc/aliases.db: No such file or directory

Apr 08 01:23:59 srv1 postfix/local[621353]: warning: hash:/etc/aliases: lookup of 'root' failed

Apr 08 01:23:59 srv1 postfix/local[621353]: 3BA20180F07: to=<root@srv1.berto>, relay=local, delay=269302, delays=269302/0.04/0/0.01, dsn=4.3.0, status=deferred (alias database unavailable)

Apr 08 01:27:04 srv1 systemd[1]: Starting Daily PVE download activities...

Apr 08 01:27:06 srv1 pveupdate[622413]: <root@pam> starting task UPID:srv1:00097F52:009932D1:624F734A:aptupdate::root@pam:

Apr 08 01:27:07 srv1 pveupdate[622418]: update new package list: /var/lib/pve-manager/pkgupdates

Apr 08 01:27:09 srv1 pveupdate[622413]: <root@pam> end task UPID:srv1:00097F52:009932D1:624F734A:aptupdate::root@pam: OK

Apr 08 01:27:09 srv1 systemd[1]: pve-daily-update.service: Succeeded.

Apr 08 01:27:09 srv1 systemd[1]: Finished Daily PVE download activities.

Apr 08 01:27:09 srv1 systemd[1]: pve-daily-update.service: Consumed 4.027s CPU time.

Apr 08 01:28:26 srv1 pveproxy[611800]: worker exit

Apr 08 01:28:26 srv1 pveproxy[1147]: worker 611800 finished

Apr 08 01:28:26 srv1 pveproxy[1147]: starting 1 worker(s)

Apr 08 01:28:26 srv1 pveproxy[1147]: worker 623227 started

Apr 08 01:32:58 srv1 QEMU[25831]: HTTP/2.0 connection failed

Apr 08 01:32:59 srv1 pvescheduler[613143]: ERROR: Backup of VM 100 failed - backup write data failed: command error: write_data upload error: pipelined request failed: broken pipe

Apr 08 01:32:59 srv1 pvescheduler[613143]: INFO: Backup job finished with errors

Apr 08 01:32:59 srv1 pvescheduler[613143]: job errors

Apr 08 01:32:59 srv1 postfix/pickup[597702]: 7B8F2180C6B: uid=0 from=<root>

Apr 08 01:32:59 srv1 postfix/cleanup[624733]: 7B8F2180C6B: message-id=<20220407233259.7B8F2180C6B@srv1.berto>

Apr 08 01:32:59 srv1 postfix/qmgr[1097]: 7B8F2180C6B: from=<root@srv1.berto>, size=8767, nrcpt=1 (queue active)

Apr 08 01:33:01 srv1 postfix/smtp[624735]: 7B8F2180C6B: to=<berto@berto.it>

Apr 08 01:33:01 srv1 postfix/qmgr[1097]: 7B8F2180C6B: removed

Apr 08 01:33:41 srv1 pvedaemon[551578]: <root@pam> successful auth for user 'root@pam'

Apr 08 01:33:59 srv1 postfix/qmgr[1097]: E99D2180A29: from=<>, size=6992, nrcpt=1 (queue active)

Apr 08 01:33:59 srv1 postfix/local[625072]: error: open database /etc/aliases.db: No such file or directory

Apr 08 01:33:59 srv1 postfix/local[625072]: warning: hash:/etc/aliases is unavailable. open database /etc/aliases.db: No such file or directory

Apr 08 01:33:59 srv1 postfix/local[625072]: warning: hash:/etc/aliases: lookup of 'root' failed

Apr 08 01:33:59 srv1 postfix/local[625072]: E99D2180A29: to=<root@srv1.berto>, relay=local, delay=97203, delays=97203/0.01/0/0.01, dsn=4.3.0, status=deferred (alias database unavailable)

Apr 08 01:38:59 srv1 postfix/qmgr[1097]: 2F084180DC7: from=<root@srv1.berto>, size=5596, nrcpt=2 (queue active)

Apr 08 01:41:19 srv1 pveproxy[620496]: worker exit

Apr 08 01:41:19 srv1 pveproxy[1147]: worker 620496 finished
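To check how long the backup actually ran before it failed, the start line and the error line in the syslog can be compared. A minimal Python sketch, assuming the syslog line format shown above and the year 2022 (syslog lines don't include a year; 2022 is taken from the postfix message-id in the log). The helper names `parse_ts` and `backup_duration` are hypothetical.

```python
import re
import sys
from datetime import datetime, timedelta
from typing import Iterable, Optional

# Syslog lines start with e.g. "Apr 08 01:00:06"; the year is an assumption.
TS_RE = re.compile(r"^([A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) ")


def parse_ts(line: str, year: int = 2022) -> Optional[datetime]:
    """Return the line's timestamp, or None for wrapped lines without one."""
    m = TS_RE.match(line)
    if m is None:
        return None
    return datetime.strptime(f"{year} {m.group(1)}", "%Y %b %d %H:%M:%S")


def backup_duration(lines: Iterable[str]) -> Optional[timedelta]:
    """Time between 'Starting Backup of VM' and 'ERROR: Backup of VM ... failed'."""
    start = end = None
    for line in lines:
        ts = parse_ts(line)
        if ts is None:
            continue  # continuation line, no timestamp
        if "Starting Backup of VM" in line:
            start = ts
        elif "ERROR: Backup of VM" in line and "failed" in line:
            end = ts
    if start is None or end is None:
        return None
    return end - start


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1]) as f:  # path to a saved copy of the syslog
        print(backup_duration(f))
```

Applied to the log above, this gives about 32 minutes 53 seconds between "Starting Backup of VM 100" and the error, and the QEMU "HTTP/2.0 connection failed" line appears one second before the error is logged.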