I've got four HP DL360 G9 servers, which I intend to use in a hyper-converged cluster setup with Ceph. All of them have the same hardware configuration: two sockets with Intel(R) Xeon(R) CPU E5-2699 v4 @ 2.20GHz processors (44 cores / 88 threads per server in total), 768 GiB of registered DDR4 RAM (configured memory speed: 1866 MT/s), eight Samsung PM883 (MZ7LH1T9HMLT) SATA SSDs (data-center grade, 1.92 TB, 30K IOPS random write) connected via an H240ar controller in HBA mode at 6 Gb/s, four integrated 1G network ports and one additional dual-port 10G network adapter. All the servers are upgraded to the latest firmware and have Proxmox VE 7.2-11 installed, with all packages upgraded to the current versions from the public repositories. The OS is installed on an internal Kingston DC1000B 240GB NVMe M.2 SSD (data-center grade) via a PCIe-to-NVMe adapter. All disks are brand new.

With such a configuration, one would expect disk performance to be fine. Unfortunately, this is not the case: my three-year-old average PC beats these servers several times over on the same drives.

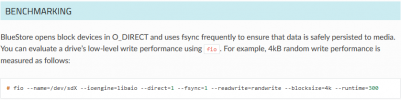

Testing was performed using the fio utility, as follows:

```
fio --name=randwrite --filename=/dev/drive --ioengine=libaio --direct=1 --fsync=1 --readwrite=randwrite --blocksize=4k --runtime=120
```

Since all disks were meant to be used in Ceph, I configured the scheduler and write caching on the host according to the recommendations in the Ceph documentation: `/sys/block/<dev>/queue/scheduler` was set to `none`, and write caching was set to `write through`. I ran 5 tests consecutively, and the reported results are the averages of the values obtained.

The best I managed to get when writing to a raw disk device in the host OS is roughly 37 MiB/s at 9,500 IOPS. That is definitely not the 30K IOPS rating, but it'll do. Then I started testing the performance inside the virtual machine.

It was a long marathon during which I tried almost every possible disk layout: RAID, LVM, LVM-Thin, LVM over RAID, LVM-Thin over RAID, ZFS, qcow2, raw devices passed through into the virtual machine, the SCSI controller, the VirtIO controller, etc. The best result I got was when I passed the block device through into the virtual machine.
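The host-side procedure described above sets the queue parameters, then runs five consecutive fio tests and averages the results. It can be scripted roughly as follows. This is a minimal sketch that assumes Python 3.7+, root privileges, and fio's JSON output (`--output-format=json`, where the `bw` field is reported in KiB/s). All function names are hypothetical. It writes directly to the device and destroys any data on it.

```python
import json
import statistics
import subprocess
from pathlib import Path


def apply_ceph_queue_settings(device: str) -> None:
    """Set the I/O scheduler to 'none' and the write cache mode to
    'write through' via sysfs. Needs root; not persistent across reboots."""
    queue = Path("/sys/block") / device / "queue"
    (queue / "scheduler").write_text("none")
    (queue / "write_cache").write_text("write through")


def run_fio_once(target: str) -> dict:
    """Run the 4k random-write test once and return fio's parsed JSON report.
    WARNING: writes directly to `target` and destroys its contents."""
    cmd = [
        "fio", "--name=randwrite", f"--filename={target}",
        "--ioengine=libaio", "--direct=1", "--fsync=1",
        "--readwrite=randwrite", "--blocksize=4k", "--runtime=120",
        "--output-format=json",
    ]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)


def average_write_results(reports: list) -> tuple:
    """Mean write IOPS and mean bandwidth in MiB/s over several fio reports.
    fio's JSON 'bw' field is in KiB/s, hence the division by 1024."""
    iops = [r["jobs"][0]["write"]["iops"] for r in reports]
    mib_s = [r["jobs"][0]["write"]["bw"] / 1024 for r in reports]
    return statistics.mean(iops), statistics.mean(mib_s)


def benchmark(device: str, runs: int = 5) -> tuple:
    """Apply the queue settings, run fio `runs` times back to back on
    /dev/<device>, and return (mean IOPS, mean MiB/s)."""
    apply_ceph_queue_settings(device)
    reports = [run_fio_once(f"/dev/{device}") for _ in range(runs)]
    return average_write_results(reports)
```

For example, `benchmark("sdX")` would test a hypothetical `/dev/sdX`. Keeping the parsing in `average_write_results` separate from the fio invocation makes it possible to average reports that were collected by hand.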

The guest is Debian 11 (CLI only, fully upgraded), configured as follows:

```
agent: 1
boot: order=scsi0
cores: 2
cpu: host
machine: q35
memory: 8192
meta: creation-qemu=7.0.0,ctime=1667311996
name: test-vm
net0: virtio=2A:*:*:*:65:7B,bridge=vmbr0,firewall=1
numa: 0
ostype: l26
scsi0: local-lvm:vm-100-disk-0,discard=on,size=32G,ssd=1
scsihw: virtio-scsi-single
smbios1: uuid=xxxxxxxx-xxxx-4xxx-xxxx-xxxxxxxxxxxx
sockets: 2
virtio5: /dev/sdc3,backup=0,discard=on,iothread=1,size=640127671808
```

I got about 13 MiB/s at 3,325 IOPS. Not impressive numbers at all. Sorry to mention it here, but an ESXi VM on the same hardware shows three times the performance. Even FreeBSD 13, running on such a server with default settings, performs better than Linux: 45.46 MiB/s at 11.64k IOPS on average (ioengine=psync). So I started to blame the disk controller driver, especially since it has had known regressions before.
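The host and VM figures can be related with a little arithmetic. This sketch assumes the same fio parameters inside the VM as on the host: 4 KiB blocks, fio's default iodepth of 1, and an fsync after every write. Under those parameters IOPS and the mean time per write are simply reciprocals. The function names are hypothetical. Datasheet ratings such as the PM883's 30K IOPS are typically measured with many I/Os in flight, so that row is only a rough upper reference, not a like-for-like target.

```python
BLOCK_KIB = 4  # matches fio --blocksize=4k


def iops_to_mib_s(iops: float, block_kib: float = BLOCK_KIB) -> float:
    """Bandwidth implied by an IOPS figure at a fixed block size."""
    return iops * block_kib / 1024


def mean_write_time_us(iops: float) -> float:
    """With one write in flight (fio's default iodepth=1) and an fsync after
    every write, the average time per write+fsync cycle is simply 1 / IOPS."""
    return 1_000_000 / iops


for label, iops in [("SSD rating", 30_000), ("host", 9_500), ("VM", 3_325)]:
    print(f"{label:>10}: {iops_to_mib_s(iops):6.1f} MiB/s, "
          f"{mean_write_time_us(iops):6.1f} us per write")
```

With the numbers from this post, the host needs roughly 105 µs per synchronous 4 KiB write and the VM roughly 301 µs. Under these assumptions, the virtualization path adds on the order of 200 µs to every write.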

I had no other controller at hand to test this theory, so I decided to abstract away from the hardware disk subsystem altogether and test the performance of a disk in RAM. I created a small RAM drive (`modprobe brd rd_nr=1 rd_size=16777216`) and tested its speed, first on the host and then in the virtual machine (`qm set 100 -virtio0 /dev/ram0`, then setting `iothread=1` in the VM config file). These are the values I got:

Host: 866.4 MiB/s at 221.8k IOPS

VM: 13.56 MiB/s at 3,572.8 IOPS
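The RAM-disk numbers can be put in the same per-write terms. This sketch assumes that brd's `rd_size` is given in KiB and that the same queue-depth-1, fsync-per-write fio job was used on both sides. The variable names are illustrative only.

```python
# brd's rd_size parameter is given in KiB (units of 1024 bytes).
RD_SIZE_KIB = 16_777_216
ram_disk_gib = RD_SIZE_KIB * 1024 / 2**30

# 4k random-write results on /dev/ram0 as reported above.
host_iops = 221_800
vm_iops = 3_572.8

slowdown = host_iops / vm_iops   # how many times fewer IOPS in the VM
host_us = 1_000_000 / host_iops  # mean time per write on the host
vm_us = 1_000_000 / vm_iops      # mean time per write in the VM
added_us = vm_us - host_us       # extra time per write on the VM path

print(f"RAM disk size: {ram_disk_gib:.0f} GiB")
print(f"VM is {slowdown:.0f}x slower: {host_us:.1f} us vs {vm_us:.1f} us per write "
      f"(+{added_us:.0f} us)")
```

The RAM disk is 16 GiB. In the VM, each write takes roughly 280 µs, about 275 µs more than on the host. That is the same order as the roughly 301 µs per write measured on the passed-through SSD. This is consistent with a per-request cost in the VM's I/O path that dominates regardless of the backing device. The arithmetic alone does not show which layer (QEMU, virtio, or the guest kernel) is responsible.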

I have never been able to get values higher than these, even when writing to a raw partition on an NVMe drive. So far it looks like the bottleneck is inside QEMU. But maybe I am mistaken? Disk performance in Ceph is even more miserable (I use a 10G network to synchronize nodes).

My inner geek really wants to run a cluster on Proxmox VE, and only these disk performance issues stand in the way. Please help me solve them.