Hi @alexskysilk,

The two Ceph interfaces are configured as an LACP bond (802.3ad); each interface runs at 25 Gbit/s.

The Ceph private (cluster) and public networks are both configured on the same LACP bond (so 50 Gbit/s aggregate).

I'll answer all your questions:

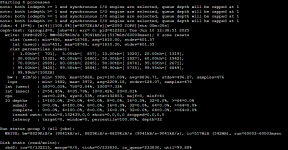

1) I ran the fio test and this is the result:

Jobs: 4 (f=4): [w(4)][100.0%][w=1223MiB/s][w=313k IOPS][eta 00m:00s]

test: (groupid=0, jobs=4): err= 0: pid=619700: Wed Jun 4 15:10:16 2025

write: IOPS=321k, BW=1256MiB/s (1317MB/s)(73.6GiB/60001msec); 0 zone resets

clat (usec): min=2, max=12156, avg=12.12, stdev=31.57

lat (usec): min=2, max=12156, avg=12.16, stdev=31.57

clat percentiles (usec):

| 1.00th=[ 6], 5.00th=[ 7], 10.00th=[ 7], 20.00th=[ 8],

| 30.00th=[ 8], 40.00th=[ 9], 50.00th=[ 10], 60.00th=[ 10],

| 70.00th=[ 11], 80.00th=[ 12], 90.00th=[ 17], 95.00th=[ 32],

| 99.00th=[ 43], 99.50th=[ 46], 99.90th=[ 231], 99.95th=[ 490],

| 99.99th=[ 1352]

bw ( MiB/s): min= 630, max= 1421, per=100.00%, avg=1256.07, stdev=23.56, samples=476

iops : min=161482, max=364006, avg=321554.87, stdev=6031.27, samples=476

lat (usec) : 4=0.17%, 10=63.96%, 20=26.80%, 50=8.77%, 100=0.15%

lat (usec) : 250=0.05%, 500=0.05%, 750=0.02%, 1000=0.01%

lat (msec) : 2=0.01%, 4=0.01%, 10=0.01%, 20=0.01%

cpu : usr=3.59%, sys=90.85%, ctx=66512, majf=4, minf=328

IO depths : 1=100.0%, 2=0.0%, 4=0.0%, 8=0.0%, 16=0.0%, 32=0.0%, >=64=0.0%

submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%

issued rwts: total=0,19285461,0,0 short=0,0,0,0 dropped=0,0,0,0

latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):

WRITE: bw=1256MiB/s (1317MB/s), 1256MiB/s-1256MiB/s (1317MB/s-1317MB/s), io=73.6GiB (79.0GB), run=60001-60001msec
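As a quick cross-check of these numbers (my own arithmetic, not part of the fio output), the reported bandwidth and IOPS can be compared against each other. The sketch below only uses values copied from the summary above; the variable names are mine. Dividing bandwidth by IOPS gives the block size the run implies, and dividing the issued writes by the runtime should land close to the reported IOPS.

```python
# Values copied from the fio summary above.
iops = 321554.87        # avg IOPS reported by fio
bw_mib_s = 1256.07      # avg bandwidth in MiB/s reported by fio
total_ios = 19285461    # write count from the "issued rwts" line
runtime_s = 60.001      # run=60001msec

# Implied block size in KiB: bandwidth divided by IOPS.
block_kib = bw_mib_s * 1024 / iops

# IOPS recomputed from the total number of writes over the runtime.
iops_from_total = total_ios / runtime_s

print(f"implied block size: {block_kib:.2f} KiB")
print(f"IOPS from issued writes: {iops_from_total:.0f}")
```

The implied block size comes out at about 4 KiB, which is consistent with a small-block write test.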

2) CRUSH RULE

{
    "rule_id": 1,
    "rule_name": "ceph-pool-ssd",
    "type": 1,
    "steps": [
        {
            "op": "take",
            "item": -2,
            "item_name": "default~ssd"
        },
        {
            "op": "chooseleaf_firstn",
            "num": 0,
            "type": "host"
        },
        {
            "op": "emit"
        }
    ]
}
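To illustrate what this rule asks for, here is a toy sketch, not real CRUSH. The rule takes the `default~ssd` root, and `chooseleaf_firstn` with `"num": 0` and `"type": "host"` selects as many distinct hosts as the pool has replicas, then one OSD under each. The `place` function and its `pg_seed` parameter are hypothetical names of mine, and the random selection is only a stand-in for CRUSH's deterministic weighted hashing; the host and OSD layout matches my cluster (see the OSD tree).

```python
import random

# Host -> OSD ids, matching the OSD tree of this cluster.
HOSTS = {
    "PX01": [0, 1, 2, 3],
    "PX02": [4, 5, 6, 7],
    "PX03": [8, 9, 10, 11],
}

def place(pg_seed: int, pool_size: int = 3) -> list[int]:
    """Toy stand-in for the rule: pick distinct hosts, then one OSD per host.

    Real CRUSH uses deterministic weighted hashing, not random.sample;
    this only illustrates the host-level failure-domain constraint.
    """
    rng = random.Random(pg_seed)  # deterministic for a given seed
    hosts = rng.sample(sorted(HOSTS), k=min(pool_size, len(HOSTS)))
    return [rng.choice(HOSTS[h]) for h in hosts]

acting = place(pg_seed=42)
print(acting)
```

With three hosts and a pool size of 3, every placement ends up with exactly one replica on each host.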

3) CEPH OSD TREE

ID  CLASS  WEIGHT    TYPE NAME       STATUS  REWEIGHT  PRI-AFF
-1         41.91705  root default
-3         13.97235      host PX01
 0    ssd   3.49309          osd.0       up   1.00000  1.00000
 1    ssd   3.49309          osd.1       up   0.95001  1.00000
 2    ssd   3.49309          osd.2       up   1.00000  1.00000
 3    ssd   3.49309          osd.3       up   1.00000  1.00000
-5         13.97235      host PX02
 4    ssd   3.49309          osd.4       up   0.95001  1.00000
 5    ssd   3.49309          osd.5       up   1.00000  1.00000
 6    ssd   3.49309          osd.6       up   1.00000  1.00000
 7    ssd   3.49309          osd.7       up   1.00000  1.00000
-7         13.97235      host PX03
 8    ssd   3.49309          osd.8       up   1.00000  1.00000
 9    ssd   3.49309          osd.9       up   1.00000  1.00000
10    ssd   3.49309          osd.10      up   1.00000  1.00000
11    ssd   3.49309          osd.11      up   1.00000  1.00000

4) CEPH CONF

cat /etc/ceph/ceph.conf

[global]

auth_client_required = cephx

auth_cluster_required = cephx

auth_service_required = cephx

cluster_network = 10.255.254.101/24

fsid = d59d29e6-5b2d-4ab3-8eec-5145eaaa4850

mon_allow_pool_delete = true

mon_host = 10.255.255.101 10.255.255.102 10.255.255.103

ms_bind_ipv4 = true

ms_bind_ipv6 = false

osd_pool_default_min_size = 2

osd_pool_default_size = 3

public_network = 10.255.255.101/24

rbd cache = true

rbd cache writethrough until flush = false

debug asok = 0/0

debug auth = 0/0

debug buffer = 0/0

debug client = 0/0

debug context = 0/0

debug crush = 0/0

debug filer = 0/0

debug filestore = 0/0

debug finisher = 0/0

debug heartbeatmap = 0/0

debug journal = 0/0

debug journaler = 0/0

debug lockdep = 0/0

debug mds = 0/0

debug mds balancer = 0/0

debug mds locker = 0/0

debug mds log = 0/0

debug mds log expire = 0/0

debug mds migrator = 0/0

debug mon = 0/0

debug monc = 0/0

debug ms = 0/0

debug objclass = 0/0

debug objectcacher = 0/0

debug objecter = 0/0

debug optracker = 0/0

debug osd = 0/0

debug paxos = 0/0

debug perfcounter = 0/0

debug rados = 0/0

debug rbd = 0/0

debug rgw = 0/0

debug throttle = 0/0

debug timer = 0/0

debug tp = 0/0

[client]

keyring = /etc/pve/priv/$cluster.$name.keyring

[client.crash]

keyring = /etc/pve/ceph/$cluster.$name.keyring

[mon.px01]

public_addr = 10.255.255.101

[mon.px02]

public_addr = 10.255.255.102

[mon.px03]

public_addr = 10.255.255.103
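One note on the network entries: `public_network` and `cluster_network` are written as host addresses with a /24 prefix. The small check below (my own addition, Python standard library only) shows which subnets they resolve to and confirms that the three monitor addresses sit in the public one. The values are copied from the config above; the variable names are mine.

```python
import ipaddress

# Values copied from ceph.conf; strict=False accepts host bits in the address.
public = ipaddress.ip_network("10.255.255.101/24", strict=False)
cluster = ipaddress.ip_network("10.255.254.101/24", strict=False)
mons = ["10.255.255.101", "10.255.255.102", "10.255.255.103"]

# Subnets the two entries resolve to.
print(public, cluster)  # 10.255.255.0/24 10.255.254.0/24

# The two networks are separate subnets, even though they share the bond.
print(public.overlaps(cluster))  # False

# All monitors are reachable on the public network.
print(all(ipaddress.ip_address(m) in public for m in mons))  # True
```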