Background:

One 4TB HDD, partitioned for my home network.

Set up multiple VMs and containers.

After a power cut, all my VMs and containers came back online, bar one: Plex Media Server.

Proxmox gave me the following error when I tried to start the server:

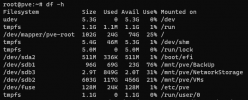

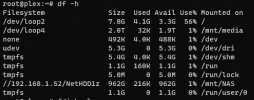

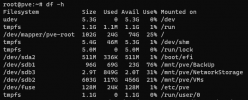

```
run_buffer: 321 Script exited with status 9
lxc_init: 847 Failed to run lxc.hook.pre-start for container "107"
__lxc_start: 2008 Failed to initialize container "107"
TASK ERROR: startup for container '107' failed
```

After reading other forums, I found that removing the mounted storage file `vm-107-disk-1.raw` (on `/mnt/pve/NetworkStorage`, backed by `/dev/sdb3`) from the Plex server would let me start it up again.

This worked and I could log in to Plex, although, of course, with the storage file detached I couldn't access any of my media.

When I added the storage file back, the server failed to start with the same error.

Really hoping I haven't lost 850GB worth of data.

Here's an old thread from when I was setting it up, in case it provides any further info:

https://forum.proxmox.com/threads/data-container-from-ext-hdd-plex-proxmox.122723/

Massively appreciate any and all help fixing this.