Since upgrading to Proxmox VE 7.0, I have lost all network connectivity on 2 nodes (but not on the third, even though all three were upgraded at the same time!).

Troubleshooting steps:

* Added `hwaddress` to the bridges

* Tried both `auto` and `allow-hotplug` in `/etc/network/interfaces` as well

* I *can* bring the interfaces up with `ip link set en0 up`, but I can't get them to pass any traffic

* `ifupdown2` does not seem to be installed
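For anyone who wants to repeat the checks above quickly, here is a rough sketch in Python. It is my own assumption of a useful check, not a Proxmox tool, and the helper names (`parse_bridges`, `report`) are hypothetical. It reads an ifupdown-style `/etc/network/interfaces`, and for every bridge with `bridge-ports` it checks that each port exists under `/sys/class/net`, is `up` with carrier, and that any `hwaddress` matches the MAC the kernel reports. The parser only handles simple stanzas.

```python
"""Hypothetical sketch: compare bridges in an ifupdown-style interfaces
file with what the kernel exposes under /sys/class/net."""
from __future__ import annotations

from pathlib import Path


def read_sysfs(base: Path, iface: str, attr: str) -> str | None:
    """Return the stripped contents of <base>/<iface>/<attr>, or None."""
    try:
        return (base / iface / attr).read_text().strip()
    except OSError:
        # e.g. reading 'carrier' fails while the link is administratively down
        return None


def parse_bridges(text: str) -> dict[str, dict[str, str]]:
    """Collect bridge-ports / hwaddress options per 'iface' stanza (simplified)."""
    stanzas: dict[str, dict[str, str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and indentation
        if not line:
            continue
        words = line.split()
        if words[0] == "iface" and len(words) >= 2:
            current = words[1]
            stanzas.setdefault(current, {})  # inet and inet6 stanzas share options
        elif words[0] in ("auto", "source", "mapping") or words[0].startswith("allow-"):
            current = None  # these keywords end the previous stanza
        elif current is not None and words[0] in ("bridge-ports", "bridge_ports", "hwaddress"):
            stanzas[current][words[0].replace("_", "-")] = " ".join(words[1:])
    # keep only stanzas that actually define a bridge
    return {name: opts for name, opts in stanzas.items() if "bridge-ports" in opts}


def report(interfaces_text: str, sys_net: Path = Path("/sys/class/net")) -> list[str]:
    """Return human-readable problems found for each configured bridge."""
    problems: list[str] = []
    for bridge, opts in parse_bridges(interfaces_text).items():
        for port in opts["bridge-ports"].split():
            if port == "none":
                continue
            if not (sys_net / port).exists():
                problems.append(f"{bridge}: port {port} not found in {sys_net}")
                continue
            state = read_sysfs(sys_net, port, "operstate")
            carrier = read_sysfs(sys_net, port, "carrier")
            if state != "up" or carrier != "1":
                problems.append(f"{bridge}: port {port} operstate={state} carrier={carrier}")
        hw = opts.get("hwaddress", "").split()
        if hw:
            # the MAC is the last word ('hwaddress ether <mac>' or 'hwaddress <mac>')
            actual = read_sysfs(sys_net, bridge, "address")
            if actual is not None and actual.lower() != hw[-1].lower():
                problems.append(f"{bridge}: hwaddress {hw[-1]} but kernel reports {actual}")
    return problems


if __name__ == "__main__":
    cfg = Path("/etc/network/interfaces")
    if cfg.exists():
        for msg in report(cfg.read_text()) or ["no obvious mismatch found"]:
            print(msg)
```

A port reported as "not found" would mean the bridge references a NIC name the kernel does not currently have; a port that exists but shows no carrier points more towards the link itself.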

Info

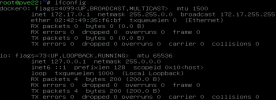

* Attached the output of `ip a`, `ifconfig` and `/etc/network/interfaces` as images (captured through TinyPilot)

* The `pveversion` output was too big, so it is here: https://ibb.co/s6Gy8p0

* The `lspci` output was too big, so it is here (the two network cards are marked with red rectangles for easy viewing): https://ibb.co/XktvQ7m

I am at a loss, and without Ceph quorum nothing on my cluster works now.

Any help appreciated!