Another workaround, besides the one suggested by @Fidor, should be setting

Code:

thin_check_options = [ "-q", "--skip-mappings" ]

in your /etc/lvm/lvm.conf and running update-initramfs -u afterwards.

Thanks for this.

I wonder if there is a way to increase the udev timeout, so we can avoid having the pvscan process killed in the first place.

Code:

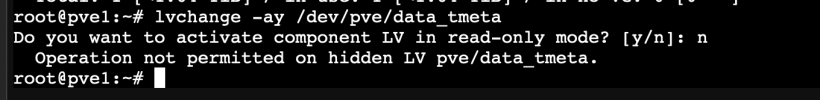

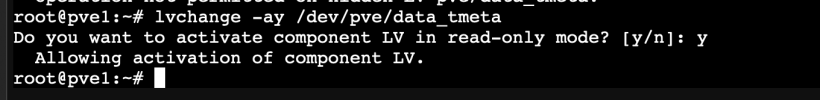

1. pvscan is started by the udev 69-lvm-metad.rules.

2. pvscan activates XYZ_tmeta and XYZ_tdata.

3. pvscan starts thin_check for the pool and waits for it to complete.

4. The timeout enforced by udev is hit and pvscan is killed.

5. Some time later, thin_check completes, but the activation of the thin pool never completes.

EDIT: INCREASING THE UDEV TIMEOUT DOES WORK

The boot did take longer, but it did not fail.

I have set the timeout to 600s (10min). Default is 180s (3min).

Some people may need even more time, depending on how many disks and pools they have, but above 10min I would just use --skip-mappings instead.

Edited

Code:

# nano /etc/udev/udev.conf

Added
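The exact line is not shown above. As a minimal sketch, assuming the relevant key is event_timeout (the option described in udev.conf(5)) and the value is the 600s mentioned earlier, the edit could be scripted like this. set_event_timeout is a hypothetical helper name, not something from udev itself.

```shell
#!/bin/sh
# Hypothetical helper: set event_timeout in a udev.conf-style file.
# Assumption: the key is "event_timeout" and takes a value in seconds.
set_event_timeout() {
    conf="$1"      # path to the config file, e.g. /etc/udev/udev.conf
    seconds="$2"   # timeout in seconds, e.g. 600
    if grep -Eq '^[[:space:]]*#?[[:space:]]*event_timeout=' "$conf"; then
        # An entry exists (possibly commented out): rewrite it.
        sed -E "s|^[[:space:]]*#?[[:space:]]*event_timeout=.*|event_timeout=${seconds}|" \
            "$conf" > "${conf}.tmp" && mv "${conf}.tmp" "$conf"
    else
        # No entry yet: append one.
        printf 'event_timeout=%s\n' "$seconds" >> "$conf"
    fi
}
```

On a real system this would be called as root, e.g. set_event_timeout /etc/udev/udev.conf 600, and the initramfs rebuilt afterwards. Back up the file first.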

Then I disabled the --skip-mappings option in lvm.conf by commenting it out (you may skip this step if you haven't changed your lvm.conf file).

Disabled

Code:

# thin_check_options = [ "-q", "--skip-mappings" ]
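To double-check which state lvm.conf is in before rebuilding the initramfs, something like the following may help. It is only a sketch: skip_mappings_active is a hypothetical helper name, and it assumes the option sits on a single line as in the snippets in this thread.

```shell
#!/bin/sh
# Hypothetical helper: succeeds (exit 0) if the given lvm.conf-style file
# has an uncommented thin_check_options line containing --skip-mappings.
skip_mappings_active() {
    grep -Eq '^[[:space:]]*thin_check_options[[:space:]]*=.*--skip-mappings' "$1"
}
```

For example: skip_mappings_active /etc/lvm/lvm.conf && echo "skip-mappings is still enabled"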

Then I updated the initramfs again (update-initramfs -u) with both changes in place, rebooted to test, and it worked.

I think I prefer it this way. The server should not be rebooted frequently anyway. It is a longer boot with more thorough tests (not sure why it takes so long, though).

Testing here, it took about 2m20s for the first pool to appear on the screen as "found" and 3m17s for all the pools to load. Then the boot quickly finished and the system was online. In my case, I was just above the 3min limit.
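Putting those timings next to the two timeout values, as a quick arithmetic sketch (to_seconds is just an illustrative helper):

```shell
#!/bin/sh
# Convert "minutes seconds" to seconds.
to_seconds() { echo $(( $1 * 60 + $2 )); }

first_pool=$(to_seconds 2 20)   # 140s until the first pool was found
all_pools=$(to_seconds 3 17)    # 197s until all pools were loaded
default_timeout=180             # udev default mentioned above
new_timeout=600                 # value set in udev.conf

echo "over the default by $(( all_pools - default_timeout ))s"      # 17s
echo "headroom with the new timeout: $(( new_timeout - all_pools ))s"  # 403s
```

So the boot missed the default limit by only 17 seconds, and 600s leaves plenty of margin on this machine.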

EDIT2: I wonder if there is some optimization I can do to the metadata of the pool to make this better. Also, one of my pools has two 2TB disks, but the metadata is only in one of them (it was expanded to the second disk). Not sure if this matters, but this seems to be the slow pool to check/mount.

Anyways, hope this helps someone.

Cheers