I updated our Proxmox VE 7 server this morning, and after the reboot the local-lvm storage was not available and the VMs would not start. These are the updates that were applied:

libpve-common-perl: 7.0-6 ==> 7.0-9

pve-container: 4.0-9 ==> 4.0-10

pve-kernel-helper: 7.0-7 ==> 7.1-2

qemu-server: 7.0-13 ==> 7.0-14

PVE reported that a reboot was required.

After the reboot, local-lvm showed 0 GB in the web GUI, and I got a "start failed" error when I tried to start VMs (see attachment).

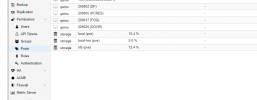

I ran pvdisplay, lvdisplay, and vgdisplay (see attachment). The lvdisplay output shows all of the logical volumes as "Not available".

I ran lvchange -ay pve/data to activate pve/data. local-lvm now shows as active with a usage percentage, but the VMs still won't start.

I then ran lvchange -ay against the "LV Path" of every VM disk (e.g. lvchange -ay /dev/pve/vm-209002-disk-0). After that, the logical volumes showed as available and the VMs started. However, I have to repeat this lvchange -ay command for every VM disk each time the PVE server is rebooted. Why does this happen, and how can I resolve it permanently?
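
As a stopgap until the root cause is found, the per-disk activation described above can be scripted. This is only a minimal sketch, not a permanent fix: the function name `activate_lvs` and the `DRY_RUN` switch are my own hypothetical helpers, and it assumes the volume group is named `pve` as in the commands above.

```shell
#!/bin/sh
# Hypothetical helper: activate every LV path read from stdin (one per line).
# Set DRY_RUN=1 to print the lvchange commands instead of running them.
activate_lvs() {
    while IFS= read -r lv_path; do
        # lvs pads its output with spaces, so strip all whitespace.
        lv_path=$(printf '%s' "$lv_path" | tr -d '[:space:]')
        # Skip empty lines.
        [ -n "$lv_path" ] || continue
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "lvchange -ay $lv_path"
        else
            # Keep lvchange from consuming the list we are reading.
            lvchange -ay "$lv_path" </dev/null
        fi
    done
}

# Usage on the PVE host, as root (assumes the VG is called "pve"):
#   lvs --noheadings -o lv_path pve | DRY_RUN=1 activate_lvs   # preview
#   lvs --noheadings -o lv_path pve | activate_lvs             # activate
```

Running it with `DRY_RUN=1` first shows exactly which volumes would be touched. Activating the whole volume group at once with `vgchange -ay pve` may achieve the same result in one command, but neither approach explains why the volumes are not activated automatically at boot.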
