Hi,

please check the health of your physical disk, e.g. usingThis just started happening to me, but after a power outage, not a software update.

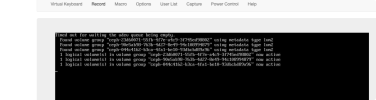

After the outage, the server appeared to hang for a long time, and then finally displayed "Timed out for waiting the udev queue being empty."

It then continued to boot, but without my LVM partition, which caused all my containers to fail to start.

I did the trick of increasing the timeout period, and this did work, but now my server takes an absolute age to boot, and, worse, my containers all take an age to start as well, for no apparent reason.

What actually causes this huge delay, why might it have happened after a power outage, and is there anything I can do to undo the damage?

smartctl -a /dev/XYZ. Are there any interesting messages in the system logs/journal?
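In case it is useful, here is a rough sketch of how the disk check and the log check could be combined. It assumes smartmontools and a systemd journal are available and that it is run as root. `/dev/XYZ` is still just a placeholder for the real disk. The function names and the keyword list are made up for illustration and are certainly not exhaustive.

```shell
#!/bin/sh
# Sketch only: helper names and the keyword pattern are illustrative assumptions.

# Keep only log lines that mention udev, LVM, or low-level disk trouble.
filter_storage_messages() {
    grep -i -E 'udev|lvm|i/o error|ata[0-9]+|nvme|smart'
}

# Print the full SMART report for one disk, then the warnings (and worse)
# from the current boot that look storage-related.
check_disk_and_logs() {
    disk="$1"
    smartctl -a "$disk"
    journalctl -b -p warning --no-pager | filter_storage_messages
}

# Example (as root), replacing the placeholder with the actual device:
#   check_disk_and_logs /dev/XYZ
```

In the SMART output, the overall health result and the attributes for reallocated or pending sectors are probably the first things to look at. In the journal, repeated I/O errors or link resets around the time of the udev timeout would point towards the disk.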