Hello.

I'm trying to understand a sync process I have and how much data storage it requires.

Here's my environment:

- Production: 2 servers, pve and pve/pbr
- DR site: a single pve/pbr

The production server runs backups every 2 hours, and a sync job replicates them to the pve/pbr server on the same schedule. My thinking is that this gives me a 2-hour window if something goes wrong on the production box and I need to restore something. Wouldn't it be better to replicate every hour, keeping the 2-hour backup schedule, to shorten the window?
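A rough way to reason about the window is to ask how old the newest backup on the DR side can get. The sketch below is a simplified, hypothetical model: backups finish instantly every 2 hours, each sync copies every backup that exists when it starts, and real backup and sync durations are ignored. The function name and parameters are made up for illustration.

```python
"""Worst-case age of the newest backup that has reached the DR site.

Simplified, hypothetical model: backups finish instantly every `backup_min`
minutes (at t = 0, backup_min, 2*backup_min, ...), syncs start every
`sync_min` minutes shifted by `sync_offset_min`, and each sync copies every
backup that exists when it starts. Real backup and sync durations are ignored.
"""


def worst_case_dr_age_min(backup_min: int, sync_min: int,
                          sync_offset_min: int = 0,
                          horizon_min: int = 7 * 24 * 60) -> int:
    """Return the largest age (in minutes) of the newest synced backup."""
    worst = 0
    # Skip the first cycles so both schedules are in steady state.
    start = 2 * max(backup_min, sync_min) + sync_offset_min
    for t in range(start, horizon_min):
        # Most recent sync that started at or before minute t.
        last_sync = (t - sync_offset_min) // sync_min * sync_min + sync_offset_min
        # Newest backup that already existed when that sync started.
        newest_synced = last_sync // backup_min * backup_min
        worst = max(worst, t - newest_synced)
    return worst


if __name__ == "__main__":
    cases = [
        ("2 h sync, starts right after each backup", 120, 0),
        ("2 h sync, starts 1 min before each backup", 120, 119),
        ("1 h sync, offset 59 min", 60, 59),
    ]
    for label, sync_min, offset in cases:
        hours = worst_case_dr_age_min(120, sync_min, offset) / 60
        print(f"{label}: worst case ~{hours:.1f} h")
```

Under these assumptions the three cases come out at roughly 2, 4 and 3 hours. Syncing hourly shrinks the worst case when the sync is badly aligned with the backups, but a 2-hour sync that starts right after each backup already matches the 2-hour backup interval, since no new data appears between backups.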

The retention on the production side (prune) is configured as last 5, daily 8, weekly 12, monthly 12, and yearly 2, with weekly garbage collection.

The DR side pulls every 2 hours from whatever is on the production side.

The DR-side prune job is set to last 8, daily 30, weekly 12, monthly 12, and yearly 5.
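Since each keep option retains at most N snapshots, the sum of the options gives an upper bound on snapshots per server. The sketch below assumes "weekly/monthly-12" means 12 weekly and 12 monthly, and that each of the 17 servers is one backup group; both readings are assumptions, and the dictionary names are hypothetical.

```python
# Hypothetical transcription of the prune settings described above, assuming
# "weekly/monthly-12" means keep-weekly=12 and keep-monthly=12.
PRODUCTION = {"keep-last": 5, "keep-daily": 8, "keep-weekly": 12,
              "keep-monthly": 12, "keep-yearly": 2}
DR = {"keep-last": 8, "keep-daily": 30, "keep-weekly": 12,
      "keep-monthly": 12, "keep-yearly": 5}
SERVERS = 17  # assumes one backup group per server


def max_snapshots_per_group(policy: dict) -> int:
    """Upper bound on retained snapshots for one backup group.

    Each keep-* option keeps at most N snapshots, so the total can never
    exceed the sum; it only reaches the sum once enough history exists.
    """
    return sum(policy.values())


if __name__ == "__main__":
    for name, policy in (("production", PRODUCTION), ("DR", DR)):
        per_group = max_snapshots_per_group(policy)
        print(f"{name}: <= {per_group} per server, <= {per_group * SERVERS} total")
```

Under that reading it reports at most 39 per server (663 total) for production and at most 67 per server (1139 total) for DR.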

I have 17 servers using approx. 2.1 TB of space. My current backup storage on the DR side is approx. 8 TB and climbing.

Doing the math manually (number of servers × snapshots), I would end up with approx. 1244 backups/snapshots on the DR side. The same math means that every year I would need 2.1 TB for my yearly backups plus 11 TB of 'floating' storage. Does this sound correct? I didn't expect the data sprawl to be this large, especially the amount of 'floating' data required each year until the previous monthly/weekly/etc. snapshots drop off. This suggests I need at least 25 TB of space on the DR side.
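One way to make a storage estimate explicit is to separate how many snapshots are kept from how much unique data each one adds. If pve/pbr here is Proxmox Backup Server, its datastores deduplicate data in chunks shared between snapshots, so treating every snapshot as a full copy gives a pessimistic ceiling rather than a forecast. This sketch is hypothetical: `unique_fraction` is an unknown input that would have to be measured in this environment, and 67 snapshots per server assumes the DR policy is fully populated.

```python
def estimate_datastore_tb(full_tb: float, snapshots_per_server: int,
                          unique_fraction: float) -> float:
    """Very rough datastore size estimate.

    Hypothetical model: the first retained snapshot of each server stores the
    full data set, and every further retained snapshot adds `unique_fraction`
    of the full size in data that no other snapshot shares. unique_fraction=1.0
    means every snapshot is a full, independent copy.
    """
    if snapshots_per_server < 1:
        return 0.0
    if not 0.0 <= unique_fraction <= 1.0:
        raise ValueError("unique_fraction must be between 0 and 1")
    return full_tb * (1 + (snapshots_per_server - 1) * unique_fraction)


if __name__ == "__main__":
    FULL_TB = 2.1   # total across the 17 servers, from the figures above
    SNAPSHOTS = 67  # hypothetical: DR policy fully populated for every server
    for fraction in (1.0, 0.10, 0.05, 0.02):
        tb = estimate_datastore_tb(FULL_TB, SNAPSHOTS, fraction)
        print(f"unique fraction {fraction:.2f}: ~{tb:.1f} TB")
```

With a full copy per snapshot the model gives about 141 TB, far more than the ~8 TB reported above, which is why the per-snapshot unique fraction, not the snapshot count alone, drives the real number.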

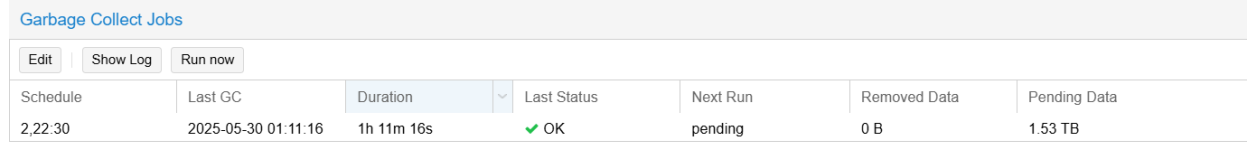

What am I missing? Also, even though I have over a year of data (backups/snapshots) on my system and my prune/GC jobs have run, I'm still seeing more files than I should. For instance, one server has 18 months of backups when it should only have 12 + 1 (yearly). The jobs complete without issues, but my data never gets reduced.
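To check how far back a policy like this can legitimately reach, here is a simplified simulation of one server's history. It assumes the prune options behave the way the Proxmox Backup Server documentation describes them: options are applied in the order last, daily, weekly, monthly, yearly; each keeps the newest snapshot of up to N periods; and a period already covered by an earlier option is skipped without counting toward N. This is an approximation written for illustration, not the actual implementation, and the history (a backup every 2 hours from December 2023 to mid-June 2025) is hypothetical.

```python
from datetime import datetime, timedelta


def period_key(ts: datetime, option: str) -> str:
    """Calendar period a snapshot falls into for one keep-* option."""
    if option == "keep-daily":
        return ts.strftime("%Y-%m-%d")
    if option == "keep-weekly":
        iso_year, iso_week, _ = ts.isocalendar()
        return f"{iso_year}-W{iso_week:02d}"
    if option == "keep-monthly":
        return ts.strftime("%Y-%m")
    if option == "keep-yearly":
        return ts.strftime("%Y")
    raise ValueError(f"unknown option: {option}")


def simulate_prune(snapshots, policy):
    """Return the snapshot times kept by `policy` (simplified model)."""
    newest_first = sorted(snapshots, reverse=True)
    kept = set(newest_first[:policy.get("keep-last", 0)])
    for option in ("keep-daily", "keep-weekly", "keep-monthly", "keep-yearly"):
        limit = policy.get(option, 0)
        # Periods that already hold a snapshot kept by an earlier option are
        # skipped and do not count toward this option's limit.
        covered = {period_key(ts, option) for ts in kept}
        chosen = set()
        for ts in newest_first:
            key = period_key(ts, option)
            if key in covered or key in chosen:
                continue
            if len(chosen) >= limit:
                break  # this option has used up its N periods
            chosen.add(key)
            kept.add(ts)  # the newest snapshot in the period is kept
    return kept


def backup_times(start: datetime, end: datetime, every=timedelta(hours=2)):
    """Hypothetical backup history: one snapshot every `every` from start to end."""
    times = []
    ts = start
    while ts <= end:
        times.append(ts)
        ts += every
    return times


DR_POLICY = {"keep-last": 8, "keep-daily": 30, "keep-weekly": 12,
             "keep-monthly": 12, "keep-yearly": 5}

history = backup_times(datetime(2023, 12, 1), datetime(2025, 6, 15, 22))
kept = simulate_prune(history, DR_POLICY)
print(f"kept {len(kept)} snapshots, oldest {min(kept)}")
```

Under those assumptions it keeps 63 snapshots, and the oldest is the last backup of 2023, roughly 17.5 months before the simulated "now": because 2024 and 2025 are already covered by the daily, weekly and monthly snapshots, keep-yearly reaches back to the previous year.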

I'm trying to understand a sync process that I have and the amt of the data storage.

Here's my environment.

production - 2 servers, pve and pve/pbr

dr site - single pve/pbr

The production server does backups every 2 hours and a sync job replicates it over to the pve/pbr server on the same schedule. . This thinking process is that i have a 2 hour window if something goes wrong on the production box and i need to restore something. wouldn't the better option be to replicate every hour with a 2 hour backup schedule to shorten the window?

The retention level on the production side (prune) is configured to last-5, daily 8, weekly/monthly-12, and yearly 2 with weekly garbage collection.

The dr side does a pull every 2 hours from what is in/on the production side.

The dr side prune job is set to last-8, daily-30, weekly/monthly-12, and 5 years.

I have 17 servers containing approx. 2.1TB of space. My current storage, of backups, on the Dr side is approx. 8TB and climbing.

Manually doing the math based off the # of servers * snapshots I would end up with approx. 1244 backup/snapshots at the DR side. The same math means that every year I would have 2.1TB for my yearly backups and a 'floating storage' space of 11Tb. Does this sound correct? I didn't think the data sprawl would be this big, especially the amt of 'floating' data required each year until the previous month/weekly/etc drop off. This seems to indicate that I need at least 25TB of space on the Dr side.

What am I missing? Also, even though i have over 1 year of data (backup/snapshots) on my system and my prune/GC jobs have run - I'm still showing more files than I should. for instance: 1 server has 18 months of backups, and it should only 12 + 1 (yearly). The jobs complete w/o issues but my data never get's reduced.