Hi,

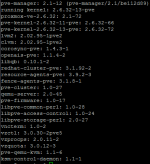

I have two identical systems, both Asus P6X58-E WS, that each have two Intel 82574L NICs which refuse to function properly. I have just installed Proxmox VE and upgraded to the latest version, 2.1-12, running kernel 2.6.32-12-pve.

To get the NICs working I have to run /etc/init.d/networking stop and then /etc/init.d/networking start, and even that does not always work. I then edit /etc/network/interfaces and change eth0 to eth1, and it works until the next reboot. After a reboot the behavior is back: the NICs are not working. I have tested each NIC with an Ubuntu 10.04 Live CD and they function correctly there.

What must I do to get these Intel NICs to function properly?
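
For reference, the eth0 to eth1 edit described above would look roughly like this on a stock Proxmox VE install. This is only a sketch: it assumes the default vmbr0 bridge layout, and the addresses are placeholders, not values taken from these machines.

```
# /etc/network/interfaces (illustrative; addresses are placeholders)
auto lo
iface lo inet loopback

auto vmbr0
iface vmbr0 inet static
        address 192.168.1.10
        netmask 255.255.255.0
        gateway 192.168.1.1
        # assumed default was "bridge_ports eth0"; changed to eth1 as a workaround
        bridge_ports eth1
        bridge_stp off
        bridge_fd 0
```

The networking stop/start commands mentioned above then need to be run again for the change to take effect.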

Thank you,

dakota_winds
