You have a virtual network card without any physical limits, so no, this is not a bug. Traffic between two VMs on the same host and the same bridge never leaves the machine: internally it is just a memory copy, so you can see very high bandwidth that is not tied to any normal Ethernet link speed (remember, there is no real hardware). Treat these "huge" numbers as an upper limit rather than as guaranteed throughput.
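To get a feel for why a purely in-memory path can beat a physical link, here is a rough, hypothetical sketch that times a single-threaded memory copy in Python and expresses it in Gbit/s. It is only an illustration under loose assumptions: it measures a `bytearray` slice copy, not the actual virtio/bridge data path, and the function name and buffer sizes are made up for this example.

```python
import time


def memcpy_gbits_per_sec(size_mb: int = 256, repeats: int = 5) -> float:
    """Estimate single-threaded memory-copy throughput in Gbit/s.

    Hypothetical illustration only: times a bytes-to-bytearray copy,
    which is NOT the real virtio/vhost networking path.
    """
    src = bytes(size_mb * 1024 * 1024)  # zero-filled source buffer
    dst = bytearray(len(src))           # destination buffer of the same size
    best = float("inf")
    for _ in range(repeats):
        start = time.perf_counter()
        dst[:] = src                    # one full copy of the buffer
        best = min(best, time.perf_counter() - start)
    best = max(best, 1e-9)              # guard against a zero timer reading
    return len(src) * 8 / best / 1e9    # bits copied per second, in Gbit/s


if __name__ == "__main__":
    print(f"~{memcpy_gbits_per_sec():.1f} Gbit/s single-threaded memory copy")
```

The number you get depends entirely on the machine, which is exactly the point: without a physical link, the ceiling is set by CPU and memory bandwidth, not by an Ethernet standard.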

On one server here (with only 1 GbE cards) I get 6 Gbit/s between two virtual machines.

Sorry for resurrecting this very old thread, but I am only now getting around to deploying my Mellanox ConnectX-4 dual-port VPI 100 Gbps NIC inside Proxmox.

(Previously, I was using it with a bare metal install of CentOS.)

One of the ports is set to IB link type whilst the other port is set to ETH link type.

The IB port is connected to my 36-port 100 Gbps Mellanox IB switch.

The ETH port is connected point-to-point (2 nodes in total) via a DAC.

IB has SR-IOV enabled.

IB port0 has IPoIB assigned.

The ETH port has an IPv4 address assigned to it as well.

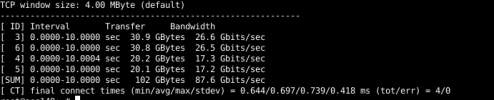

Using `iperf` (which, as I only learned today, is apparently different from `iperf3`) with 4 parallel streams host-to-host (over ETH), I get 87.6 Gbps.

And with eight parallel streams, I can get 96.9 Gbps.
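The host-to-host numbers above can be put in context with a little arithmetic: the average per stream and the share of the nominal 100 Gbps port speed. This is a small hypothetical helper; the 100 Gbit/s constant is the NIC's advertised rate from the post, and because iperf reports TCP payload throughput, 100% is not reachable in practice.

```python
LINE_RATE_GBPS = 100.0  # nominal port speed of the 100 Gbps NIC


def summarize(aggregate_gbps: float, streams: int) -> tuple[float, float]:
    """Return (average Gbit/s per stream, percent of nominal line rate)."""
    per_stream = aggregate_gbps / streams
    utilization = 100.0 * aggregate_gbps / LINE_RATE_GBPS
    return per_stream, utilization


# Host-to-host iperf results quoted in the post: (aggregate Gbit/s, streams).
for total, n in [(87.6, 4), (96.9, 8)]:
    per, util = summarize(total, n)
    print(f"{n} streams: {total} Gbit/s total, "
          f"{per:.2f} Gbit/s per stream, {util:.1f}% of line rate")
```

With 4 streams each one averages about 21.9 Gbit/s; with 8 streams the per-stream average drops to about 12.1 Gbit/s while the total gets closer to the line rate.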

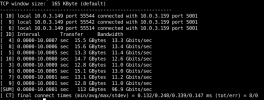

I created a Linux network bridge in Proxmox 7.4-17 using the 100 GbE ports, installed CentOS 7.7.1908 in a VM, installed `iperf`, and ran the test again. VM-to-VM, I only get about 15.7 Gbps, no matter whether I use 1 stream, 4 parallel streams, or 8 parallel streams.

With the `virtio` NIC, the sum stays the same.
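For scale, a quick hypothetical comparison of the VM-to-VM result against the best host-to-host result reported in this post (15.7 Gbps vs. 96.9 Gbps with 8 streams). The helper name is made up for illustration; it only does the division.

```python
HOST_TO_HOST_GBPS = 96.9  # bare host over ETH, 8 parallel streams (from the post)
VM_TO_VM_GBPS = 15.7      # virtio NIC, roughly the same for 1, 4 or 8 streams


def throughput_ratio(vm_gbps: float, host_gbps: float) -> float:
    """Fraction of host-to-host throughput achieved VM-to-VM."""
    return vm_gbps / host_gbps


ratio = throughput_ratio(VM_TO_VM_GBPS, HOST_TO_HOST_GBPS)
print(f"VM-to-VM reaches {ratio:.1%} of host-to-host throughput "
      f"({1 / ratio:.1f}x gap)")
```

That works out to roughly 16% of the bare-host figure, about a 6x gap.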