Is it a bug?

Sorry for resurrecting this very old thread, but I am only now getting around to deploying my Mellanox ConnectX-4 dual-port VPI 100 Gbps NIC for use inside Proxmox.

You have a virtual network card without any physical limits, so no, this is not a bug. Traffic from one VM to another VM on the same host and bridge is handled internally as a memory copy, so the bandwidth can be very high and does not follow normal Ethernet link speeds (remember, there is no real hardware). These "huge" numbers are an upper limit rather than a guaranteed throughput.

On one server here (with only 1 GbE cards), I get 6 Gbit/s between two virtual machines.

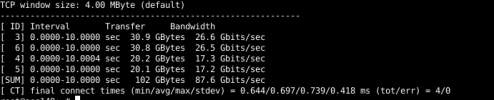
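To get a feel for why a pure memory copy can outrun a 1 GbE link by this much, here is a crude sketch that times copying a buffer in RAM and reports the rate in Gbit/s. It is only an illustration under loose assumptions: it copies a Python `bytearray` in user space on a single thread, which is not how virtio/vhost actually moves packets, and the function name `memcopy_gbps` is hypothetical.

```python
import time


def memcopy_gbps(size_mib: int = 64, repeats: int = 5) -> float:
    """Crude single-threaded memory-copy rate in Gbit/s (illustrative only).

    Copies a zero-filled bytearray `repeats` times and keeps the fastest run.
    This is NOT how virtio/vhost moves packets; it only shows the order of
    magnitude a plain in-memory copy can reach compared with a physical link.
    """
    src = bytearray(size_mib * 1024 * 1024)
    best = float("inf")
    for _ in range(max(1, repeats)):
        start = time.perf_counter()
        _copy = bytes(src)  # one full copy of the buffer
        best = min(best, time.perf_counter() - start)
    bits = len(src) * 8
    return bits / max(best, 1e-9) / 1e9


if __name__ == "__main__":
    print(f"~{memcopy_gbps():.1f} Gbit/s for a single-threaded memory copy")
```

The result depends heavily on the machine, so treat it as a rough ceiling for comparison, not a prediction of VM-to-VM iperf numbers.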

With iperf (which, as I only learned today, is different from iperf3) and 4 parallel streams, host-to-host (over Ethernet), I can get 87.6 Gbps.

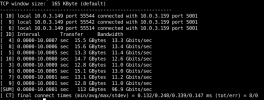
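When comparing runs with different numbers of parallel streams (`-P` in iperf 2), it helps to pull the aggregate rate out of the report rather than reading it off by eye. The sketch below assumes iperf 2's default human-readable output, where each report line ends with a rate such as `Gbits/sec` and a `[SUM]` line is printed when several streams run; iperf3 and the CSV mode (`-y C`) use different formats. It also assumes a single final report (no `-i` interval reporting). The function name `total_gbps` is hypothetical.

```python
import re

# Rate at the end of an iperf 2 report line, e.g. "... 9.38 Gbits/sec".
# Assumes iperf 2's default human-readable format (not iperf3, not -y C).
RATE_RE = re.compile(r"([\d.]+)\s+([KMG])bits/sec\s*$")
TO_GBPS = {"K": 1e-6, "M": 1e-3, "G": 1.0}


def total_gbps(report: str) -> float:
    """Return the [SUM] rate in Gbit/s, or the sum of per-stream rates if absent."""
    per_stream = []
    for line in report.splitlines():
        match = RATE_RE.search(line)
        if not match:
            continue  # headers, window-size lines, etc.
        gbps = float(match.group(1)) * TO_GBPS[match.group(2)]
        if line.lstrip().startswith("[SUM]"):
            return gbps  # iperf 2 already aggregated the parallel streams
        per_stream.append(gbps)
    return sum(per_stream)  # single-stream run, or no [SUM] line present
```

For example, saving the client output to a hypothetical file `iperf-host.txt` and calling `total_gbps(open("iperf-host.txt").read())` gives one number per run that can be compared across 1, 4 and 8 streams.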

… iperf and ran the test again: VM-to-VM, I can only get about 15.7 Gbps, and it doesn't matter whether I use 1 stream, 4 parallel streams, or 8 parallel streams. … virtio NIC, it stays the same.
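A quick bit of arithmetic on the figures above: if the VM-to-VM aggregate stays flat at about 15.7 Gbps, each extra stream just gets a smaller share of the same total, and the whole is a small fraction of the host-to-host result. The sketch below only restates the reported numbers (87.6 Gbps host-to-host, about 15.7 Gbps VM-to-VM, 100 Gbps nominal port speed); the helper name `summarize` is hypothetical.

```python
HOST_TO_HOST_GBPS = 87.6  # host-to-host iperf result reported above
VM_TO_VM_GBPS = 15.7      # VM-to-VM result, roughly flat for 1, 4 and 8 streams
LINE_RATE_GBPS = 100.0    # nominal ConnectX-4 port speed


def summarize(aggregate_gbps: float, streams: int) -> tuple[float, float]:
    """Return (Gbit/s per stream, fraction of the host-to-host result)."""
    return aggregate_gbps / streams, aggregate_gbps / HOST_TO_HOST_GBPS


if __name__ == "__main__":
    print(f"host-to-host: {HOST_TO_HOST_GBPS / LINE_RATE_GBPS:.1%} of line rate")
    for n in (1, 4, 8):
        per_stream, frac = summarize(VM_TO_VM_GBPS, n)
        print(f"VM-to-VM, {n} stream(s): {per_stream:.2f} Gbit/s per stream, "
              f"{frac:.1%} of host-to-host")
```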