Hi,

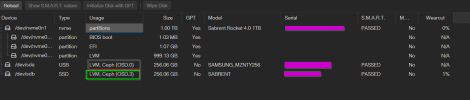

I created a 3-node Proxmox cluster with three Lenovo M720Q machines (for simplicity, I'll call the nodes N1, N2 and N3).

Then I added 4 disks (D1, D2, D3 and D4).

Everything was working fine.

Then I moved all the SFF PCs and the disks from my desk to the rack, but unfortunately I did not write down the association between the 4 disks and the 3 nodes.

OK, don't laugh at me.

The question now is:

Is there any way to work out which of the 4 disks belonged to which of the 3 nodes, so that I can get the Ceph cluster up and running again?

I had created only a few VMs, so if there is no way, I'll start again from scratch, but I'd prefer not to.

Thank you very much for taking the time to answer my question.

Paul.
