Hey all,

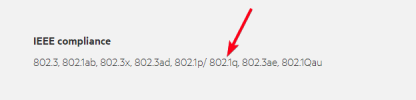

I have a DL Gen10 server into which I installed an HPE dual-port 10Gb 560FLR-SFP+ adapter.

I've found that it will not pass traffic over bond0 (LACP). An Intel X520-T2 with the same bond0/LACP interface config connects without issue.

The switch shows LACP as communicating and connected.

Does anyone see an issue in the config that would explain why the bond isn't communicating?

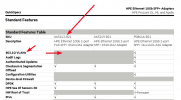

Here's what iLO sees:

Thanks!!!


My network config:

```
auto lo
iface lo inet loopback

auto eno1
iface eno1 inet manual

auto eno2
iface eno2 inet manual

auto bond0
iface bond0 inet manual
        bond-slaves eno1 eno2
        bond-miimon 100
        bond-mode 802.3ad
        bond-xmit-hash-policy layer2+3

auto vmbr0
iface vmbr0 inet static
        address 10.##.###.###/24
        gateway 10.###.###.1
        bridge-ports bond0
        bridge-stp off
        bridge-fd 0
        bridge-vlan-aware yes
        bridge-vids 2-4094
```
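Because the switch side reports LACP as up, it may help to look at what the host's bonding driver thinks. Below is a minimal Python sketch, assuming the Linux bonding driver's `/proc/net/bonding/bond0` text format (field names can differ slightly between kernel versions). It flags two common 802.3ad symptoms: a partner MAC of all zeros (usually meaning no LACPDUs were received from the switch) and bond members that ended up in a different aggregator than the active one. The function names and checks are my own illustration, not part of any existing tool.

```python
"""Rough LACP health check for a Linux 802.3ad bond (a sketch, not a tool).

Assumes the kernel bonding driver's /proc/net/bonding/<bond> text format;
exact field names can vary between kernel versions.
"""
import re
import sys
from pathlib import Path

ZERO_MAC = "00:00:00:00:00:00"


def parse_bond_status(text):
    """Return (active_aggregator_id, partner_mac, {member: {field: value}})."""
    # Everything before the first "Slave Interface:" line is bond-level info;
    # each following section describes one bond member.
    header, *member_sections = re.split(r"^Slave Interface: ", text, flags=re.M)

    agg = re.search(r"Active Aggregator Info:\s+Aggregator ID: (\d+)", header)
    partner = re.search(r"Partner Mac Address: (\S+)", header)
    active_id = agg.group(1) if agg else None
    partner_mac = partner.group(1) if partner else None

    members = {}
    for section in member_sections:
        lines = section.splitlines()
        fields = {}
        for line in lines[1:]:
            # Split on the first colon only, so MAC address values stay intact.
            key, sep, value = line.partition(":")
            # Keep the first occurrence of each key; nested LACPDU detail
            # lines further down may reuse key names.
            if sep and key.strip() not in fields:
                fields[key.strip()] = value.strip()
        members[lines[0].strip()] = fields
    return active_id, partner_mac, members


def find_problems(text):
    """Return human-readable descriptions of common LACP failure symptoms."""
    active_id, partner_mac, members = parse_bond_status(text)
    problems = []
    if active_id is None:
        problems.append("no active aggregator found (is the bond in 802.3ad mode?)")
    if partner_mac == ZERO_MAC:
        # An all-zero partner MAC usually means no LACPDUs were received.
        problems.append("partner MAC is all zeros: no LACP partner detected")
    for name, fields in members.items():
        mii = fields.get("MII Status")
        if mii != "up":
            problems.append(f"{name}: MII status is {mii}")
        agg_id = fields.get("Aggregator ID")
        if active_id is not None and agg_id != active_id:
            problems.append(
                f"{name}: in aggregator {agg_id}, active aggregator is {active_id}"
            )
    return problems


def main(argv):
    bond = argv[1] if len(argv) > 1 else "bond0"
    path = Path("/proc/net/bonding") / bond
    if not path.exists():
        print(f"{path} not found (bonding driver not loaded, or wrong bond name?)")
        return
    for problem in find_problems(path.read_text()) or ["no obvious LACP problems found"]:
        print(problem)


if __name__ == "__main__":
    main(sys.argv)
```

Run on the host (for example as `python3 check_bond.py bond0`, where the file name is only an example), it prints each symptom it finds. Comparing its output for the 560FLR-SFP+ ports with the working X520-T2 setup may help narrow down where negotiation breaks.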

Thanks!!!