Just wanted to share my experience installing Proxmox Backup Server (PBS) as a VM in Unraid, as I ran into some problems that I couldn't find answers to anywhere on the internet.

My setup is a bare-metal Unraid 6.11.5, another bare-metal Proxmox VE, with an HP Aruba Instant On 1930 8G 2SFP Switch (JL680A) in between them.

Hope it helps whoever is struggling with this!

- Go to the VMs tab in your Unraid dashboard, then click the "Add VM" button.

- Then, in my case, I selected Debian as the OS.

- I left most options as they are, other than the following:

  - Initial memory: 4096 MB (this is the value I got from the PBS site, but you may set it higher or lower based on your use case)

  - OS Install ISO: Point this to the location of your PBS ISO. (The ISO path is set in Unraid dashboard -> Settings -> VM Manager -> Default ISO storage path)

  - Primary vDisk Size: 32G (again, this is the value I got from the PBS site)

  - Unraid Share Mode: Choose 9p mode for now so that you can save. (IMPORTANT: You'll need to switch this to Virtiofs mode after creating the VM.)

  - Unraid Share/Unraid Source Path: Choose the share that you want to use to store the backups, or type in the path manually.

  - Unraid Mount Tag: This is just a string to identify your source path in the VM. If you have chosen a share in "Unraid Share", this is populated automatically. Otherwise, type in an easily identifiable name for your source path.

- Uncheck "Start VM after creation".

- Click the "Create" button.

- My VM setup at a glance.

- For more details on VM setup, you can visit the following links:

  - Unraid wiki: https://wiki.unraid.net/Manual/VM_Management

  - Tutorial videos by @SpaceinvaderOne:

- If you have followed the steps above to install PBS as a VM, or you have an existing PBS VM, then you can proceed.

- Make sure your PBS VM is not running, then edit your VM.

- Click the toggle at the top right of the page to show "XML view".

- In the XML, find the `<memoryBacking>` block (circled in red in my screenshot). For Virtiofs to work, you'll need to change it to the following. You can refer to libvirt's "Sharing files with Virtiofs" page for more info.

```xml
<memoryBacking>
  <source type='memfd'/>
  <access mode='shared'/>
</memoryBacking>
```

- Now, click the "Update" button to save the changes.
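If you'd like to double-check from the Unraid terminal that the change was actually saved, a small helper like the one below should do it. This is just a sketch of my own (the function name `has_virtiofs_memory_backing` is made up). It assumes `virsh` is available in the Unraid terminal and that the XML comes from `virsh dumpxml`, which prints one element per line. `PBS` is a placeholder for your VM's name.

```shell
# Hypothetical helper: reads a libvirt domain XML on stdin and checks that it
# has the shared memfd memory backing that virtiofs needs.
has_virtiofs_memory_backing() {
  local xml
  xml=$(cat)
  # Accept either single or double quotes around the attribute values.
  if printf '%s\n' "$xml" | grep -q "<source type=['\"]memfd['\"]/>" &&
     printf '%s\n' "$xml" | grep -q "<access mode=['\"]shared['\"]/>"; then
    echo "memoryBacking looks OK for virtiofs"
  else
    echo "memoryBacking is missing memfd/shared; virtiofs will not work" >&2
    return 1
  fi
}

# Usage on the Unraid terminal ("PBS" is a placeholder VM name):
#   virsh dumpxml PBS | has_virtiofs_memory_backing
```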

- Edit your VM again the same way (with the VM stopped), but this time make sure the page is in "Form view" instead of "XML view".

- Now change Unraid Share Mode to Virtiofs Mode. You can also add more Virtiofs shares at this point.

- Now, click the "Update" button again to save your changes.

- Edit your VM once more (with the VM stopped), this time making sure the page is in "XML view" instead of "Form view".

- Look for the `<filesystem>` block in your VM's XML. You should see something like the one in my screenshot.

- In my case, although I was able to access the share in PBS with that configuration, both CT and VM backups failed for some reason. So I made the following change, and it works for me:

```xml
<filesystem type='mount' accessmode='passthrough'>
  <driver type='virtiofs' queue='1024'/>
  <binary path='/usr/libexec/virtiofsd' xattr='on'>
    <cache mode='always'/>
    <sandbox mode='chroot'/> <!-- remove this line -->
    <lock posix='on' flock='on'/>
  </binary>
  <source dir='/mnt/user/backup_proxmox'/>
  <target dir='backup_proxmox_virtiofs'/>
  <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/> <!-- keep the address line from your own XML; don't replace it with mine -->
</filesystem>
```

- Click the "Update" button again to save your changes, and we are done. (Note that if you update your VM settings again in "Form view" in the future, the changes made to `<filesystem>` in "XML view" may get overwritten, so you'll need to redo the `<filesystem>` change above.)

- Start your VM! (If this is your first startup, complete your PBS installation first.)

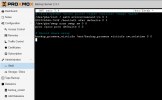
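The tag you set in the VM is exactly what the `mount` command in PBS needs later, so you can also pull it straight out of the saved XML from the Unraid terminal. Below is a sketch of a hypothetical `virtiofs_tags` helper that prints the `<target dir=...>` value of every `<filesystem>` block whose driver is virtiofs. It assumes `virsh dumpxml` output with one element per line, and `PBS` is again a placeholder VM name.

```shell
# Hypothetical helper: reads a libvirt domain XML on stdin and prints the mount
# tag (<target dir='...'>) of each <filesystem> block using the virtiofs driver.
virtiofs_tags() {
  # Split fields on quote characters, so $2 is the first quoted attribute value.
  awk -F "[\"']" '
    /<filesystem[ >]/        { in_fs = 1; is_vfs = 0; tag = "" }
    in_fs && /<driver type=/ { if ($2 == "virtiofs") is_vfs = 1 }
    in_fs && /<target dir=/  { tag = $2 }
    /<\/filesystem>/         { if (in_fs && is_vfs && tag != "") print tag; in_fs = 0 }
  '
}

# Usage on the Unraid terminal ("PBS" is a placeholder VM name):
#   virsh dumpxml PBS | virtiofs_tags
```

With my config this should print `backup_proxmox_virtiofs`. To confirm the `<sandbox>` line is really gone, `virsh dumpxml PBS | grep -c '<sandbox'` should print `0`.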

- Go to your PBS dashboard, then navigate to the Administration -> Shell page.

- Create a directory to mount the share that we pass through from Unraid. In my case, I created the following directory:

`mkdir /mnt/backup_proxmox`

- Now mount the share on the directory created previously with the following command:

`mount -t virtiofs backup_proxmox_virtiofs /mnt/backup_proxmox`

  - Note that you should change my `backup_proxmox_virtiofs` to the value that you have configured as the tag/target dir in your VM settings.

  - Note that you should change my `/mnt/backup_proxmox` to the directory that you have created for your share.
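If you end up running the mount more than once (or from a script), a slightly more careful version that creates the directory and skips the mount when something is already mounted there could look like the sketch below. The helper names are my own, it's meant to be run as root in the PBS shell, and the optional mounts-file argument only exists so the check can be pointed at a file other than `/proc/mounts` for testing.

```shell
# Hypothetical helpers for the PBS shell (run as root).

# Returns 0 if DIR appears as a mount point (field 2) in the mounts table.
# Note: /proc/mounts escapes spaces in paths as \040, so keep DIR space-free.
is_mounted() {
  local dir=$1 mounts=${2:-/proc/mounts}
  awk -v d="$dir" '$2 == d { found = 1 } END { exit !found }' "$mounts"
}

# Creates DIR if needed, then mounts the virtiofs share TAG on it unless
# something is already mounted there.
mount_virtiofs() {
  local tag=$1 dir=$2 mounts=${3:-/proc/mounts}
  mkdir -p "$dir" || return 1
  if is_mounted "$dir" "$mounts"; then
    echo "$dir is already mounted, skipping"
    return 0
  fi
  mount -t virtiofs "$tag" "$dir"
}

# Usage with my values (replace with your own tag and directory):
#   mount_virtiofs backup_proxmox_virtiofs /mnt/backup_proxmox
```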

- Once you are done mounting, check if you can create and read files from your share.
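For the read/write check, a quick sketch like this one works (the helper name and the probe file name `.pbs_rw_test.<pid>` are my own choices). It writes a small file into the directory, reads it back, compares the content, and deletes the file again.

```shell
# Hypothetical helper: checks that DIR can be written to and read back.
check_rw() {
  local dir=$1
  local probe="$dir/.pbs_rw_test.$$"
  local expected="pbs read/write test $$"
  # Write a probe file; fails if DIR is missing or not writable.
  if ! printf '%s\n' "$expected" > "$probe"; then
    echo "cannot write to $dir" >&2
    return 1
  fi
  # Read it back and compare with what was written.
  if [ "$(cat "$probe")" != "$expected" ]; then
    echo "read-back mismatch in $dir" >&2
    rm -f "$probe"
    return 1
  fi
  rm -f "$probe"
  echo "OK: $dir is readable and writable"
}

# Usage in the PBS shell:
#   check_rw /mnt/backup_proxmox
```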

- If you are able to do that, then type the following in your shell:

`cat /etc/mtab`

You should see a line that corresponds to your mount. In my case, it is:

`backup_proxmox_virtiofs /mnt/backup_proxmox virtiofs rw,relatime 0 0`

- If you would like to have your share mounted automatically on startup, copy the line(s) you found in `/etc/mtab` in the previous step and paste them into `/etc/fstab` (my `/etc/fstab` is shown in the screenshot).

- Restart PBS and check again whether your share is mounted automatically.

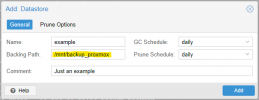
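If you'd rather not copy the line by hand, here's a sketch that does the same thing: it finds the virtiofs line for your tag in `/etc/mtab` and appends it to `/etc/fstab` only if an identical line isn't there already, so running it twice won't duplicate the entry. The helper name is made up, and the optional path arguments are only there for testing. I'd still back up `/etc/fstab` first.

```shell
# Hypothetical helper: copies the virtiofs line for TAG from the mtab file into
# the fstab file, unless an identical line is already there.
add_fstab_entry() {
  local tag=$1 mtab=${2:-/etc/mtab} fstab=${3:-/etc/fstab}
  local line
  # In mtab, field 1 is the source (the virtiofs tag) and field 3 the fs type.
  line=$(awk -v t="$tag" '$1 == t && $3 == "virtiofs" { print; exit }' "$mtab")
  if [ -z "$line" ]; then
    echo "no virtiofs mount with tag '$tag' found in $mtab" >&2
    return 1
  fi
  # -x: whole-line match, -F: fixed string, -s: stay quiet if fstab is missing.
  if grep -qsxF -- "$line" "$fstab"; then
    echo "already in $fstab: $line"
  else
    printf '%s\n' "$line" >> "$fstab"
    echo "added to $fstab: $line"
  fi
}

# Usage in the PBS shell (back up fstab first):
#   cp /etc/fstab /etc/fstab.bak
#   add_fstab_entry backup_proxmox_virtiofs
```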

- If everything is OK, you can now add a datastore on your share. In my case, it looks like this:
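The datastore can also be created from the PBS shell with `proxmox-backup-manager datastore create <name> <path>`. The sketch below wraps that in a check that the share is actually mounted first, so the datastore doesn't quietly end up on the VM's own 32G disk if the mount failed. The datastore name `unraid_backup` and the choice to put the datastore in a subdirectory named after it are my own assumptions, and the optional mounts-file argument is only there for testing.

```shell
# Hypothetical helper: creates a PBS datastore in a subdirectory of
# MOUNT_POINT, but only if MOUNT_POINT is currently a mount point.
create_datastore() {
  local name=$1 mount_point=$2 mounts=${3:-/proc/mounts}
  # Field 2 of the mounts table is the mount point.
  if ! awk -v d="$mount_point" '$2 == d { found = 1 } END { exit !found }' "$mounts"; then
    echo "$mount_point is not mounted; refusing to create datastore" >&2
    return 1
  fi
  if ! command -v proxmox-backup-manager >/dev/null 2>&1; then
    echo "proxmox-backup-manager not found; run this in the PBS shell" >&2
    return 1
  fi
  # Assumption: one subdirectory per datastore, named after the datastore.
  proxmox-backup-manager datastore create "$name" "$mount_point/$name"
}

# Usage in the PBS shell ("unraid_backup" is a made-up datastore name):
#   create_datastore unraid_backup /mnt/backup_proxmox
```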

- Once the datastore is created, you can try to back up your CTs, VMs, or whatever you like to Unraid with PBS!
